Somehow in my last few jobs I’ve ended up becoming “the subtitles guy”. This is sometimes a slippery slope, because you then get asked to write the thought leadership posts about subtitles and captions on the internet. Some might say that’s a dry topic, but I disagree: there’s nothing more empowering than making sure everyone can enjoy your content, so buckle up for a wild ride!

This week we announced support for subtitles and captions in Mux Video. As we have in all our APIs, we’ve tried to keep the abstraction level high, while maintaining a powerful, feature rich product. We hope you’ll make use of this feature to make your content accessible to more viewers!

But how do captions and subtitles even work in streaming video, and what’s the difference between the two anyway? Let’s take a look.

First let’s just clear up that difference between captions and subtitles. The European Broadcasting Union actually have some great wording in the EBU-TTML specification that I love to quote:

The term “captions” describes on screen text for use by deaf and hard of hearing audiences.

Captions include indications of the speakers and relevant sound effects.

The term “subtitles” describes on screen text for translation purposes.

So if you see [crowd cheers] at the bottom of your screen, you’re looking at captions, if you don’t… then you might not even have captions turned on. Apple also often refer to “captions” as “SDH” - Subtitles for the deaf and hard of hearing.

For the sake of brevity, we’re going to use the word “captions” to represent both captions and subtitles in the rest of this article. We’re also not going to talk about “open captions” here, where the text is encoded visually into the content and thus can’t be enabled or disabled.

Types of Captions

There’s a variety of ways to encode, package, and deliver captions online. First, we’ll talk through the approaches, and then dig into the specifics of how we’re delivering captions to you in Mux Video.

Broadly speaking, there are two ways of carrying captions: in-band and out-of-band. In-band captions are contained within the video stream, while out-of-band captions are delivered separately from the video stream.

In band

You might have heard of something called 608 or 708 captions. These are both examples of systems that use “in-band” delivery. Both of these technologies stem from the US broadcasting specifications; other countries have their own variants which follow the same basic approach.

CEA-608 captions are an older technology which stems from the analog era, where closed captions data for the video was carried directly in the video transmission, in a line of the video content that wasn’t displayed unless the decoder was told to look for it. This was often referred to as “Line 21” captions. 608 captions have a very traditional, distinctive visual style, with no option for custom styling or colour information, and don’t support more than two languages.

CEA-708 captions are a more modern version of 608 captions, designed to be carried in a digital transmission alongside broadcast video, specifically inside MPEG transport streams for over-the-air transmission. 708 improves on 608 in a variety of ways, including customisable colours, limited positioning data, real multi-language support, and custom fonts.

While 608 and 708 captions are supported in a variety of smart TVs and set top boxes (collectively referred to as “over the top” or OTT devices), as well as many Android and iOS phones, the complexity caused by the requirement for the captions to be deeply intertwined with the video delivery means that these technologies aren’t best suited for a rapidly changing online ecosystem.

Why not? Well, modern subtitles and captions workflows are complex - text tracks often arrive and are replaced at a variety of times during the lifespan of a piece of content. For example, more translations may become available after content is initially published, as it becomes more popular in different countries than expected, or improved and re-aligned captions may become available for live programming the next morning. With in-band systems, you have to re-package your media with new captions files every time this happens, which is time consuming, potentially expensive in compute and storage, and bad for cache efficiency.

So how can we better deliver captions in an OTT dominated world?

Out of band

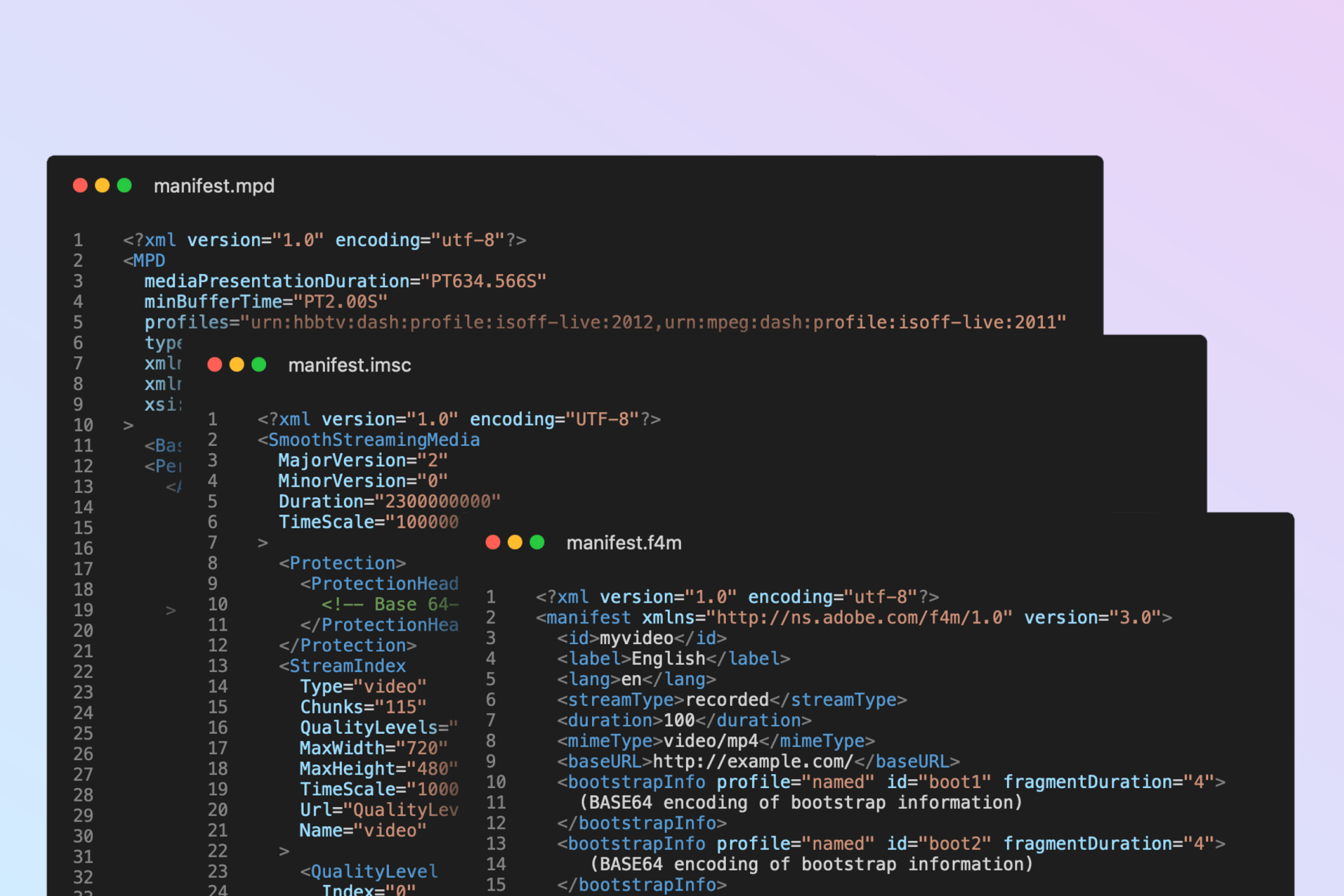

Out of band captions are now the most common and powerful way to deliver captions online. These standards work by delivering a separate text file (or collection of text files) containing standalone captions content, which is then referenced from the HLS or DASH manifest or playlist file you use to deliver your video and audio content.

And of course what would life be without a variety of standards for delivering closed captions and subtitles files on the internet? Captions are one of those spaces where there’s an absolute cacophony of standards to pick from, however, three of these formats are dominant: SRT, TTML, and WebVTT. Let’s take a glance at each of those formats.

SRT

Let’s start with the simplest of these, SubRip Text (SRT). Born of the application bearing the same name, SubRip is a simple text format with no official support for styling or positioning information. The contents of an SRT file look something like this:

11
00:00:28,000 --> 00:00:30,000
...you have your robotics, and I
just want to be awesome in space.

12
00:00:31,000 --> 00:00:33,000
Why don't you just admit that
you're freaked out by my robot hand?

While SRT continues to be a popular format in the ripping community, it’s not that popular in streaming video these days due to its lack of direct support on OTT devices, and limited feature set.

TTML

The first of the two W3C standards for timed metadata is Timed Text Markup Language (TTML). Not only does W3C have two standards for captions delivery, there’s also two versions of the TTML specification, TTML 1 and TTML 2. TTML 1 is still by far the most common, especially for online distribution, and the core of it looks something like this:

<!-- lots of XML boilerplate removed because no-one has time for that -->
<p begin="00:00:22.64" id="p1" end="00:00:26.56">
...you have your robotics, and I<br />
just want to be awesome in space.
</p>
<p begin="00:00:26.56" id="p2" end="00:00:30.60">
Why don't you just admit that<br />
you're freaked out by my robot hand?
</p>

TTML is a flexible specification which can be used for a variety of authoring, storage, and distribution use cases. There are also a variety of interchange specifications for TTML from various standards bodies around the world, focused on different areas (archive, distribution, etc.). These include EBU-TTML, DFXP, and SMPTE-TTML.
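To make the structure of that fragment a little more concrete, here is a minimal sketch of reading TTML paragraphs with Python's standard library. It assumes a simple TTML 1 document in the standard `http://www.w3.org/ns/ttml` namespace, with clock-time values of the form `HH:MM:SS.fraction`; the wrapper elements in `SAMPLE_TTML` and the function names are illustrative, not taken from any particular tool.

```python
import xml.etree.ElementTree as ET

TTML_NS = "{http://www.w3.org/ns/ttml}"

# The two paragraphs from the example above, wrapped in just enough
# boilerplate (an assumption) to make a parseable document.
SAMPLE_TTML = """
<tt xmlns="http://www.w3.org/ns/ttml" xml:lang="en">
  <body>
    <div>
      <p begin="00:00:22.64" id="p1" end="00:00:26.56">
        ...you have your robotics, and I<br />
        just want to be awesome in space.
      </p>
      <p begin="00:00:26.56" id="p2" end="00:00:30.60">
        Why don't you just admit that<br />
        you're freaked out by my robot hand?
      </p>
    </div>
  </body>
</tt>
"""


def clock_to_seconds(value):
    """Convert an HH:MM:SS.fraction clock time into seconds."""
    hours, minutes, seconds = value.split(":")
    return int(hours) * 3600 + int(minutes) * 60 + float(seconds)


def paragraph_lines(paragraph):
    """Return the text of a <p> as a list of lines, splitting on <br/>.

    Source newlines are also treated as line breaks, which is fine for
    this sample but is a simplification of TTML whitespace handling.
    """
    parts = [paragraph.text or ""]
    for child in paragraph:
        if child.tag == TTML_NS + "br":
            parts.append("\n")
        else:
            parts.append("".join(child.itertext()))
        parts.append(child.tail or "")
    raw = "".join(parts)
    return [line.strip() for line in raw.split("\n") if line.strip()]


def parse_ttml(ttml_text):
    """Return a list of (begin_seconds, end_seconds, lines) tuples."""
    root = ET.fromstring(ttml_text)
    cues = []
    for paragraph in root.iter(TTML_NS + "p"):
        begin = clock_to_seconds(paragraph.get("begin"))
        end = clock_to_seconds(paragraph.get("end"))
        cues.append((begin, end, paragraph_lines(paragraph)))
    return cues


if __name__ == "__main__":
    for begin, end, lines in parse_ttml(SAMPLE_TTML):
        print(begin, end, lines)
```

Real-world TTML uses many more timing and styling features than this handles, so treat it as a reading aid rather than a parser you would ship.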

WebVTT

Last, but not least, there’s WebVTT (The Web Video Text Tracks Format), which is a W3C standard for the interchange of text track resources. WebVTT is a simple format, which closely resembles SRT, while adding a nice variety of formatting and positioning capabilities. Let’s take a look at some WebVTT:

00:28.000 --> 00:30.000 position:90% align:right size:35%
...you have your robotics, and I
just want to be awesome in space.

00:31.000 --> 00:33.000 position:90% align:right size:35%
Why don't you just admit that
you're freaked out by my robot hand?

What’s super interesting is that WebVTT isn’t just used for subtitles and captions (though those are the primary use cases); it can also be used for other forms of structured metadata that you might want to deliver alongside your content. These closely model the HTML5 text track definitions, which include chapters, descriptions, and arbitrary metadata, alongside the more traditional subtitles and captions.

To us, WebVTT strikes an elegant balance between functionality, readability, and extensibility, being the only specification flexible enough to have a place to carry structured metadata. WebVTT is supported seamlessly on a comprehensive set of web players and OTT devices, which makes it great for streaming delivery.
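Because WebVTT so closely resembles SRT, converting between the two is mostly a matter of adding the `WEBVTT` header, dropping the numeric counters, and swapping the comma in each timestamp for a full stop. The sketch below shows that idea in Python; `srt_to_webvtt` is a hypothetical helper written for this post, it ignores styling tags, and it silently skips blocks it can't parse.

```python
import re

# Matches an SRT timing line such as "00:00:28,000 --> 00:00:30,000".
SRT_TIMING = re.compile(
    r"(\d{2}:\d{2}:\d{2}),(\d{3})\s*-->\s*(\d{2}:\d{2}:\d{2}),(\d{3})"
)


def srt_to_webvtt(srt_text):
    """Convert simple SRT text into WebVTT text."""
    normalised = srt_text.lstrip("\ufeff").replace("\r\n", "\n").strip()
    cues = []
    # SRT blocks are separated by one or more blank lines.
    for block in re.split(r"\n\s*\n", normalised):
        lines = block.split("\n")
        # Drop the numeric counter that precedes each SRT cue.
        if lines and lines[0].strip().isdigit():
            lines = lines[1:]
        if not lines:
            continue
        match = SRT_TIMING.match(lines[0].strip())
        if not match:
            continue  # not a cue we understand, skip it
        timing = "{}.{} --> {}.{}".format(*match.groups())
        cues.append("\n".join([timing] + lines[1:]))
    return "WEBVTT\n\n" + "\n\n".join(cues) + "\n"


if __name__ == "__main__":
    sample = (
        "11\n"
        "00:00:28,000 --> 00:00:30,000\n"
        "...you have your robotics, and I\n"
        "just want to be awesome in space.\n"
        "\n"
        "12\n"
        "00:00:31,000 --> 00:00:33,000\n"
        "Why don't you just admit that\n"
        "you're freaked out by my robot hand?\n"
    )
    print(srt_to_webvtt(sample))
```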

Captions in Mux

So what type of captions is Mux supporting?

We’re glad you asked!

At Mux, we currently deliver all our video over the Apple HTTP Live Streaming protocol (HLS), and while the HLS protocol supports a variety of technologies to deliver captions, the best option in our mind is WebVTT. HLS uses Segmented WebVTT, which is a variant of WebVTT delivered in smaller segments, usually around 30 seconds in duration.
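To give a feel for what segmenting involves, here is a rough Python sketch that assigns cues to fixed-length segments. It is not a description of how Mux does it: the `Cue` type, the function name, and the 30 second default are assumptions for illustration. The one rule it does try to honour is that a cue which is on screen during a segment appears in that segment, so a cue spanning a boundary is repeated in both. A real segmenter would also write a `WEBVTT` header and timestamp mapping into every segment file, which this leaves out.

```python
import math
from typing import Dict, List, NamedTuple


class Cue(NamedTuple):
    start: float  # seconds
    end: float    # seconds
    text: str


def assign_cues_to_segments(
    cues: List[Cue], segment_duration: float = 30.0
) -> Dict[int, List[Cue]]:
    """Map segment index -> cues displayed at any point in that segment."""
    segments: Dict[int, List[Cue]] = {}
    for cue in cues:
        first = int(cue.start // segment_duration)
        # A cue ending exactly on a boundary does not spill into the
        # next segment, hence ceil(...) - 1.
        last = max(first, math.ceil(cue.end / segment_duration) - 1)
        for index in range(first, last + 1):
            segments.setdefault(index, []).append(cue)
    return segments


if __name__ == "__main__":
    cues = [
        Cue(28.0, 30.0, "...you have your robotics"),
        Cue(29.0, 33.0, "a cue that straddles the 30s boundary"),
        Cue(61.0, 62.0, "much later"),
    ]
    for index, segment_cues in sorted(assign_cues_to_segments(cues).items()):
        print(index, [cue.text for cue in segment_cues])
```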

When you ingest captions into Mux, you’ll need to provide either a valid WebVTT file or a valid SRT file. You can either present this file as an input when you create an asset, or you can add it afterwards using the new tracks API.

Provide a captions file when you create your asset, or after, and we’ll go ahead and do all the magic required to make sure that your HLS manifest contains some captions files. This will allow you to simply pass the same Mux URL that you’ve always used to your player, and you’ll have captions [crowd cheers]!
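For the "or after" case, a request to add a text track to an existing asset might be built along these lines in Python. Treat this as a sketch under assumptions: the `/assets/{id}/tracks` path and HTTP Basic authentication reflect my understanding of the Mux API and should be checked against the API reference, the field names mirror the asset inputs used elsewhere in this post, and the asset ID and credentials are placeholders.

```python
import base64
import json
import urllib.request

MUX_API_BASE = "https://api.mux.com/video/v1"  # assumed API base URL


def build_add_track_request(
    asset_id,
    token_id,
    token_secret,
    track_url,
    language_code,
    name,
    closed_captions=False,
):
    """Build (but do not send) a request that adds a text track to an asset."""
    payload = {
        "url": track_url,
        "type": "text",
        "text_type": "subtitles",
        "closed_captions": closed_captions,
        "language_code": language_code,
        "name": name,
    }
    credentials = base64.b64encode(
        f"{token_id}:{token_secret}".encode("utf-8")
    ).decode("ascii")
    return urllib.request.Request(
        url=f"{MUX_API_BASE}/assets/{asset_id}/tracks",
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {credentials}",
        },
    )


if __name__ == "__main__":
    request = build_add_track_request(
        asset_id="YOUR_ASSET_ID",          # placeholder
        token_id="YOUR_TOKEN_ID",          # placeholder
        token_secret="YOUR_TOKEN_SECRET",  # placeholder
        track_url="https://tears-of-steel-subtitles.s3.amazonaws.com/tears-fr.vtt",
        language_code="fr",
        name="Française",
    )
    print(request.get_method(), request.full_url)
    # To actually send it: urllib.request.urlopen(request)
```

Building the request separately from sending it keeps the example easy to inspect without needing real credentials.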

If you want to try adding some captions to a Mux Video, why not try ingesting the Blender project’s “Tears of Steel” movie along with some WebVTT captions into your account, using the Asset POST below.

{
  "input": [
    {
      "url": "https://tears-of-steel-subtitles.s3.amazonaws.com/tos.mp4"
    },
    {
      "url": "https://tears-of-steel-subtitles.s3.amazonaws.com/tears-en.vtt",
      "type": "text",
      "text_type": "subtitles",
      "closed_captions": false,
      "language_code": "en",
      "name": "English"
    },
    {
      "url": "https://tears-of-steel-subtitles.s3.amazonaws.com/tears-fr.vtt",
      "type": "text",
      "text_type": "subtitles",
      "closed_captions": false,
      "language_code": "fr",
      "name": "Française"
    }
  ],
  "playback_policy": [
    "public"
  ]
}

In-Manifest referenced WebVTT

Let’s take a look under the hood at what’s happening in your HLS manifests when we add a WebVTT file. If you curl down your manifest, you should see a couple of changes. First, you’ll see that we’ve added a new subtitles group to the top of your manifest, it’ll look something like this:

#EXT-X-MEDIA:TYPE=SUBTITLES,GROUP-ID="sub1",CHARACTERISTICS="public.accessibility.transcribes-spoken-dialog",NAME="English",AUTOSELECT=YES,DEFAULT=NO,FORCED=NO,LANGUAGE="en-US",URI="https://this-url-is-very-long.com/hey-look-a-puppy.m3u8"

Scroll a little further, and you’ll see we also add references to this subtitles group to the URLs for the renditions:

#EXT-X-STREAM-INF:BANDWIDTH=3839791,AVERAGE-BANDWIDTH=3839791,CODECS="mp4a.40.2,avc1.64002a",RESOLUTION=1920x800,SUBTITLES="sub1"

Most things here should be pretty self-explanatory - we’re adding a new rendition, which contains subtitles (HLS refers to all WebVTT delivered content as subtitles), but there are three cryptic YES/NO flags in the subtitles group - AUTOSELECT, DEFAULT, and FORCED. These flags aren’t well understood (or well documented), so let’s take a look at what they’re supposed to do.
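If you'd rather inspect those flags programmatically than by eye, a small Python sketch like the one below can pull the subtitles renditions out of a master manifest. It assumes the manifest text has already been downloaded, and it handles only the attribute-list syntax seen in the lines above (quoted strings and bare values); `SAMPLE_MANIFEST` and the function names are illustrative.

```python
import re

# NAME=value pairs, where value is either a quoted string (which may
# contain commas) or a bare token that runs up to the next comma.
ATTRIBUTE = re.compile(r'([A-Z0-9-]+)=("[^"]*"|[^",]*)')

SAMPLE_MANIFEST = """#EXTM3U
#EXT-X-MEDIA:TYPE=SUBTITLES,GROUP-ID="sub1",CHARACTERISTICS="public.accessibility.transcribes-spoken-dialog",NAME="English",AUTOSELECT=YES,DEFAULT=NO,FORCED=NO,LANGUAGE="en-US",URI="https://this-url-is-very-long.com/hey-look-a-puppy.m3u8"
#EXT-X-STREAM-INF:BANDWIDTH=3839791,AVERAGE-BANDWIDTH=3839791,CODECS="mp4a.40.2,avc1.64002a",RESOLUTION=1920x800,SUBTITLES="sub1"
https://example.com/rendition.m3u8
"""


def parse_attribute_list(text):
    """Parse an HLS attribute list into a dict, stripping quotes."""
    return {name: value.strip('"') for name, value in ATTRIBUTE.findall(text)}


def subtitle_renditions(manifest):
    """Return the attributes of every EXT-X-MEDIA tag with TYPE=SUBTITLES."""
    prefix = "#EXT-X-MEDIA:"
    renditions = []
    for line in manifest.splitlines():
        line = line.strip()
        if line.startswith(prefix):
            attributes = parse_attribute_list(line[len(prefix):])
            if attributes.get("TYPE") == "SUBTITLES":
                renditions.append(attributes)
    return renditions


if __name__ == "__main__":
    for rendition in subtitle_renditions(SAMPLE_MANIFEST):
        print(
            rendition["NAME"],
            {flag: rendition.get(flag, "NO") for flag in ("AUTOSELECT", "DEFAULT", "FORCED")},
        )
```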

The mysterious three flags

First up, let’s take a look at what the HLS specification says about those magic three flags:

DEFAULT

The value is an enumerated-string; valid strings are YES and NO. If the value is YES, then the client SHOULD play this Rendition of the content in the absence of information from the user indicating a different choice. This attribute is OPTIONAL. Its absence indicates an implicit value of NO.

AUTOSELECT

The value is an enumerated-string; valid strings are YES and NO. This attribute is OPTIONAL. Its absence indicates an implicit value of NO. If the value is YES, then the client MAY choose to play this Rendition in the absence of explicit user preference because it matches the current playback environment, such as chosen system language.

If the AUTOSELECT attribute is present, its value MUST be YES if the value of the DEFAULT attribute is YES.

FORCED

The value is an enumerated-string; valid strings are YES and NO. This attribute is OPTIONAL. Its absence indicates an implicit value of NO. The FORCED attribute MUST NOT be present unless the TYPE is SUBTITLES.

A value of YES indicates that the Rendition contains content which is considered essential to play. When selecting a FORCED Rendition, a client SHOULD choose the one that best matches the current playback environment (e.g. language).

A value of NO indicates that the Rendition contains content which is intended to be played in response to explicit user request.

And let’s take a look at Apple’s recommendations regarding those flags:

4.6. If a subtitles track is intended to provide accessibility for the deaf and hard of hearing the AUTOSELECT attribute MUST have a value of “YES”.

[...]

5.8. If the content has forced subtitles and regular subtitles in a given language, the regular subtitles track in that language MUST contain both the forced subtitles and the regular subtitles for that language.

5.9. If your videos contain text burnt into the video and you have access to a version without the burn-in, you SHOULD use Forced Subtitles instead. (This allows you to easily translate into multiple languages. An example of when you might use forced subtitles is a science fiction film, where alien languages are translated into English.)

[...]

5.11. Forced subtitles SHOULD always have AUTOSELECT=YES.

One of the challenges here is that the HLS specification and associated recommendations aren’t meant as a guide for client side or player implementation, just as a standard for the streams that are produced. Many HLS players are based on reproducing Apple’s implementation in iOS and Safari.

Based on repeated reading of the specifications and a variety of device and player testing, here’s my interpretation of how a player should use these flags:

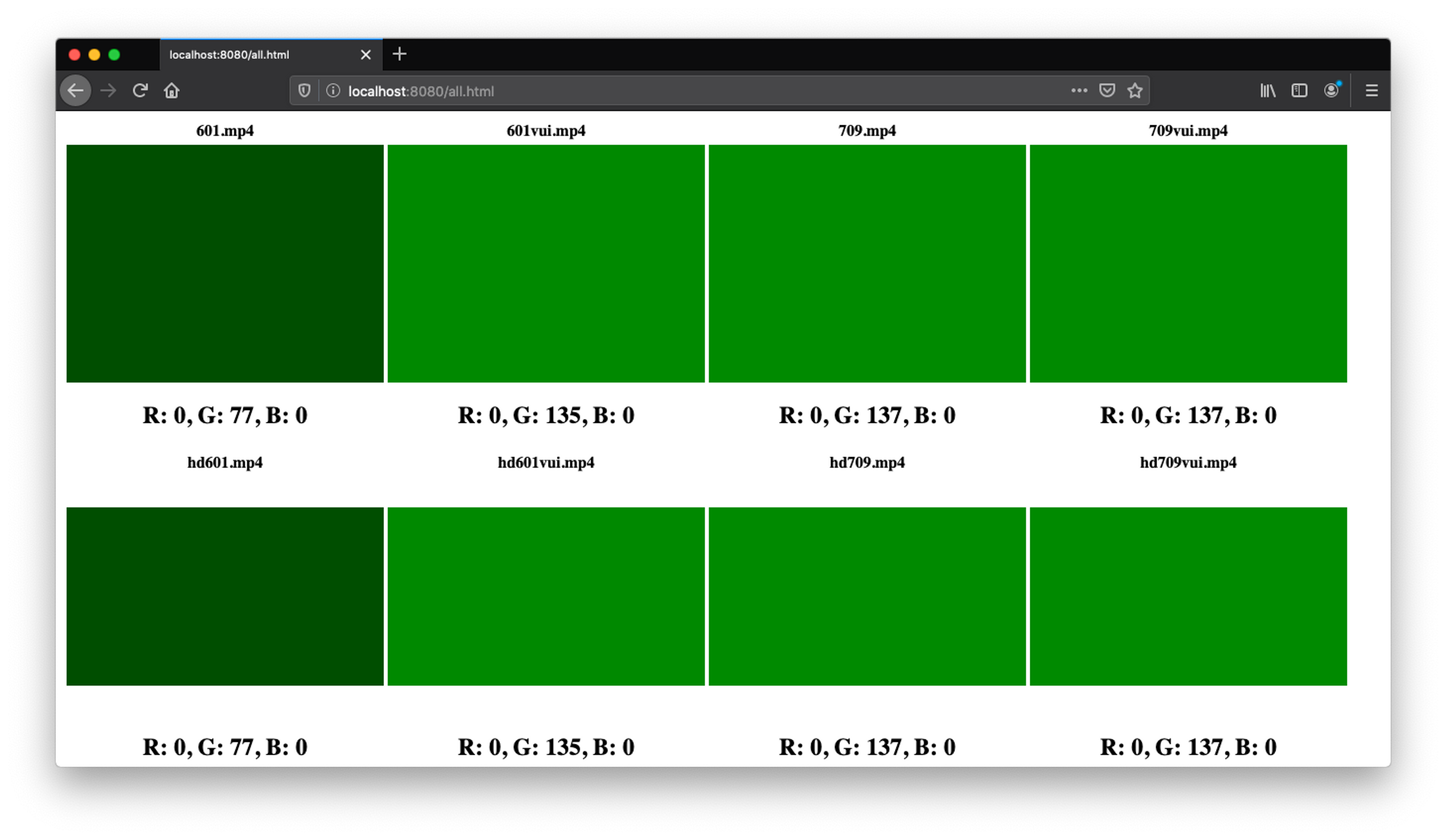

AUTOSELECT

AUTOSELECT exists to indicate to a player that this particular captions track is safe to activate automatically if the user environment implies this. This is, of course, a super vague definition. A practical example can be seen on iOS devices: hidden deep in the accessibility settings, there’s a toggle which lets iOS know that you are likely to want captions or subtitles enabled by default.

You can try this out with the copy of Tears of Steel you ingested into your Mux account (or try it on our copy here). Right now Mux sets AUTOSELECT on all your captions and subtitle tracks, so that they’re selected automatically when appropriate on many devices. It is worth noting, though, that Apple’s logic is a little more complicated here: iOS will look for a “captions” track first, and failing that, it’ll pick a “subtitles” track. My interpretation of that logic is that for someone with an auditory disability, some understanding of what’s going on is better than none.

AUTOSELECT is also used in a few other places in the HLS manifest, namely for alternate audio tracks. There are fairly few reasons you wouldn’t want AUTOSELECT set to YES; the example in the Apple documentation is for commentary audio tracks and associated captions tracks. If you come up against any issues with this, please let us know.

So AUTOSELECT is great, but what about if you want your captions to appear, even if the user hasn't indicated they want this?

Why would you want to do this? Well, in the era of unmuted video autoplay being mostly disabled inside browsers, it's becoming an increasingly popular strategy to enable captions on muted videos when playing. Just take a look at the more professionally produced content in the LinkedIn feed, for example.

There's a couple of flags you can use to try to achieve this in HLS:

DEFAULT

Practically speaking, DEFAULT is the flag which most players respect when they're loading an HLS manifest to indicate that a particular captions rendition should be enabled automatically without user interaction (ignoring, for example, the captions and SDH toggle on iOS). Indeed, that's how popular web players like Video.js and HLS.js, and, for the most part, Safari/iOS behave.

There's one interesting note here though: it appears to be intended for AUTOSELECT to supersede DEFAULT if a device or player thinks it can make a more informed decision about what the user desires. For example, a device set to a French locale may choose to select a French captions track which has AUTOSELECT set, in preference to a DEFAULT track in English which also has AUTOSELECT enabled.
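One way to read that interaction is sketched below in Python. This is only an interpretation of the behaviour described above, not the logic of any real player: the `TextTrack` type, the `prefers_captions` switch (standing in for something like the iOS accessibility toggle), and the language matching on the primary subtag are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class TextTrack:
    name: str
    language: str
    autoselect: bool = False
    default: bool = False


def _primary_language(tag: str) -> str:
    """Reduce a tag like 'fr-FR' to its primary subtag, 'fr'."""
    return tag.split("-")[0].lower()


def pick_initial_track(
    tracks: Sequence[TextTrack],
    device_language: Optional[str] = None,
    prefers_captions: bool = False,
) -> Optional[TextTrack]:
    """Choose the track to show before the user has picked anything."""
    default_track = next((t for t in tracks if t.default), None)

    # Nothing suggests captions should be on: no DEFAULT track in the
    # manifest and no preference expressed by the user's environment.
    if default_track is None and not prefers_captions:
        return None

    # An AUTOSELECT track matching the device language may supersede
    # the DEFAULT track.
    if device_language:
        wanted = _primary_language(device_language)
        for track in tracks:
            if track.autoselect and _primary_language(track.language) == wanted:
                return track

    if default_track is not None:
        return default_track

    # The user wants captions but nothing matches their language:
    # fall back to any track that is safe to select automatically.
    return next((t for t in tracks if t.autoselect), None)


if __name__ == "__main__":
    tracks = [
        TextTrack("English", "en", autoselect=True, default=True),
        TextTrack("Française", "fr", autoselect=True),
    ]
    print(pick_initial_track(tracks, device_language="fr-FR"))
    print(pick_initial_track(tracks, device_language="de-DE"))
```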

Today Mux doesn't give you any control over the DEFAULT flag in your HLS manifest. This is mainly because we want to make sure that we expose these sorts of options in the most meaningful manner, and in particular this means making sure that we release features that aren't specific to HLS, but map elegantly into other protocols like DASH, Smooth, or even SASH. We'll be giving you more control over the DEFAULT flag later this year, but please reach out if you have an urgent use case.

FORCED

FORCED is the strange and cryptic flag of the three. Its intended usage is for captions that cannot be turned off - as per the Apple example - “Aliens speaking in an alien language”. FORCED is a challenge however because while its intention is clear, its implementation in players is a little all over the place.

In the specification, Apple notes that all captions in the FORCED tracks must also be present in the non-forced versions of the tracks, and indeed this is how it appears in their official examples. In Apple's players, FORCED tracks deliberately don't appear in any menus (as per this Apple support thread), but also don't appear to actually be rendered to the user in any combination of magical flags that I could create. It’s unclear if this is a bug or not, so we've reported this to Apple and we're waiting for feedback.

Beyond that, the two most popular web players, HLS.js and Video.js, don't currently support FORCED consistently. For example, HLS.js treats FORCED captions in the same way as any others, while Video.js hides them from the menu, just like Apple’s players do. Neither player currently activates FORCED captions by default.

Many packagers, players and video platforms don’t support FORCED today, and those that do are notable in their inconsistencies. We don’t have any immediate plans to support it either, especially given Apple don’t even seem to be able to make FORCED captions work reliably.

A human readable summary of those magic flags

The takeaway you probably have from this is something along the lines of “Eugh, that’s complicated, why doesn’t the spec just tell you how these flags interact?” Well, that’s a fair question, and I don’t have a really good answer to it, but here’s my summary of the behaviours and their interactions:

FORCED: Should be hidden from the user’s captions selection menu, but must be rendered. Must be paired with AUTOSELECT, and the player should use heuristics (e.g. browser locale) to decide the most appropriate track. Doesn’t work consistently across devices, including iOS.

AUTOSELECT: Indicates a track should be activated automatically if there’s something in the user’s environment to imply that it should be, usually in response to an indication that the user has an accessibility requirement. Can be set on many tracks.

DEFAULT: Indicates that this track must be played by default, unless the user overrides this. Must be paired with AUTOSELECT. Should only be set on one track.
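The pairing rules in that summary are easy to check mechanically. Below is a small Python sketch that takes renditions as plain dictionaries of `EXT-X-MEDIA` attributes and reports anything that breaks them. It follows this post's summary, which is slightly stricter than the specification text in places (for example, it treats a missing AUTOSELECT the same as NO), and the function name and message wording are made up for the example.

```python
from collections import Counter


def check_flag_rules(renditions):
    """Return a list of human readable problems with the three flags.

    Each rendition is a dict of EXT-X-MEDIA attributes, for example
    {"TYPE": "SUBTITLES", "GROUP-ID": "sub1", "NAME": "English",
     "AUTOSELECT": "YES", "DEFAULT": "NO", "FORCED": "NO"}.
    """
    problems = []
    defaults_per_group = Counter()

    for rendition in renditions:
        label = rendition.get("NAME", "<unnamed>")
        is_default = rendition.get("DEFAULT") == "YES"
        is_autoselect = rendition.get("AUTOSELECT") == "YES"
        is_forced = rendition.get("FORCED") == "YES"

        if is_default:
            group = (rendition.get("TYPE"), rendition.get("GROUP-ID"))
            defaults_per_group[group] += 1
            if not is_autoselect:
                problems.append(f"{label}: DEFAULT=YES without AUTOSELECT=YES")

        if is_forced and not is_autoselect:
            problems.append(f"{label}: FORCED=YES without AUTOSELECT=YES")

        # The specification only allows FORCED on subtitles renditions.
        if "FORCED" in rendition and rendition.get("TYPE") != "SUBTITLES":
            problems.append(f"{label}: FORCED present on a non-SUBTITLES rendition")

    for (media_type, group_id), count in defaults_per_group.items():
        if count > 1:
            problems.append(
                f"group {group_id!r} ({media_type}): {count} DEFAULT tracks"
            )

    return problems


if __name__ == "__main__":
    renditions = [
        {"TYPE": "SUBTITLES", "GROUP-ID": "sub1", "NAME": "English",
         "AUTOSELECT": "NO", "DEFAULT": "YES"},
        {"TYPE": "SUBTITLES", "GROUP-ID": "sub1", "NAME": "Française",
         "AUTOSELECT": "YES", "DEFAULT": "YES"},
    ]
    for problem in check_flag_rules(renditions):
        print(problem)
```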

A test environment for those magic flags

In isolation, the magic flags I talked about earlier are fairly easy, but the really big challenge is understanding how these flags interact together in a particular environment. At Mux we love making video developers’ lives easier, so I put together some tooling to make it easier for you to test the behaviour of these flags. It’s a simple proxy service called hls-subtitles-vexillographer (a vexillographer is someone who designs flags), and you can find it here on GitHub.

There’s nothing really fancy here, just a little manifest proxy which looks for the first captions track in your HLS manifest, and lets you set the AUTOSELECT, DEFAULT, and FORCED flags using query parameters. All flags are set to "NO" by default, and you can manipulate them like this:

DEFAULT, AUTOSELECT and FORCED will be set to "NO"

http://localhost:4567/tears.m3u8

DEFAULT, AUTOSELECT will be set to "YES". FORCED will be set to "NO"

http://localhost:4567/tears.m3u8?default=YES&autoselect=YES

Custom playback ID. DEFAULT, AUTOSELECT and FORCED will be set to "NO":

http://localhost:4567/HDGj01zK01esWsWf9WJj5t5yuXQZJFF6bo.m3u8

If you use /tears.m3u8 as the path, we’ll serve you our test Tears of Steel manifest, with a single English language subtitles track. Otherwise, you can put any Mux public playback ID before the .m3u8 in the URL and we'll proxy that and return a version of that manifest with the first subtitles track modified.
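The core of a proxy like this is just a text rewrite. The Python sketch below is an independent illustration of the idea and not the tool's actual code: it reads the three flags from a URL's query string, defaulting each to "NO", and rewrites them on the first subtitles `EXT-X-MEDIA` line of a manifest. The function names are hypothetical, and the simple pattern matching assumes the flag names don't also appear inside a quoted attribute value.

```python
import re
from urllib.parse import parse_qs, urlsplit

FLAGS = ("DEFAULT", "AUTOSELECT", "FORCED")


def flags_from_url(url):
    """Read the flag values from query parameters, defaulting to NO."""
    query = parse_qs(urlsplit(url).query)
    flags = {}
    for flag in FLAGS:
        value = query.get(flag.lower(), ["NO"])[0].upper()
        flags[flag] = "YES" if value == "YES" else "NO"
    return flags


def set_flag(line, flag, value):
    """Set FLAG=value on an EXT-X-MEDIA line, appending it if missing."""
    pattern = re.compile(rf"(^|[:,]){flag}=(YES|NO)")
    if pattern.search(line):
        return pattern.sub(rf"\g<1>{flag}={value}", line, count=1)
    return f"{line},{flag}={value}"


def rewrite_first_subtitles_track(manifest, flags):
    """Apply the flags to the first TYPE=SUBTITLES rendition only."""
    rewritten = []
    done = False
    for line in manifest.splitlines():
        is_subtitles = line.startswith("#EXT-X-MEDIA:") and "TYPE=SUBTITLES" in line
        if is_subtitles and not done:
            for flag, value in flags.items():
                line = set_flag(line, flag, value)
            done = True
        rewritten.append(line)
    return "\n".join(rewritten) + "\n"


if __name__ == "__main__":
    manifest = (
        "#EXTM3U\n"
        '#EXT-X-MEDIA:TYPE=SUBTITLES,GROUP-ID="sub1",NAME="English",'
        'AUTOSELECT=YES,DEFAULT=NO,FORCED=NO,LANGUAGE="en-US",'
        'URI="https://this-url-is-very-long.com/hey-look-a-puppy.m3u8"\n'
    )
    flags = flags_from_url("http://localhost:4567/tears.m3u8?default=YES&autoselect=YES")
    print(rewrite_first_subtitles_track(manifest, flags))
```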

In summary

Captions and subtitles are an unexpectedly complex world of competing standards, and we’re trying hard to not only provide you the simplest tooling, but also to make it easier for video developers everywhere to improve their players and delivery technologies.

We’re excited to see our customers make their content more accessible, and we’re excited to bring more features out in the coming year to make content more accessible than ever. If you have any ideas for cool text track features we should build, please reach out!