If you’re a Mux Data media customer, you’re probably aware that you have the option to export the raw data collected by Mux into your own data warehouse. This is available either as a daily CSV file or as a data stream via Google Pub/Sub or AWS Kinesis, with the data stream allowing you to receive the data as views are completed in real time.

Why should you store this data? First, and most importantly, this is your data.

The Mux dashboard is a powerful tool to analyze your Quality of Experience metrics. For many customers, the dashboard covers all of their requirements, although one of the first limitations that may materialize is when you want to analyze data older than the maximum 100-day Mux retention policy. This alone is a reason to permanently store the Mux view data in your own data warehouse.

Another reason is that you might want to perform a complex query not directly available in the Mux dashboard. You could submit a product request — or with the data in your own data warehouse, you could simply help yourself. You may not know what insights you want to look at now, but when the time comes, you’ll be glad all your data was committed to a data warehouse.

For the purposes of this article, we will focus on creating a data reporting pipeline using tools within Google Cloud Platform, or GCP. We will set up a Pub/Sub data stream to receive the view exports from Mux as they complete, permanently persist the data in BigQuery, and then connect Looker to BigQuery so you can easily analyze the data that Mux has provided.

Set up a data pipeline

Before we get started, we need to download the latest version of video_view.proto from the mux-protobuf repository at https://github.com/muxinc/mux-protobuf/tree/main/video_view. This is used to create the Pub/Sub schema and generate the BigQuery schema.

Open up your Google Cloud Console at https://console.cloud.google.com/ and select the GCP project in which you want to ingest your Mux video views.

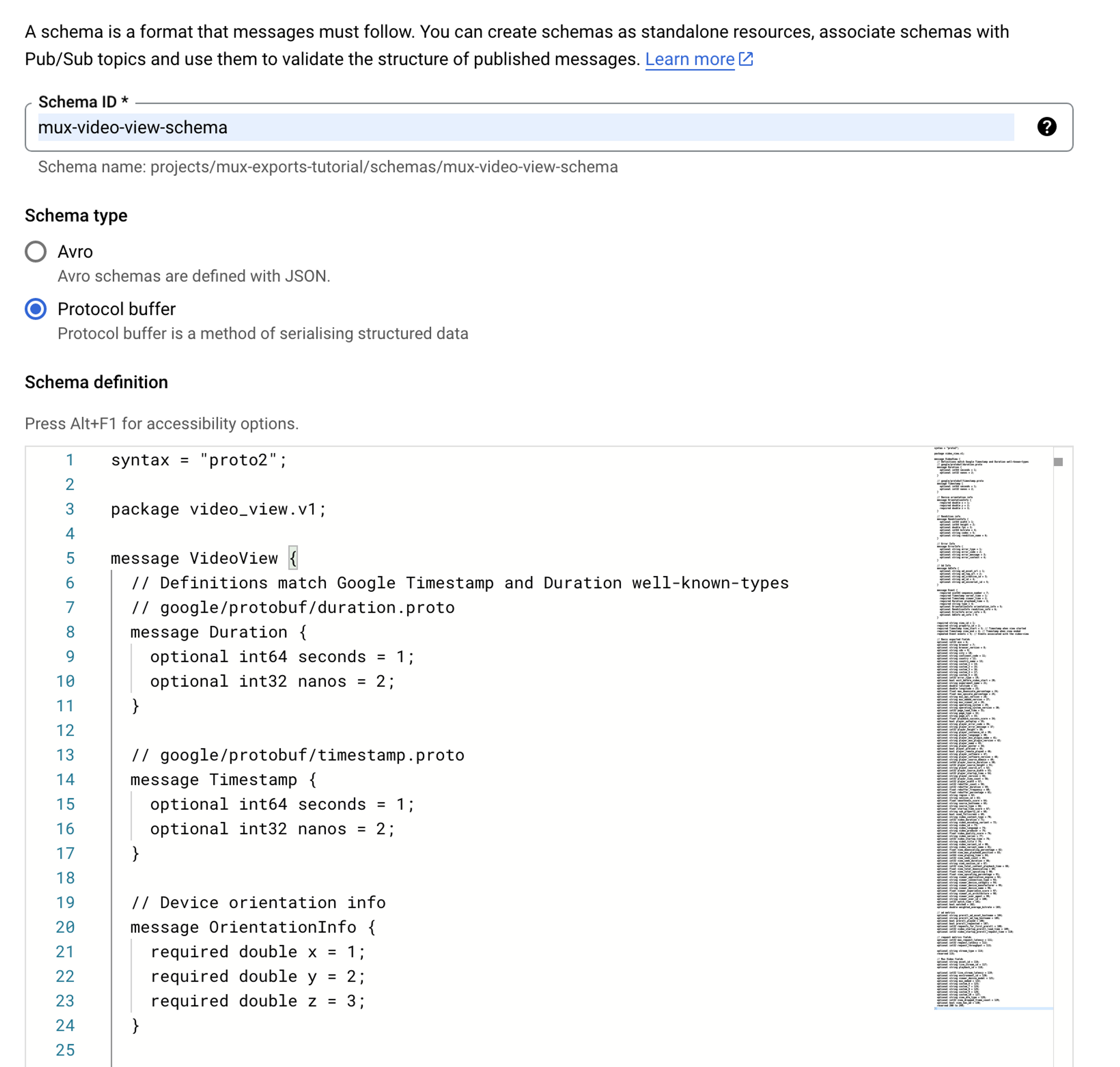

Create a Pub/Sub schema

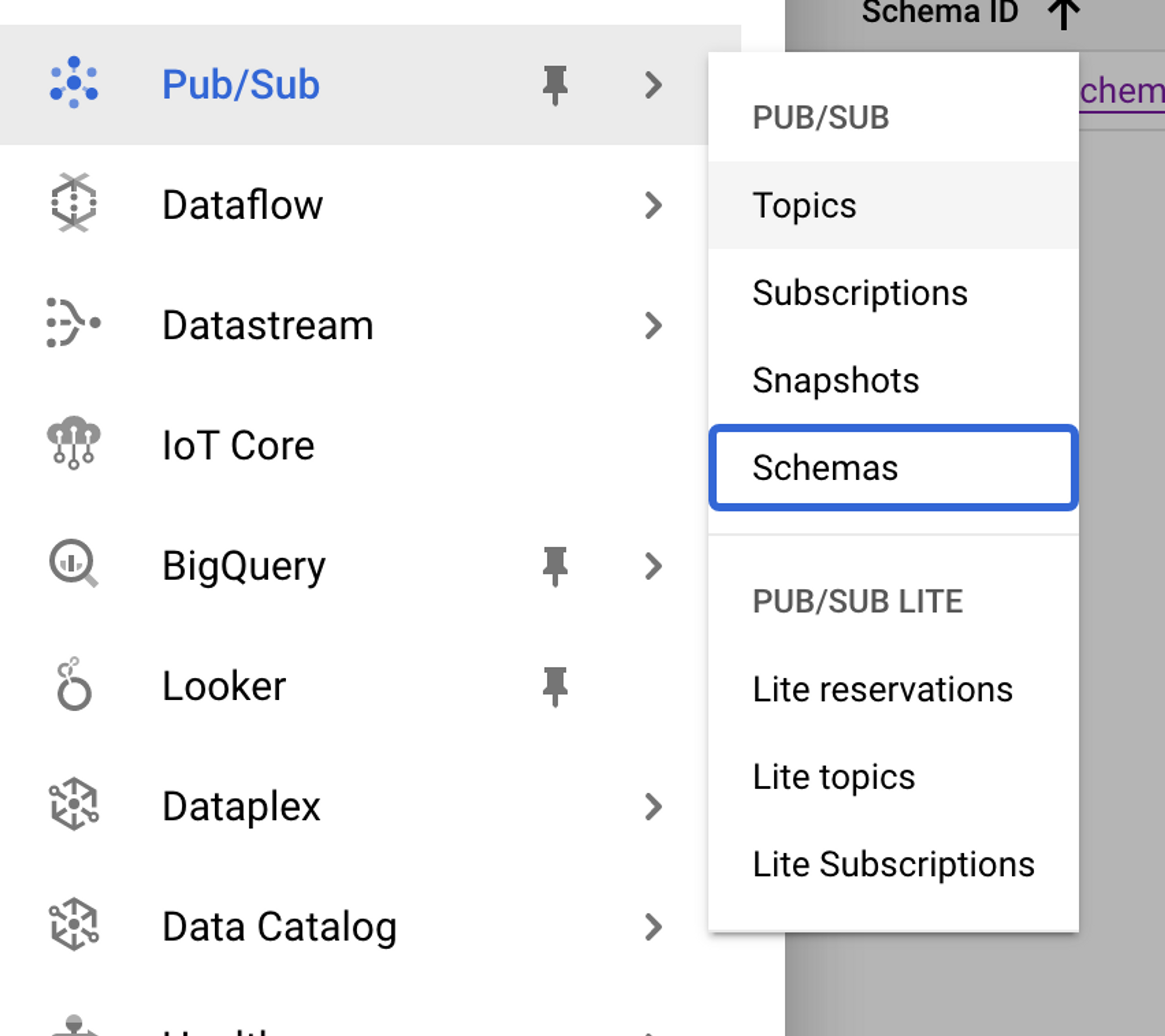

In the navigation menu, find Pub/Sub and click on Schemas.

Click on CREATE SCHEMA.

Create the schema

- Name your schema.

- Select Protocol buffer for the schema type.

- Copy and paste the contents of video_view.proto into the schema definition.

In the schema definition, find the sequence_number field under the Event object. The unsigned integer data type uint64 is unsupported in BigQuery, which will cause the BigQuery subscription step to fail. Change this data type to int64.

required int64 sequence_number = 7;

Validate the schema and click CREATE.

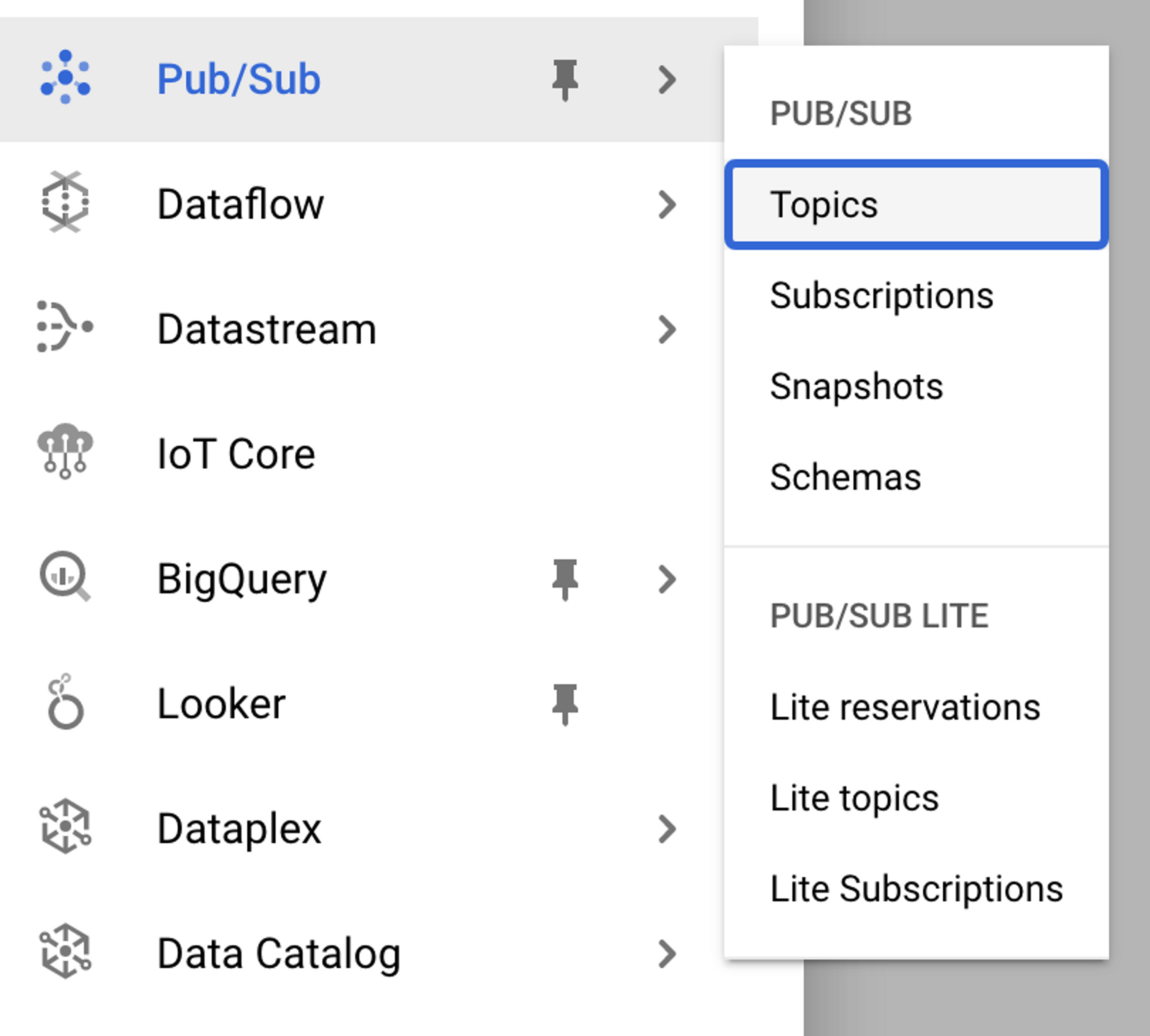

Create a Pub/Sub topic

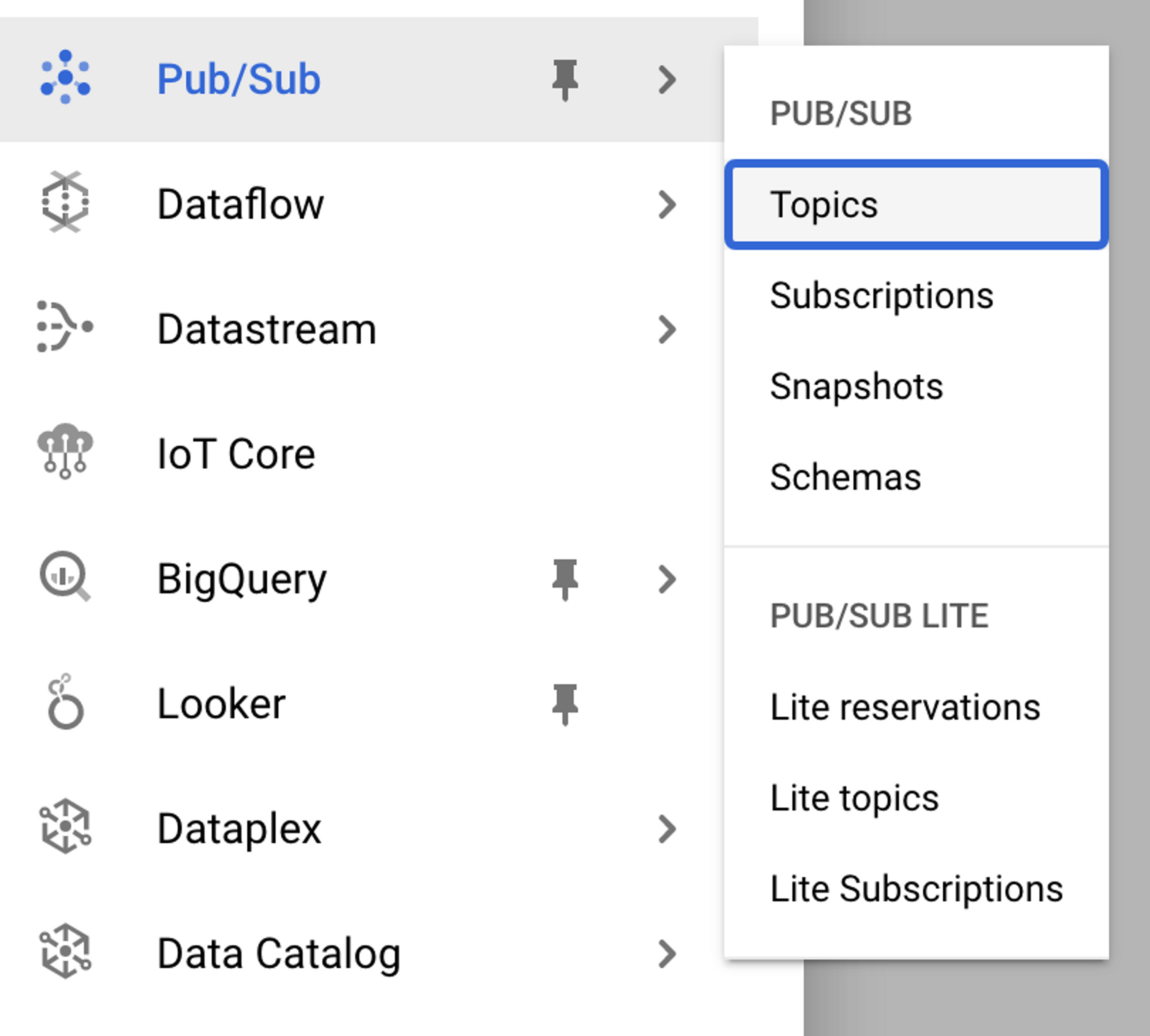

In the navigation menu, find Pub/Sub and click on Topics.

Click on CREATE TOPIC.

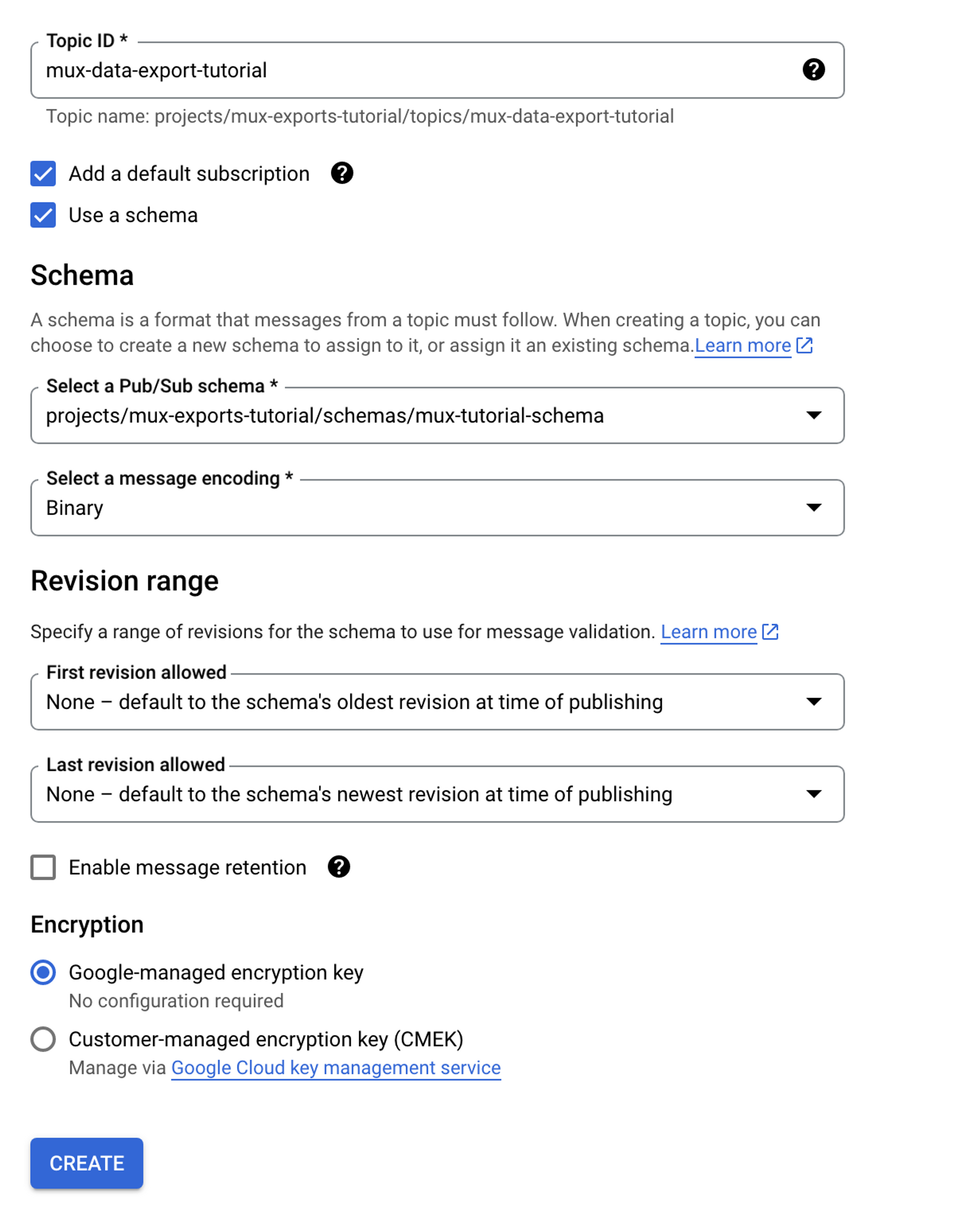

Create the topic

- Name your topic.

- Check the box for Use a schema.

- Select the Pub/Sub schema created above.

- Select Binary message encoding.

Click CREATE.

Create a BigQuery table

Before creating the BigQuery table, we must first create the schema definition from the same video_view.proto as used in the Pub/Sub topic.

Google has a plugin for the ProtocolBuffer compiler that can read protocol buffer schemas and convert them to BigQuery schema files in JSON: https://github.com/GoogleCloudPlatform/protoc-gen-bq-schema.

Install the ProtocolBuffer Compiler (https://github.com/protocolbuffers/protobuf) and this plugin, ensuring that both protoc and protoc-gen-bq-schema commands are available on your $PATH.

Open a terminal window and run the following command, substituting the paths to the output video_view.proto file:

protoc --bq-schema_out=/PATH/TO/OUTPUT -bq-schema_opt=single-message video_view.proto

This will generate a file named video_view.schema in JSON format.

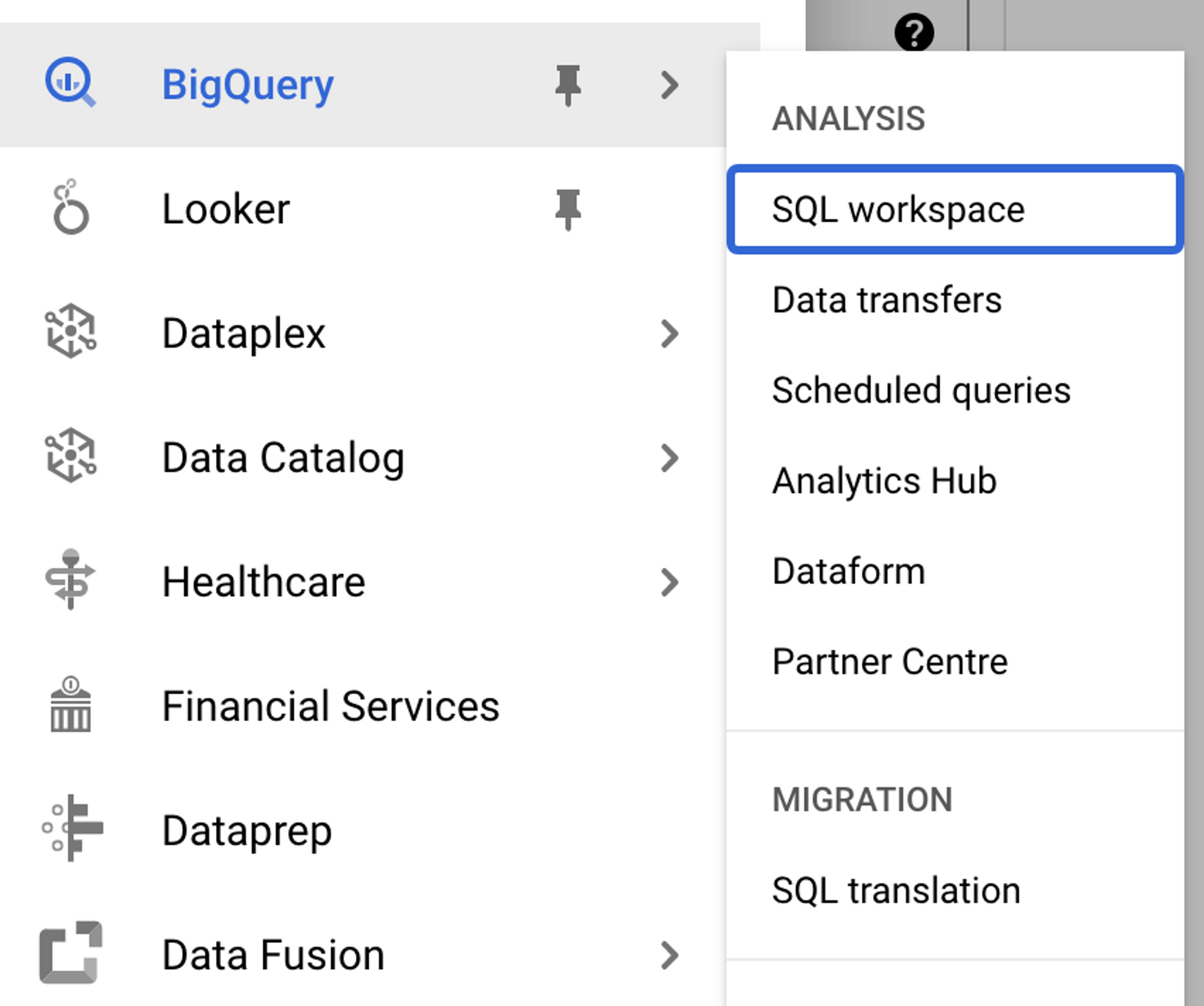

In the Navigation menu, find BigQuery and click on SQL workspace.

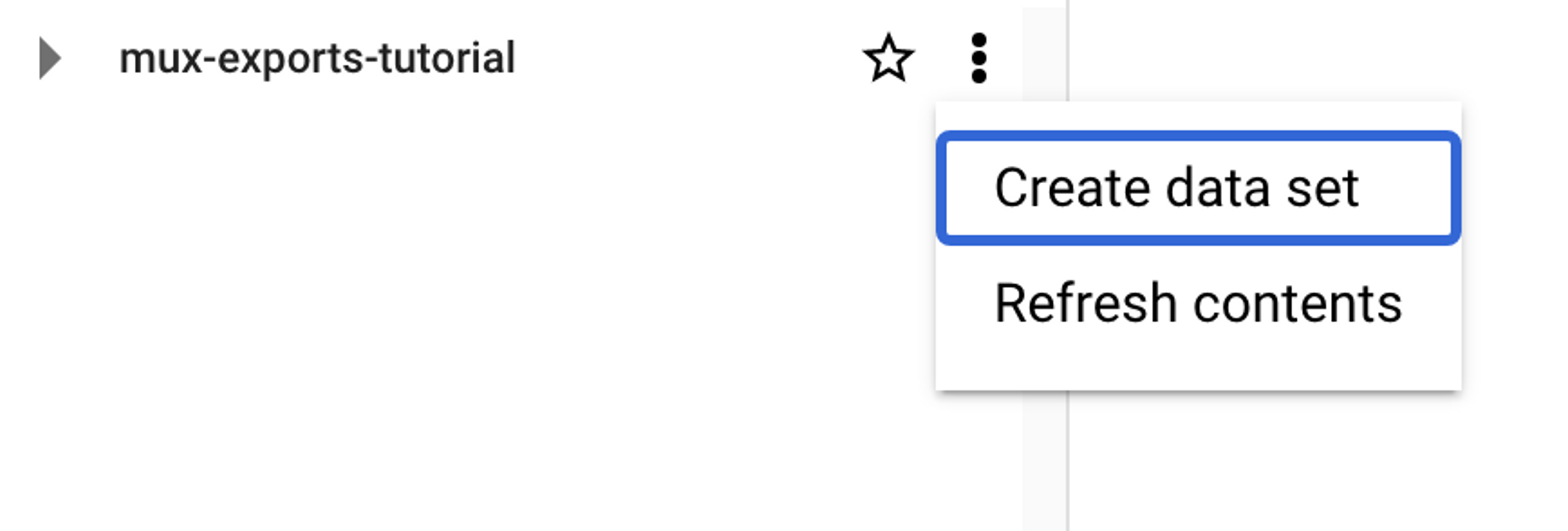

If you haven’t already, create a data set in BigQuery in which you can create your table.

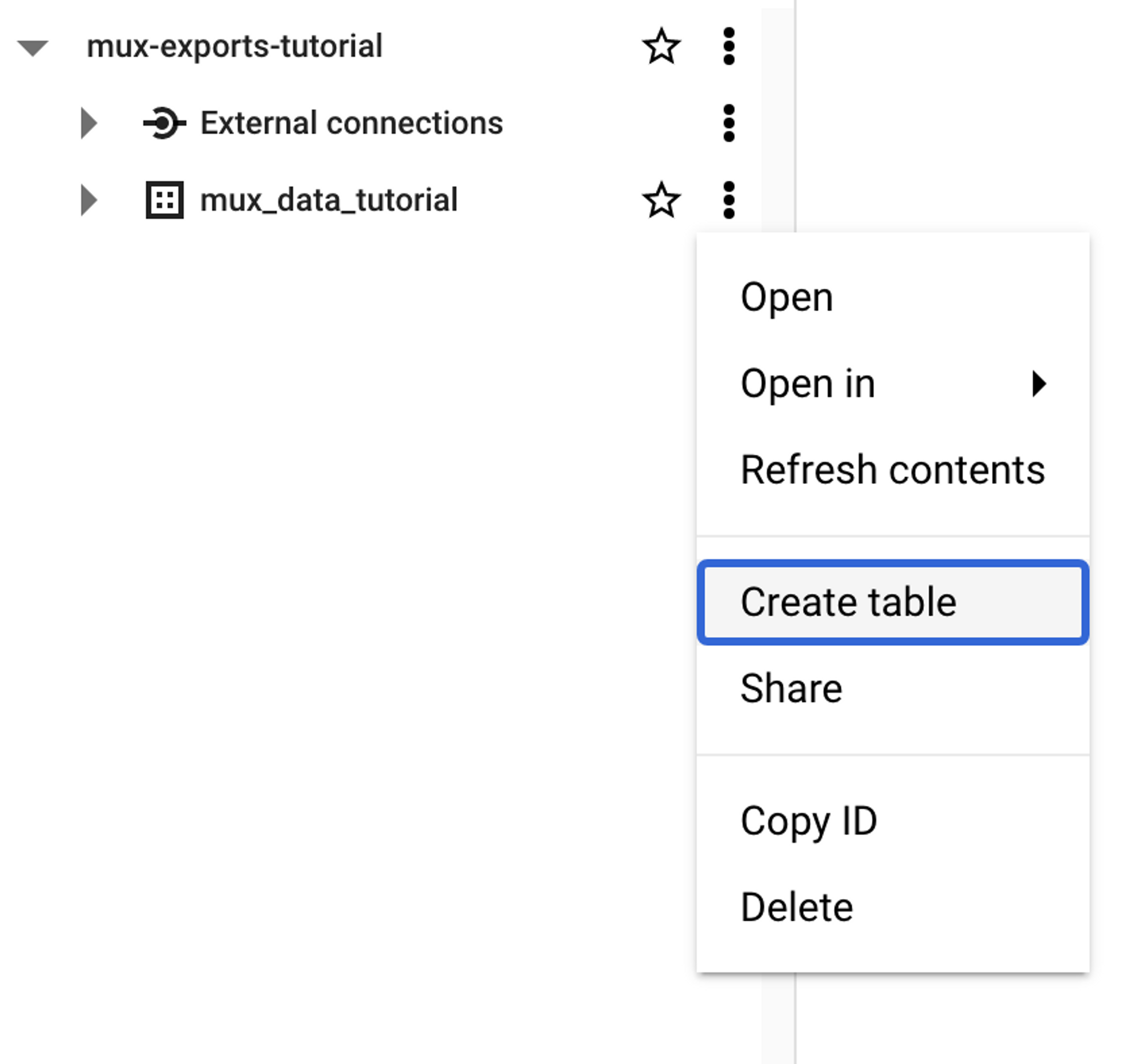

In the Explorer menu, select Create table on your data set.

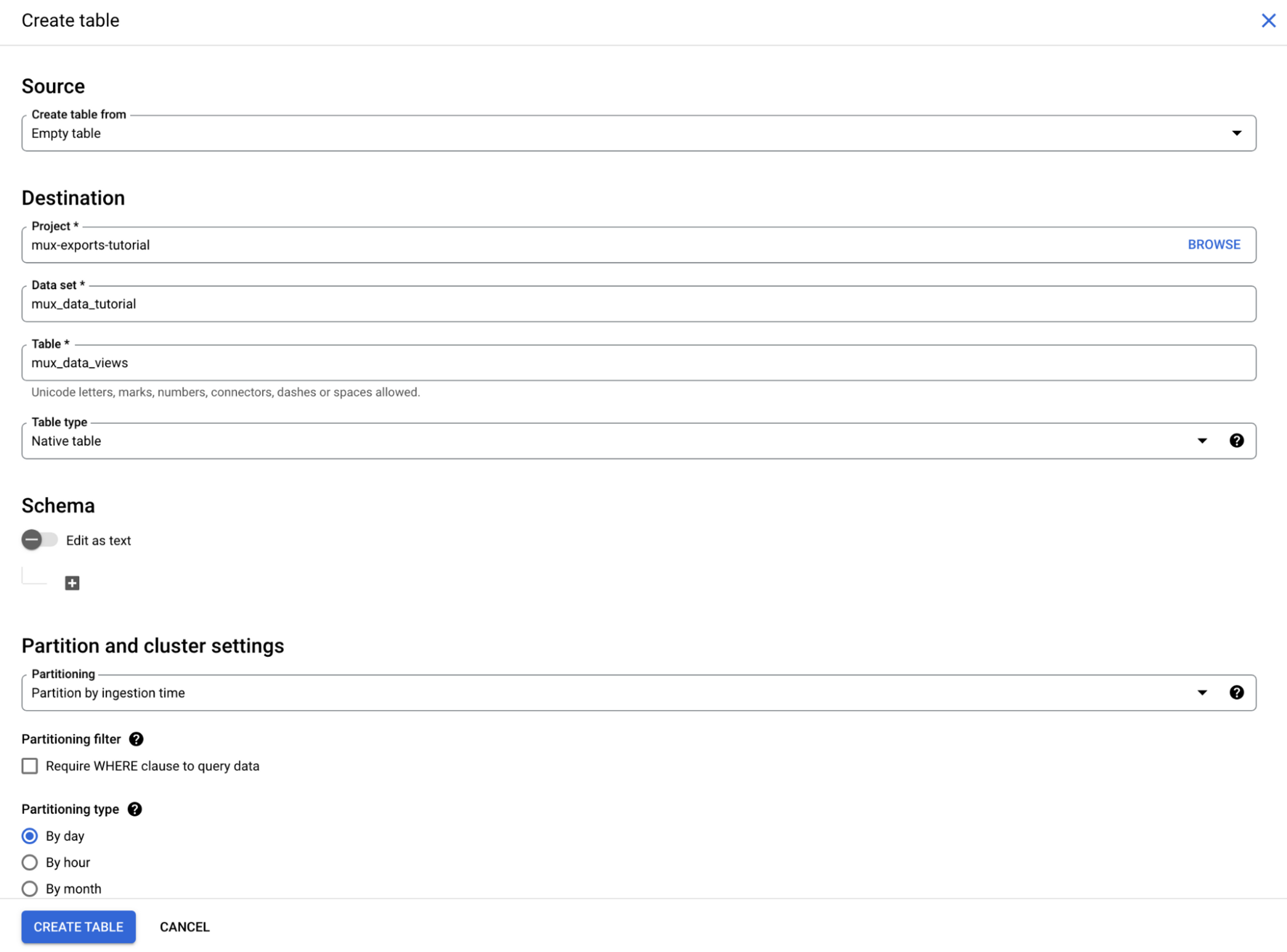

Create the table

- Set Create table from to Empty table.

- Name your table.

- Set Table type to Native table.

Partitioning your table is optional, but it is good practice in BigQuery, as specifying a partition will ensure that less data is being processed. You can force users to use it by checking the Require WHERE clause to query data box.

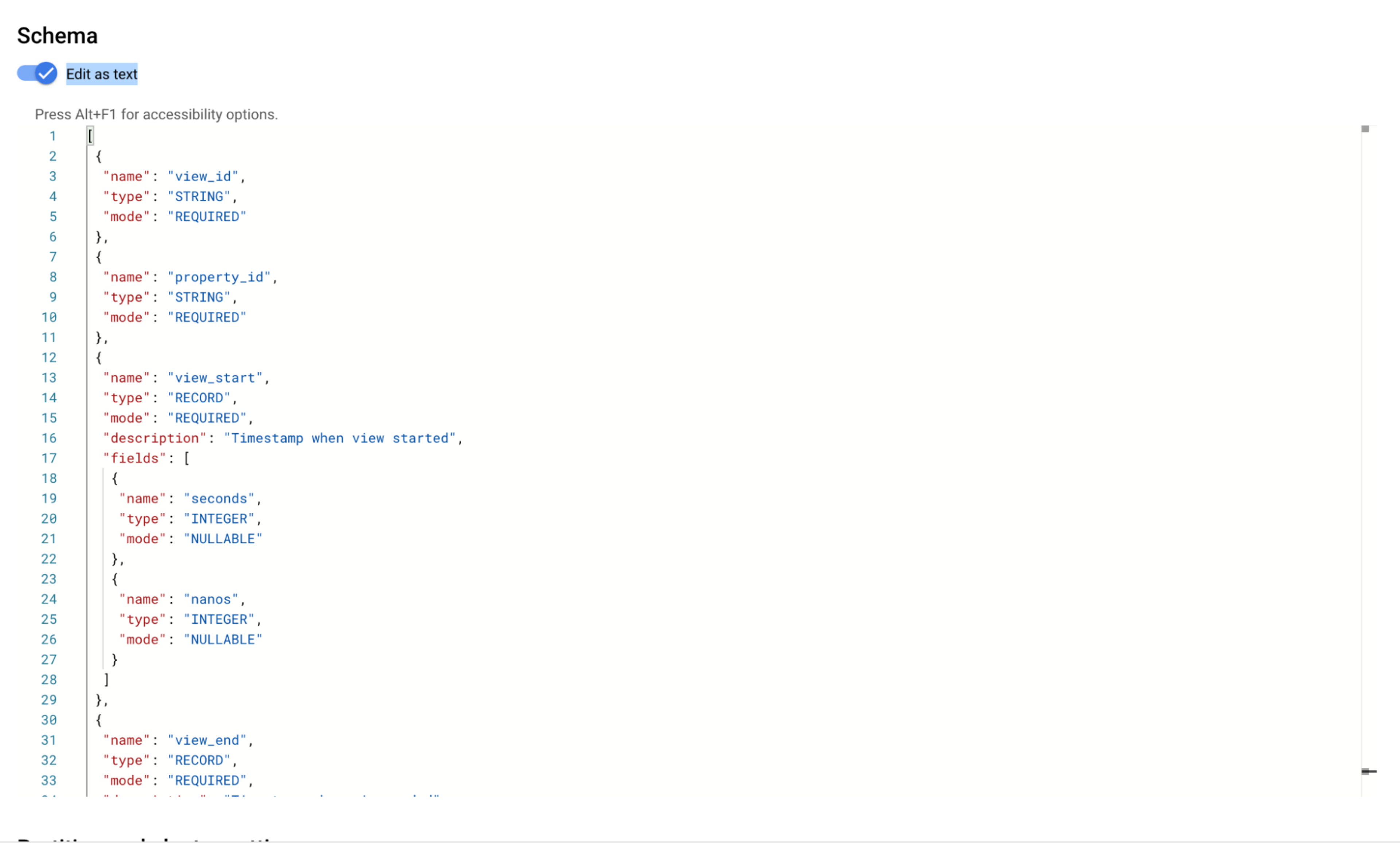

Define the table schema

- Toggle the Edit as text switch.

- Paste the JSON schema generated from video_views.proto.

Click CREATE TABLE.

Create a Pub/Sub to BigQuery subscription

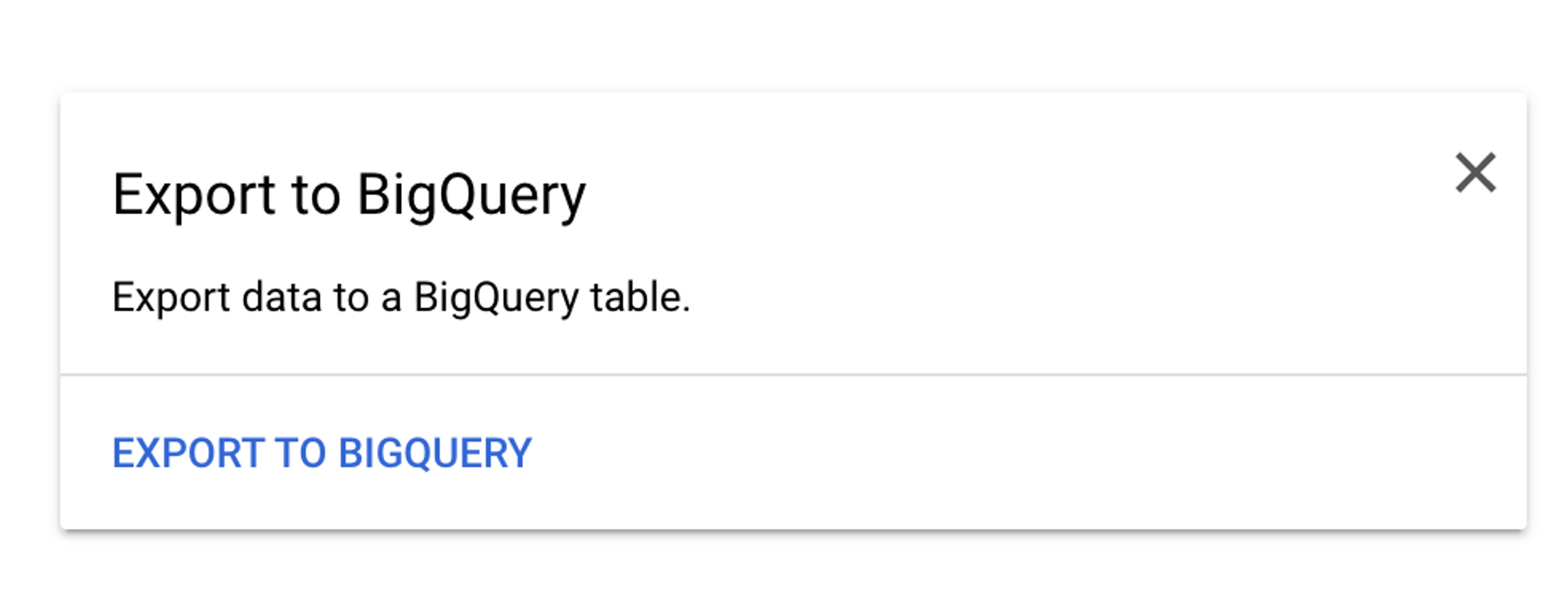

Navigate to your Pub/Sub topic and click EXPORT TO BIGQUERY.

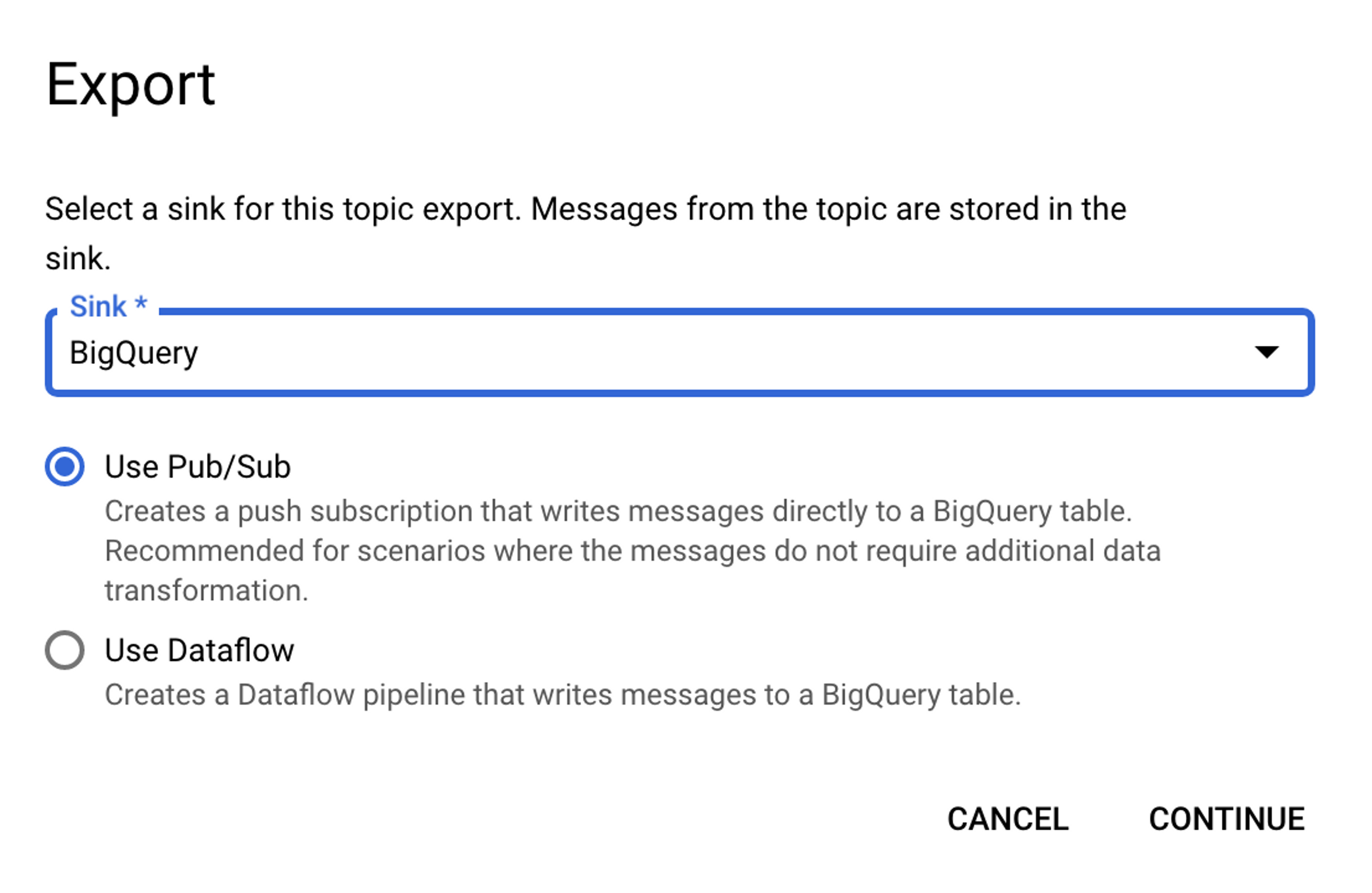

Select BigQuery and Use Pub/Sub and click CONTINUE.

Create a subscription

- Provide a subscription ID.

- Select your Pub/Sub topic.

- Select Write to BigQuery as the delivery type.

- Select your BigQuery data set.

- Enter the name of the table you created in BigQuery.

- Check the box Use topic schema.

- Check the box Drop unknown fields.

Click CREATE.

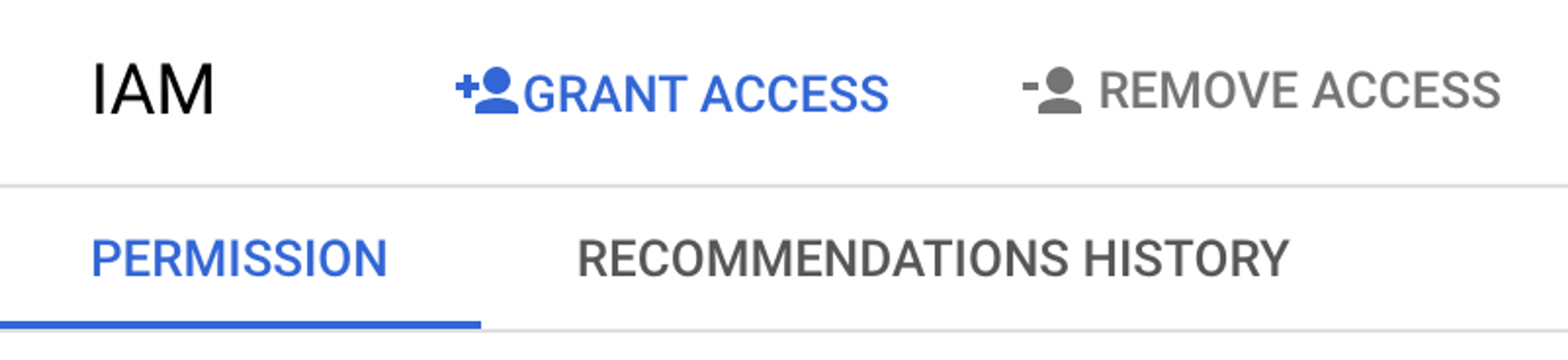

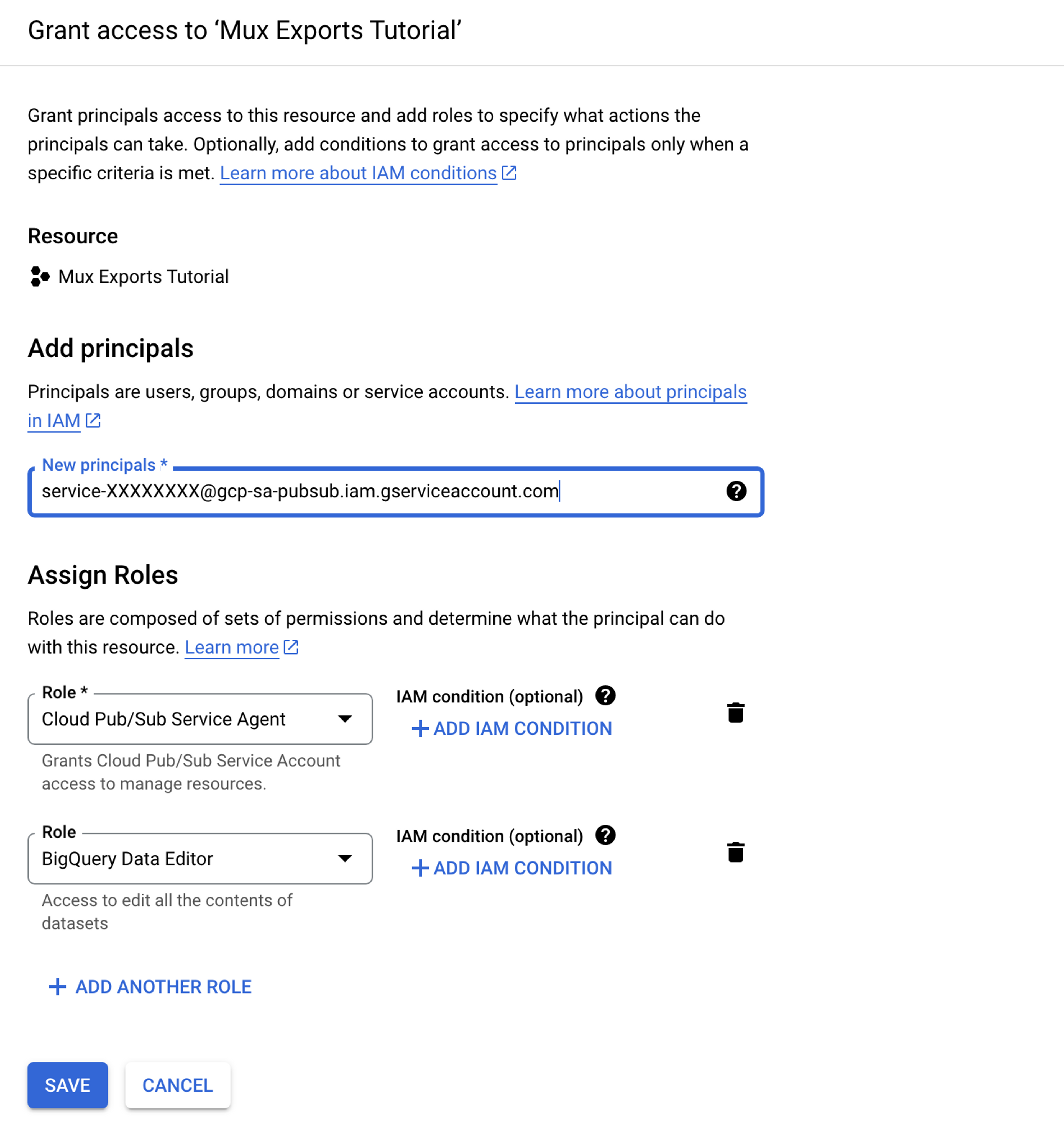

If you see an error message like the one below, then you need to grant the service account Cloud Pub/Sub Service Agent and BigQuery Data Editor roles.

Service account service-XXXXXXXX@gcp-sa-pubsub.iam.gserviceaccount.com is missing permissions required to write to the BigQuery table: bigquery.tables.get, bigquery.tables.updateData.

To grant access, start by making a copy of this service account ID.

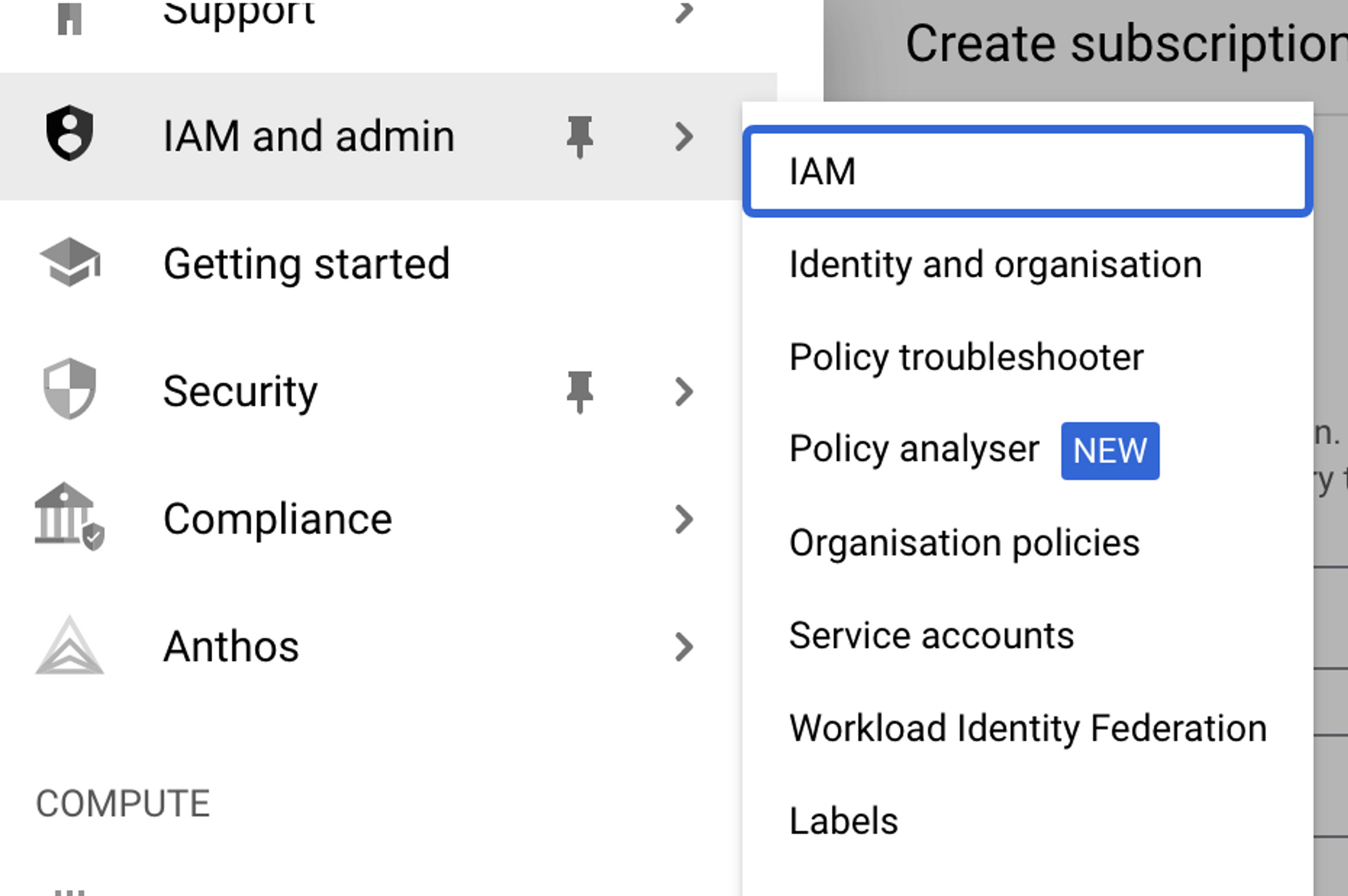

From the navigation menu, open IAM and admin and select IAM (it’s best to open this in a new tab).

Click GRANT ACCESS.

In Add principals, paste the service account ID.Under Assign Roles, select Cloud Pub/Sub Service Agent and BigQuery Data Editor.

Click SAVE.

Navigate back to your subscription and click CREATE.

Configure Mux to push exports to Pub/Sub

If you’ve made it this far, you’re now ready to configure your streaming exports on Mux.

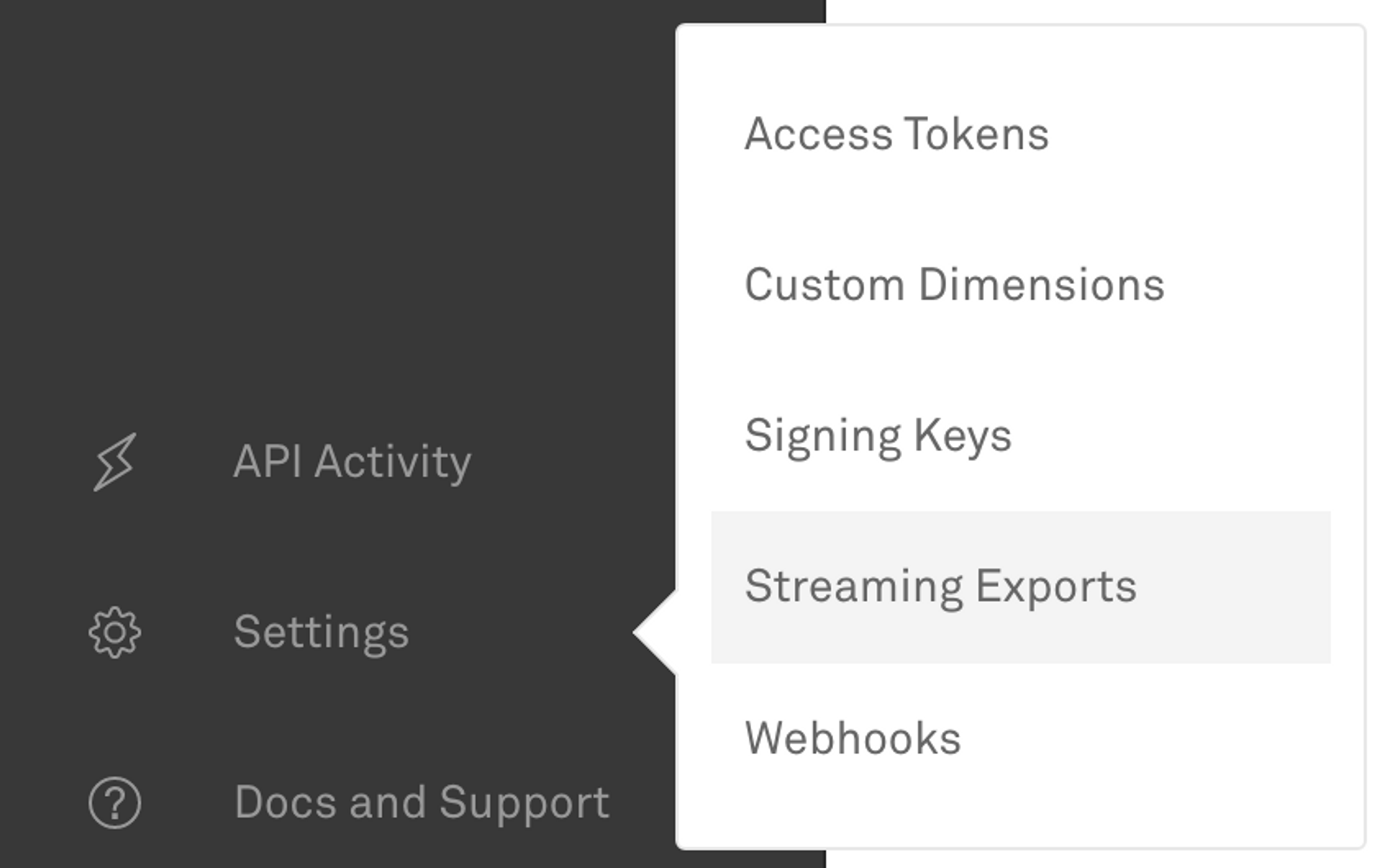

Open your Mux Dashboard at https://dashboard.mux.com/, navigate to the Streaming Exports page under Settings, and click New Streaming Export.

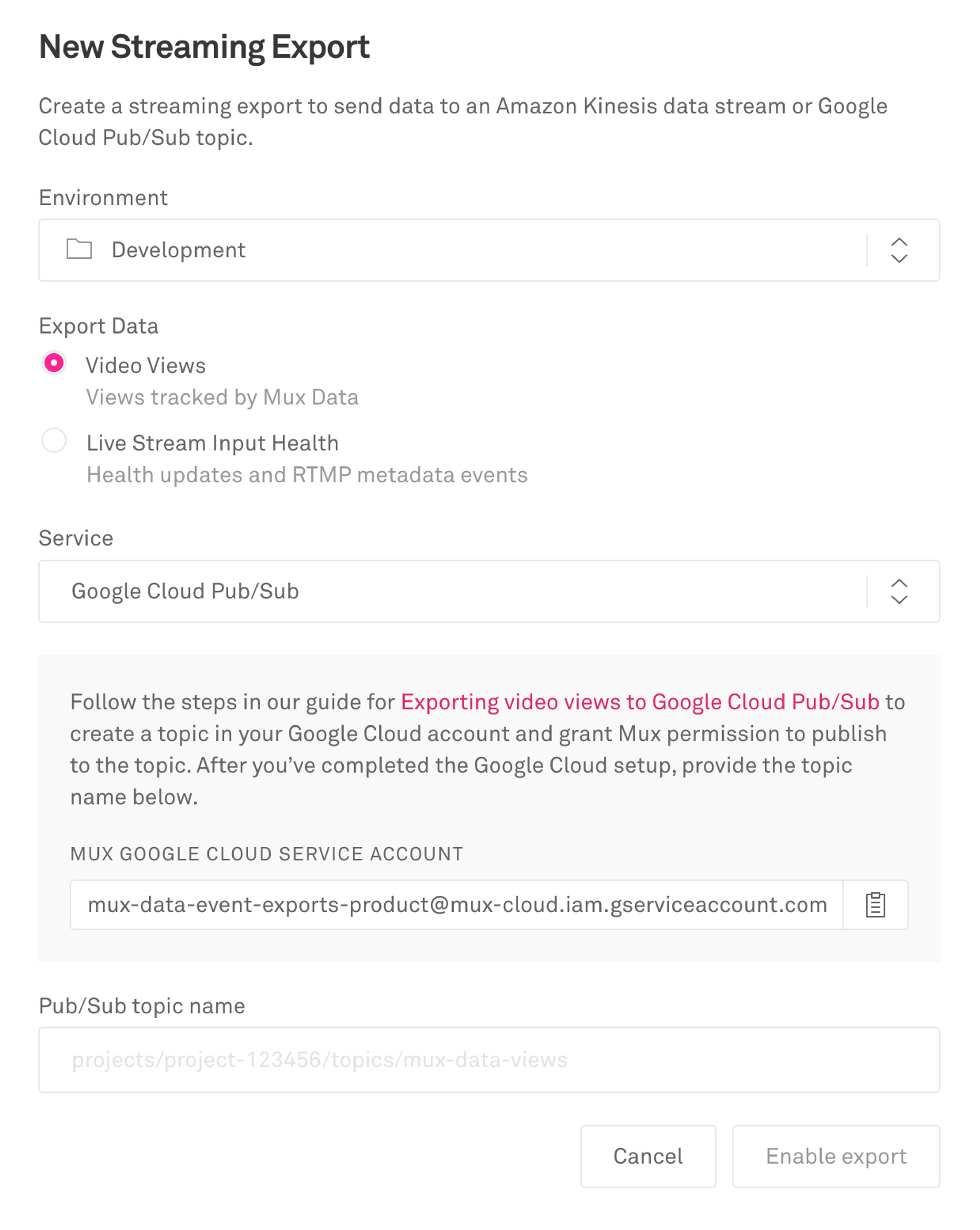

Select the Mux environment that you would like to export, select Video Views, and set the service to Google Cloud Pub/Sub.

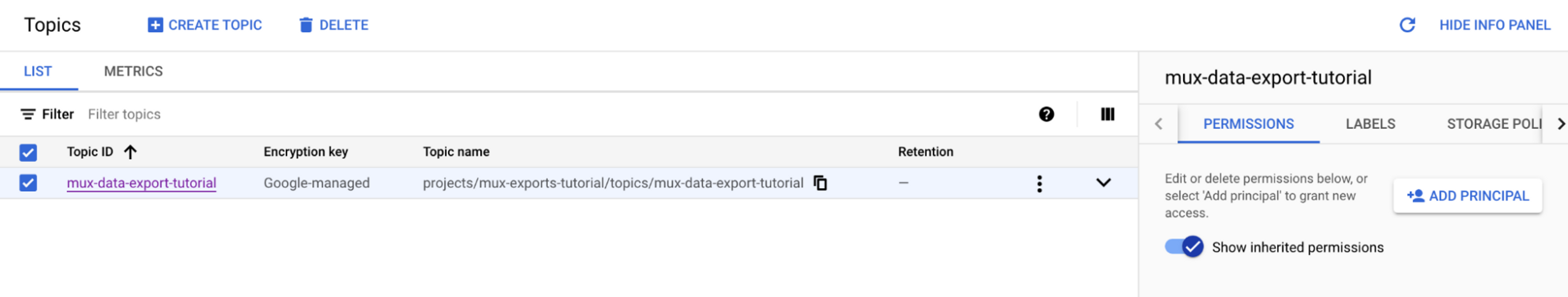

Take a copy of the Mux Google Cloud Service Account and navigate back to your Google Pub/Sub Topic on your Google Cloud Console: https://console.cloud.google.com/.

Select your Pub/Sub topic.

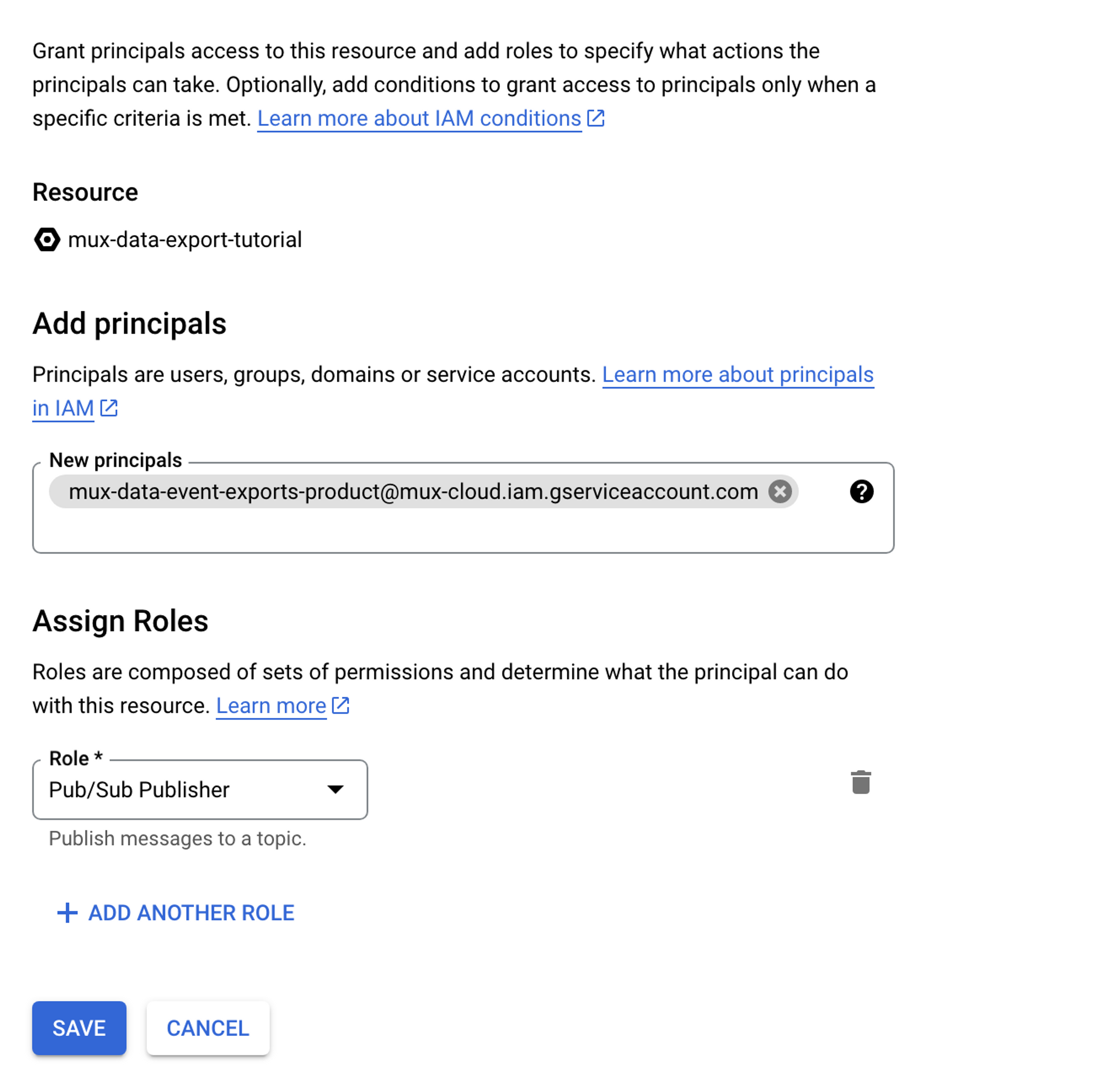

On the INFO PANEL on the right, navigate to PERMISSIONS and click ADD PRINCIPAL.

Paste the Mux Google Cloud Service Account ID into New principles and assign the role of Pub/Sub Publisher.

Click SAVE.

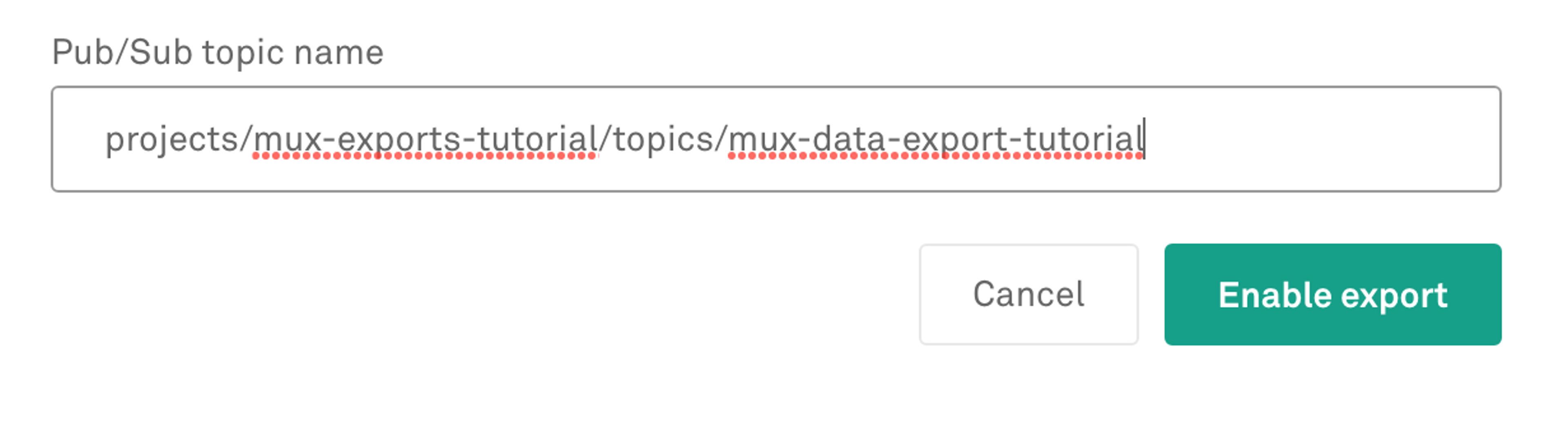

Take a copy of the Pub/Sub topic name.

Navigate to the Mux Dashboard and paste the Pub/Sub topic name.

Click Enable export.

Congratulations! You have successfully set up your Mux Video Views Exports data pipeline to push to BigQuery.

Connect to your BigQuery data source within Looker Studio

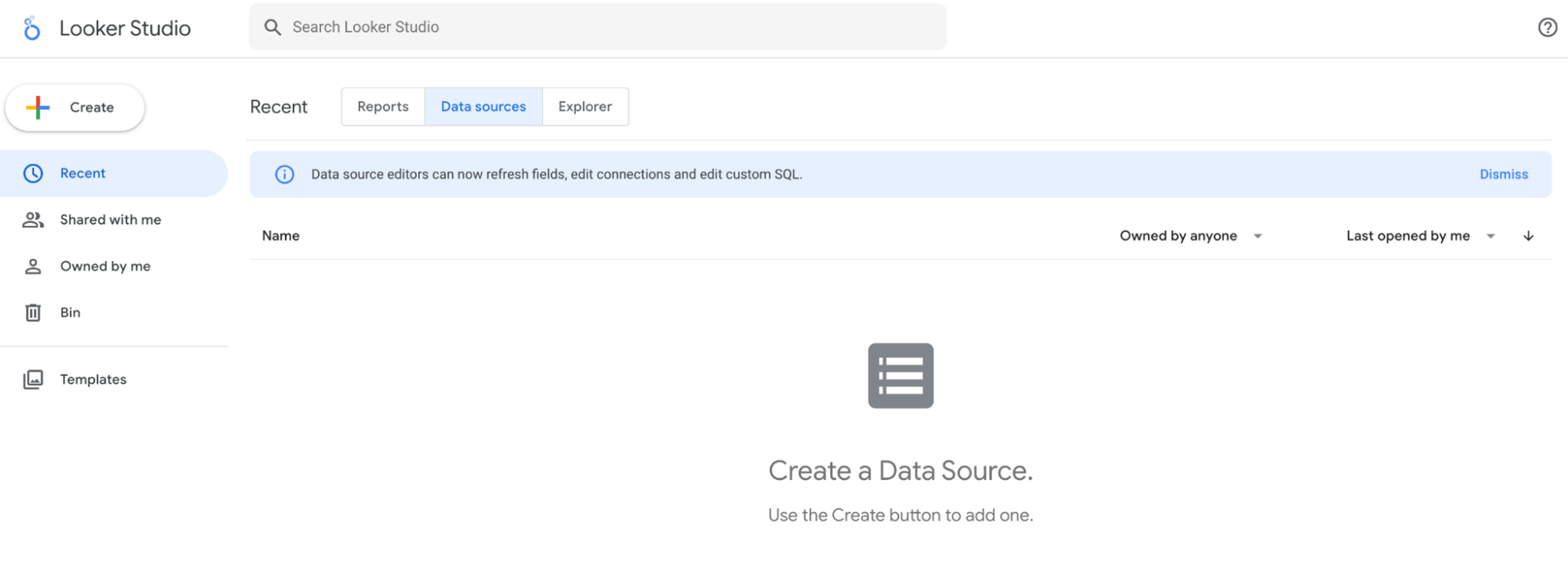

In a new browser tab, open up Looker Studio (https://lookerstudio.google.com/) and select Data Sources.

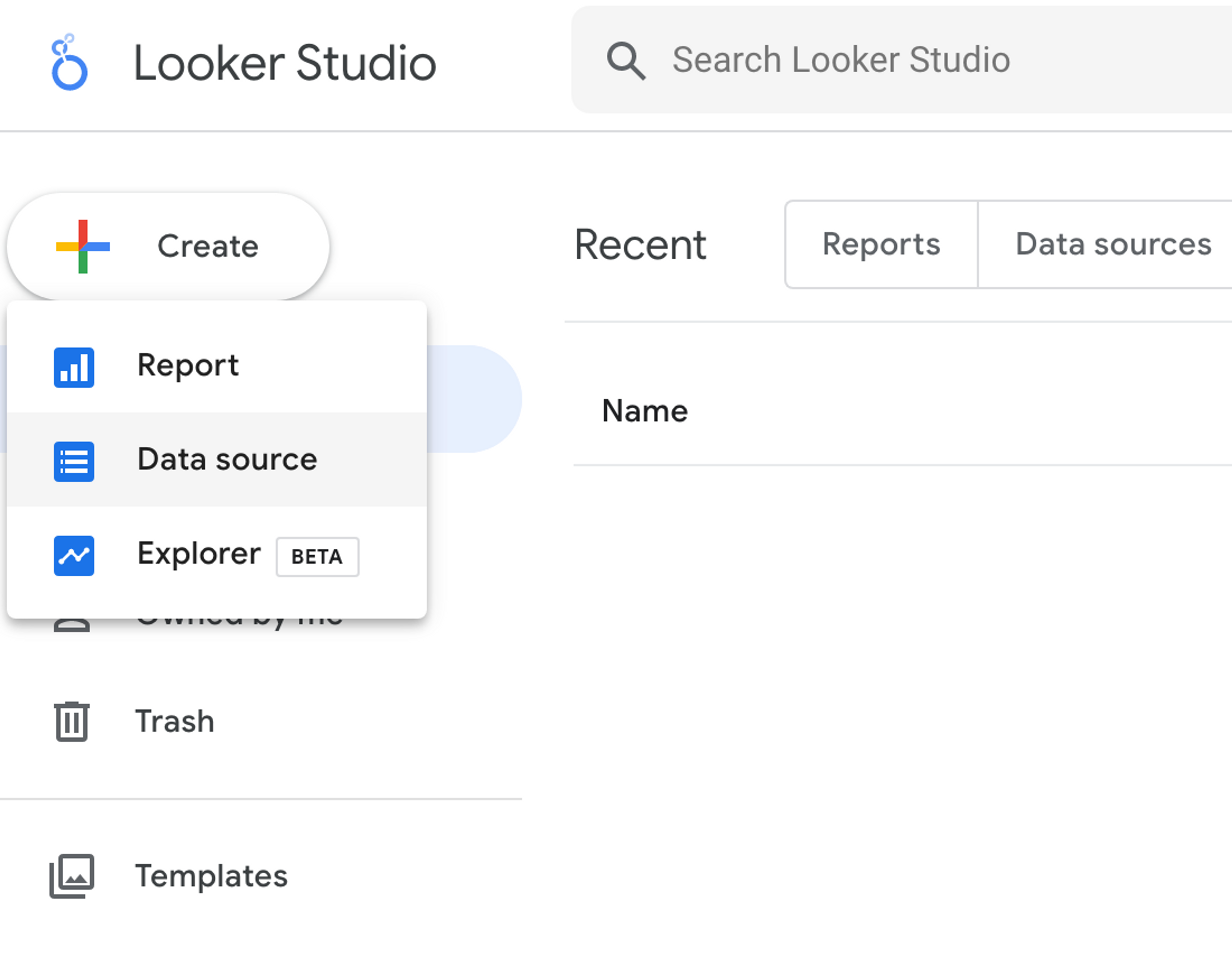

Click the Create button in the top left corner and select Data source.

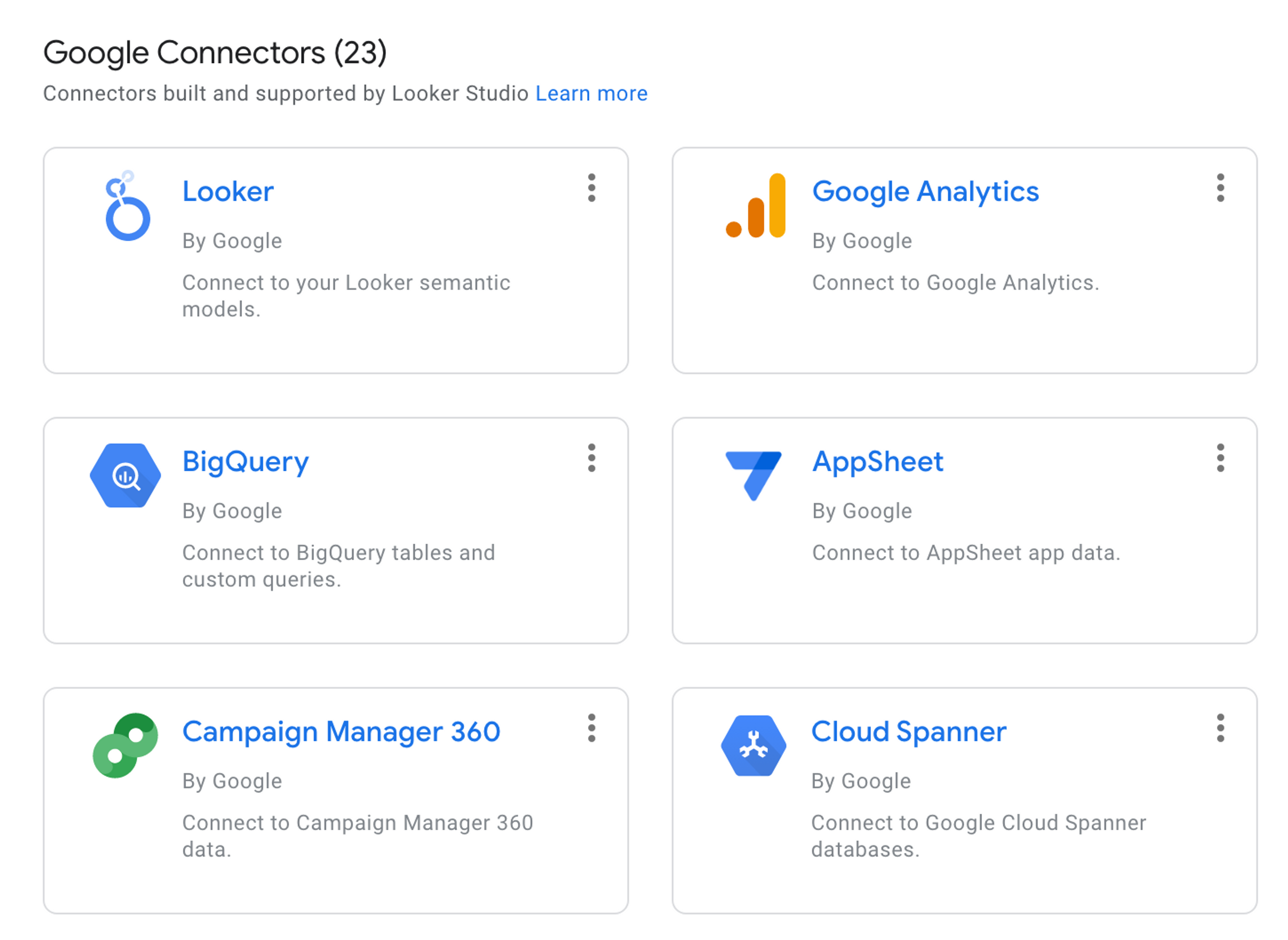

In the list of Google connectors, select BigQuery.

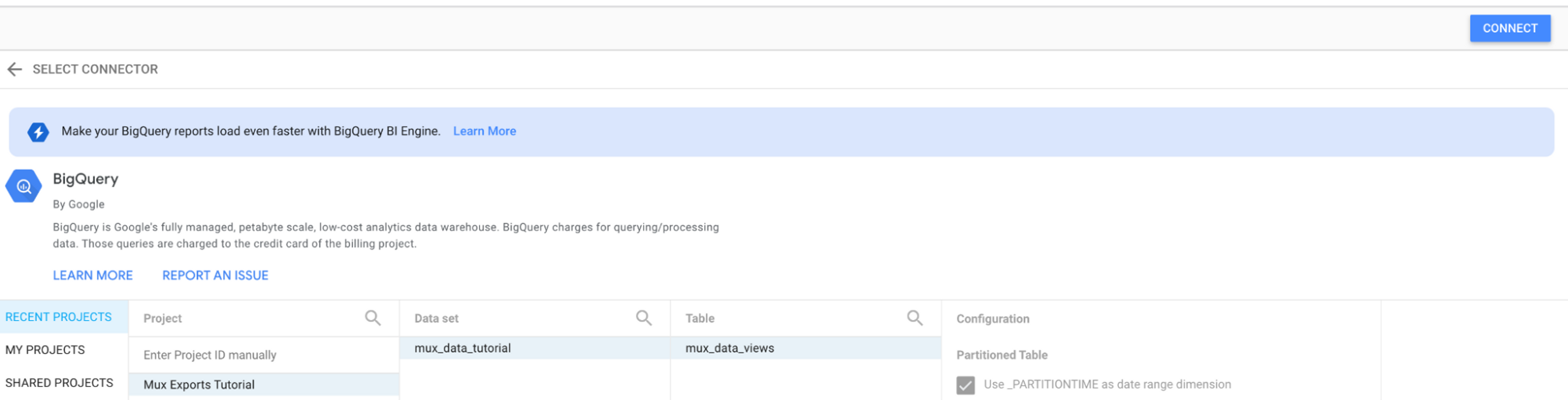

Then select the Google Cloud project, data set, and table you created in BigQuery earlier.

Click CONNECT.

You can now create a report in Looker Studio using your Mux data stored in BigQuery.

Wrapping up

So there you have it. Your Mux view data is now permanently stored in BigQuery and will be updated in real time as the views complete, and with Looker Studio, you can create powerful insights into your customers’ video Quality of Experience over the life of your product.