If you had asked me two years ago which sport a video startup needs to be most worried about, I would have said American football or basketball. My US-centric mind would never have considered that soccer would be the darling sport of stream pirates. It wasn’t until I joined Mux that I found out how much people love soccer…and how much they love to watch soccer for free. Streaming video on Mux is easy — which is a good thing! Unfortunately, that means we are a popular target for soccer pirates.

Enter the abuse detection system: our homegrown solution to identify and take down soccer pirates who try to stream copyrighted content via Mux's infrastructure without permission from the rights holder.

Log storage and selection

Our journey starts at the edge. We deliver all our videos through two CDNs (Fastly and Cloudflare). For every request they serve, the CDNs send us a log record. Our CDN logs pipeline enriches each record with additional data and then inserts it into a ClickHouse cluster. Originally, we used that cluster only for debugging. With minimal changes, we were able to use the same data and the same ClickHouse cluster for abuse detection as well.

The logs include a lot of useful information, but the abuse detection system only cares about three things: which asset the log corresponds to, when the asset was viewed, and how the system can access the asset.

Next, a small Go program queries the CDN log data in ClickHouse every 10 minutes. These queries produce a list of assets and environments that had high viewership in the last 20 minutes. The program then runs follow-up queries to find any custom domains associated with each environment and to check whether each asset is public or signed. This information determines how the system accesses the video later on.

For each video in the list, we then look up the customer. The Go program uses customer data to assign each video a risk score. Some of the obvious risk factors include:

- How old is the customer account (older is better)?

- Do they pay their bills on time?

- Is the email address suspicious looking?

These factors, plus several others, go into assigning a risk score before n8n takes over for filtering and notification.

Abuse detection and alerting

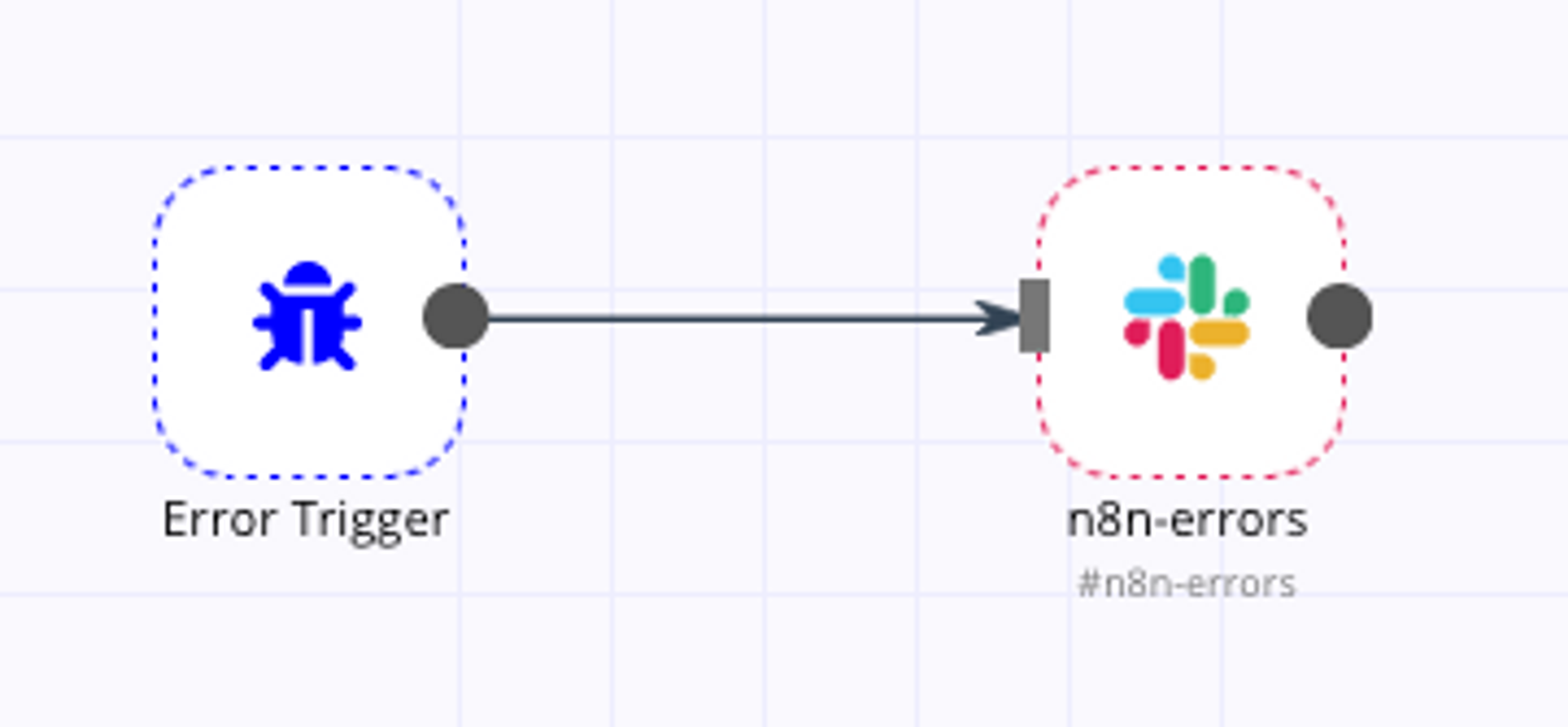

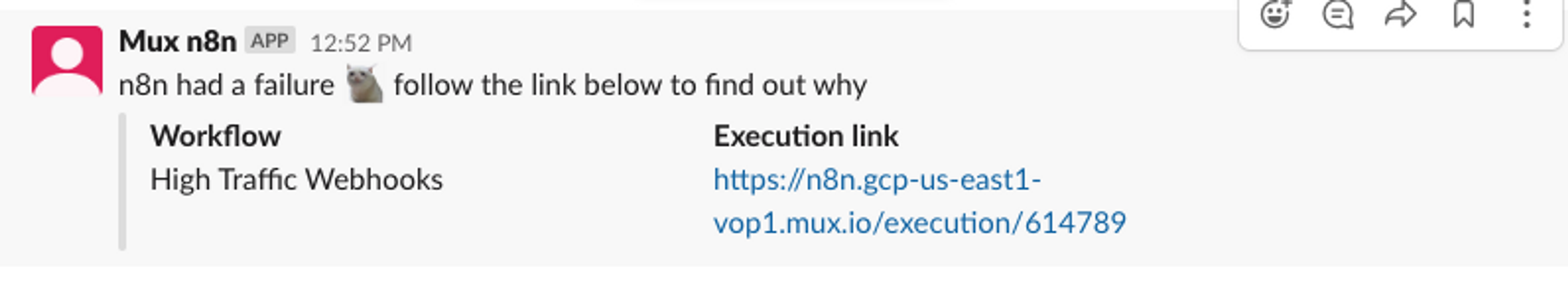

N8n is a node-based workflow automation tool. Workflows are made up of one or more building blocks, or nodes, each of which performs a specific function. N8n has a catalog of prebuilt nodes and also supports creating your own custom nodes. We picked n8n because it let us develop quickly and is easy to debug. We could have built this part of the system in Go or another language, but n8n's prebuilt nodes let us assemble our workflows far faster than writing the same logic from scratch. N8n also lets us visualize our workflows in a way a program built from scratch would not. We even have a workflow that is triggered on errors and posts a link to the failing node in Slack.

We have two major workflows in n8n.

First is our soccer detection workflow. For each video sent to this workflow, we generate four thumbnails taken from somewhat random points in the video. The thumbnails are then sent to Google Vision, which produces a list of labels describing them. Those labels are then compared to the following list of words:

- "sports uniform"
- "soccer"
- "football"
- "jersey"
- "player"
- "ball"
- "field house"
- "ball game"

If a word in our list matches a label in the list Google Vision sends us, then we have a potential soccer stream that needs further review. The word list we use is intentionally broad, which can lead the system to flag assets that are clearly not soccer as soccer. But we would rather generate false positives than potentially miss a real soccer pirate.

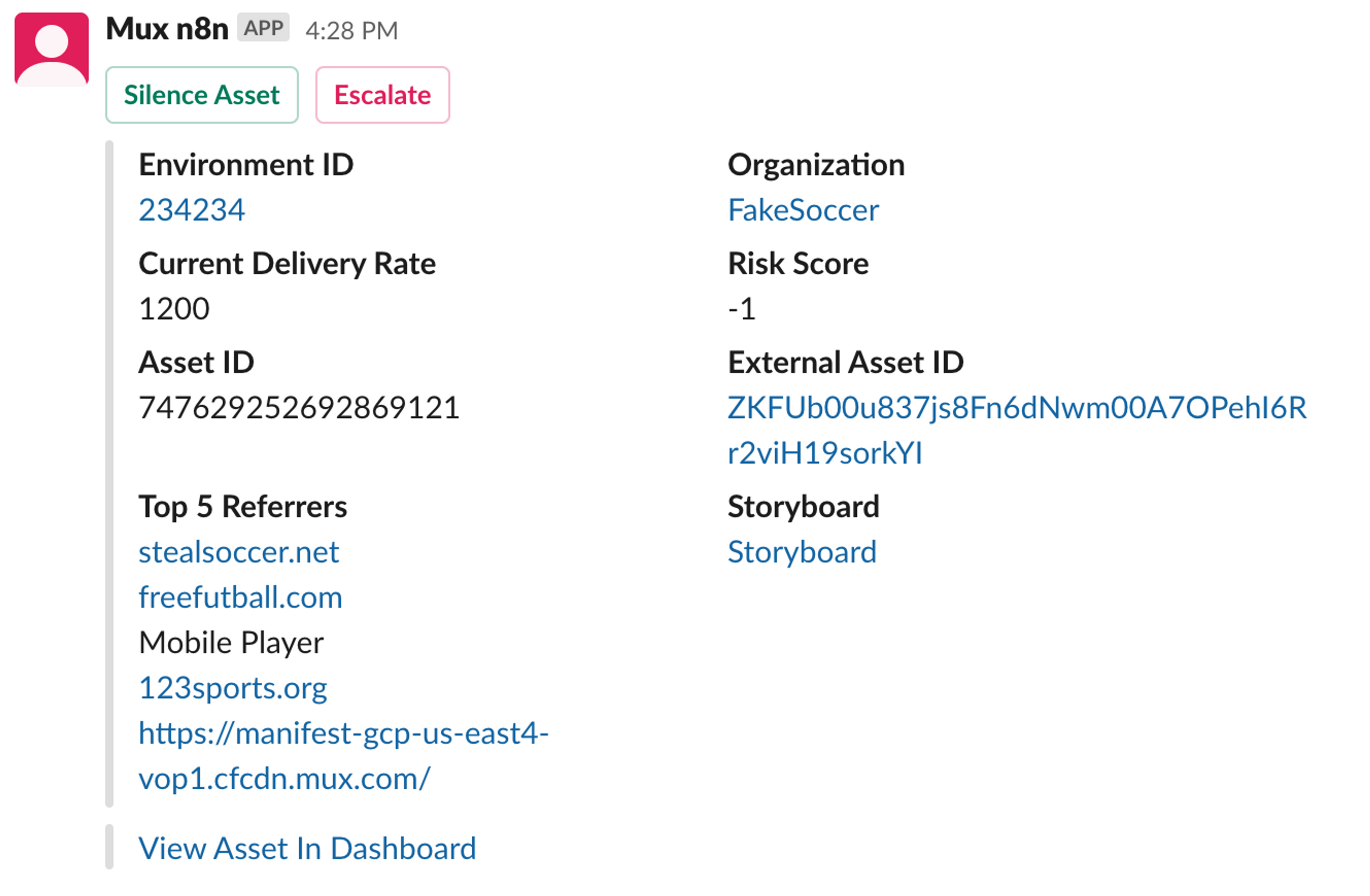
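The label comparison described above can be sketched as follows, assuming the labels arrive as the plain-text descriptions from Google Vision's label detection (for example "Sports uniform" or "Ball game") and that a match means a case-insensitive exact match. `MatchSoccerLabels` is a hypothetical name, and the real n8n workflow may compare labels differently; only the word list comes from the post.

```go
package main

import (
	"fmt"
	"strings"
)

// soccerWords is the word list from the article; it is deliberately broad.
var soccerWords = []string{
	"sports uniform", "soccer", "football", "jersey",
	"player", "ball", "field house", "ball game",
}

// MatchSoccerLabels returns the words from soccerWords that appear among the
// labels for any of the thumbnails. Matching is a case-insensitive exact
// comparison (an assumption), so "Ball game" matches "ball game" but not "ball".
func MatchSoccerLabels(thumbnailLabels [][]string) []string {
	seen := make(map[string]bool)
	for _, labels := range thumbnailLabels {
		for _, l := range labels {
			seen[strings.ToLower(strings.TrimSpace(l))] = true
		}
	}
	var hits []string
	for _, w := range soccerWords {
		if seen[w] {
			hits = append(hits, w)
		}
	}
	return hits
}

func main() {
	// One label slice per thumbnail (four thumbnails per video).
	labels := [][]string{
		{"Sports uniform", "Grass", "Player"},
		{"Sky", "Cloud"},
		{"Ball game", "Stadium"},
		{"Crowd"},
	}
	if hits := MatchSoccerLabels(labels); len(hits) > 0 {
		fmt.Println("possible soccer stream, matched:", hits)
	}
}
```

Returning the matched words rather than a bare boolean could give reviewers a hint about why an asset was flagged, which helps when sorting out the false positives a broad list produces.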

Once n8n has identified a stream that may be showing a soccer game, it creates a Slack message and an alert in Opsgenie.

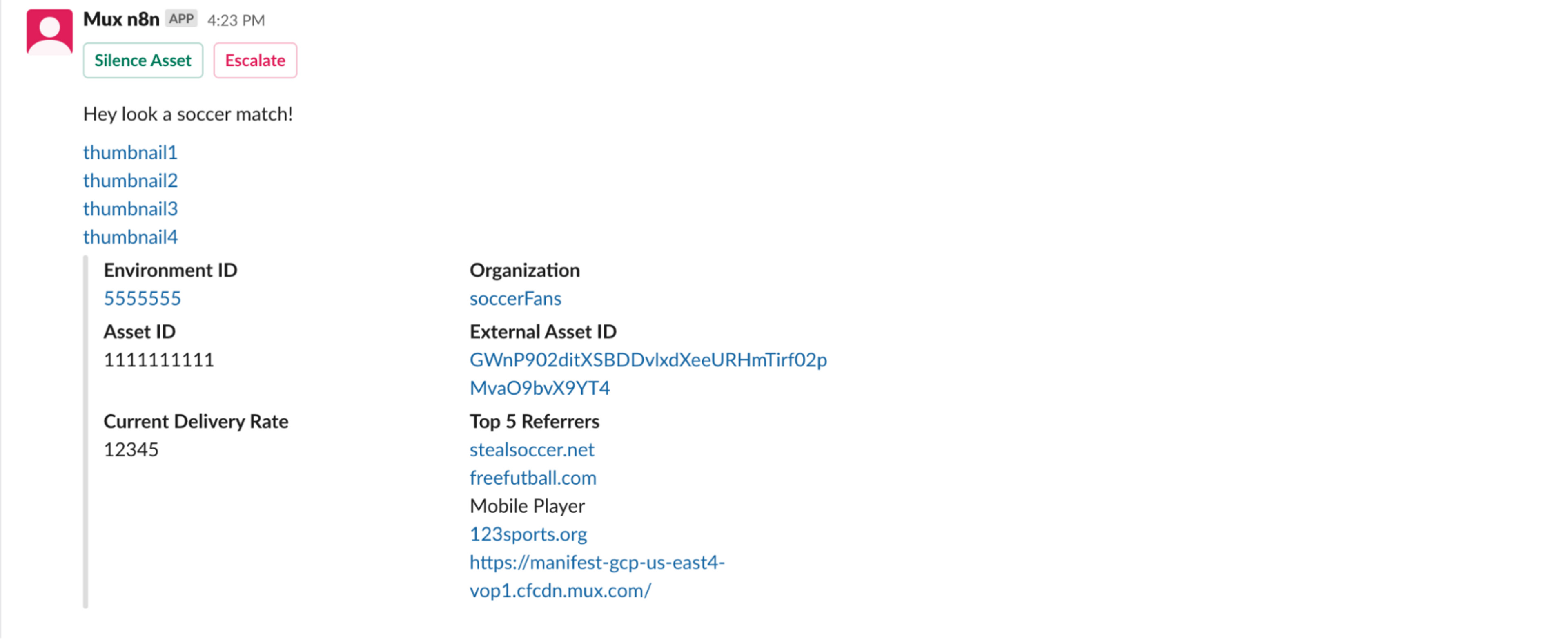

Our second major n8n workflow is our high-traffic workflow. It is not specific to soccer content; instead, it is designed to identify videos with higher-than-average viewership and show them to us. The workflow checks the asset's risk score, viewership count, and viewership behavior. If each meets a certain threshold, the workflow creates a Slack message. If the asset is VoD, the Slack message includes a storyboard link; if the asset is a live stream, the message instead includes four thumbnails.
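The gating logic might look something like this. "Viewership behavior" and the thresholds aren't defined in the post, so the growth ratio between consecutive windows and every number here are hypothetical. The storyboard URL follows Mux's public image URL pattern (`https://image.mux.com/{PLAYBACK_ID}/storyboard.png`); signed or custom-domain assets would need a different URL, which this sketch leaves out.

```go
package main

import "fmt"

// Asset carries the hypothetical inputs the high-traffic check needs.
type Asset struct {
	PlaybackID      string
	IsLive          bool
	RiskScore       int // 0 (low) to 100 (high)
	Views           int // views in the current window
	ViewsPrevWindow int // views in the previous window (hypothetical behavior signal)
}

// Thresholds are placeholders, not Mux's real values.
type Thresholds struct {
	MinRisk   int
	MinViews  int
	MinGrowth float64 // required ratio of current to previous views
}

// ShouldAlert requires every signal to clear its threshold.
func ShouldAlert(a Asset, t Thresholds) bool {
	if a.RiskScore < t.MinRisk || a.Views < t.MinViews {
		return false
	}
	if a.ViewsPrevWindow == 0 {
		return true // traffic appeared from nothing; treated as a spike (a design choice)
	}
	return float64(a.Views)/float64(a.ViewsPrevWindow) >= t.MinGrowth
}

// Attachment describes what the Slack message should include.
type Attachment struct {
	StoryboardURL  string // on-demand assets
	ThumbnailCount int    // live streams
}

// AttachmentFor picks a storyboard for VoD and four thumbnails for live.
func AttachmentFor(a Asset) Attachment {
	if a.IsLive {
		return Attachment{ThumbnailCount: 4}
	}
	return Attachment{StoryboardURL: "https://image.mux.com/" + a.PlaybackID + "/storyboard.png"}
}

func main() {
	th := Thresholds{MinRisk: 50, MinViews: 1000, MinGrowth: 2}
	a := Asset{PlaybackID: "abc123", RiskScore: 70, Views: 5000, ViewsPrevWindow: 1200}
	if ShouldAlert(a, th) {
		fmt.Printf("alert: %+v\n", AttachmentFor(a))
	}
}
```

Requiring all three signals to pass keeps well-established, high-traffic customers from flooding the channel, at the cost of possibly missing a risky asset whose traffic grows slowly.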

Taking action

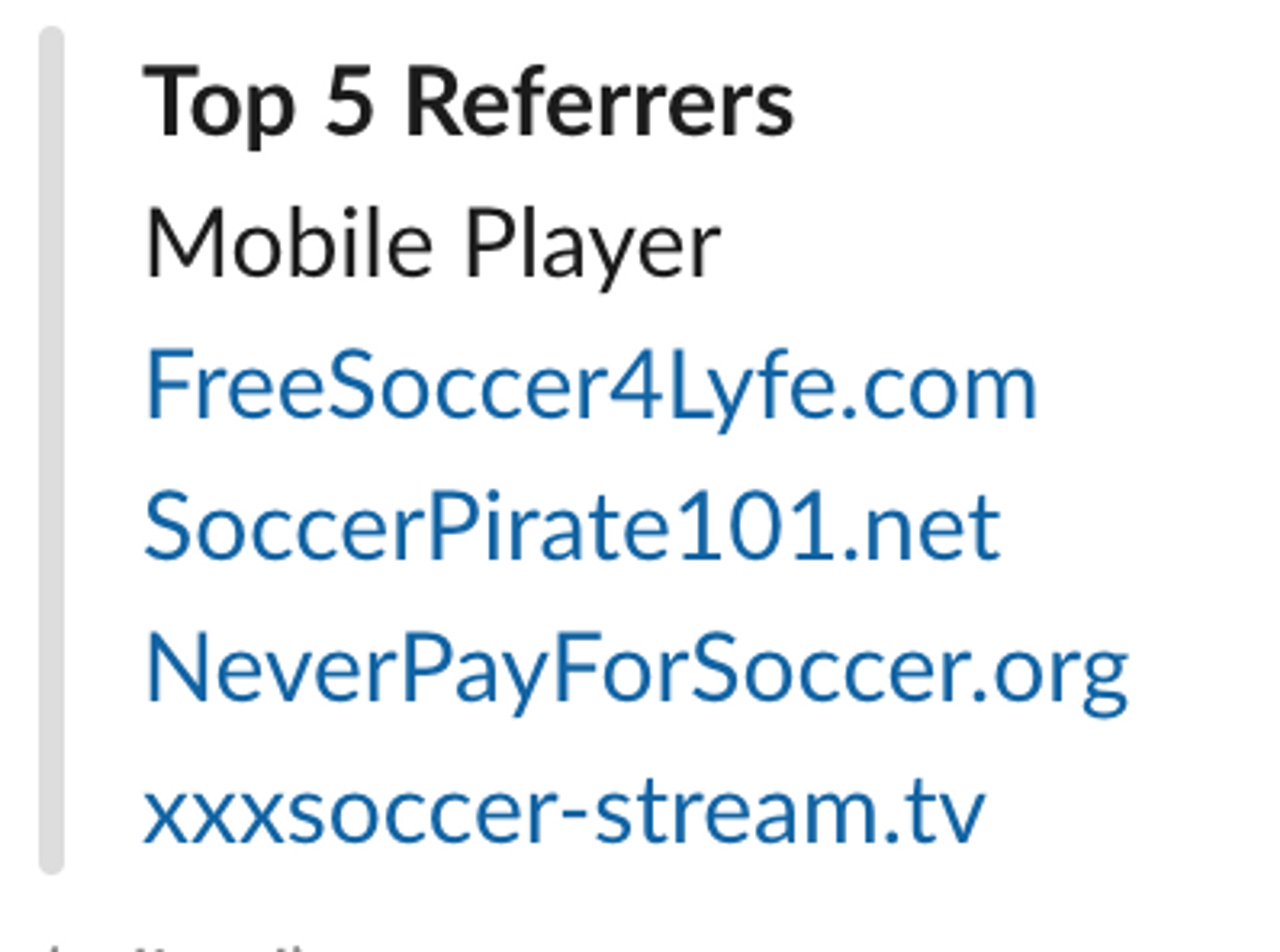

Once either the high-traffic or the soccer detection workflow generates an alert, a Slack message is created. These Slack messages are sent to a channel monitored by a team of contractors. Each message contains all the information we can provide to help determine whether the video appears to violate our Terms of Service. The most useful pieces are the storyboard and the top referrers. The storyboard lets us see the content of the video without watching it, and the referrers can be powerful clues to help us determine whether a stream is legit. If the top referrers look like this

then there is a good chance it's a soccer pirate.
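How the top referrers are computed isn't described. One plausible approach, assuming the CDN logs carry each request's `Referer` header, is to reduce every referrer to its hostname and count them. `TopReferrers` and the `(none)` bucket for requests without a referrer are hypothetical.

```go
package main

import (
	"fmt"
	"net/url"
	"sort"
	"strings"
)

// ReferrerCount is one row of a top-referrers table.
type ReferrerCount struct {
	Host  string
	Count int
}

// TopReferrers reduces Referer values to hostnames (dropping a leading
// "www.") and returns the n most common, ties broken alphabetically.
func TopReferrers(referers []string, n int) []ReferrerCount {
	counts := make(map[string]int)
	for _, r := range referers {
		host := "(none)" // missing or unparseable referrer
		if u, err := url.Parse(r); err == nil && u.Hostname() != "" {
			host = strings.TrimPrefix(strings.ToLower(u.Hostname()), "www.")
		}
		counts[host]++
	}
	out := make([]ReferrerCount, 0, len(counts))
	for h, c := range counts {
		out = append(out, ReferrerCount{h, c})
	}
	sort.Slice(out, func(i, j int) bool {
		if out[i].Count != out[j].Count {
			return out[i].Count > out[j].Count
		}
		return out[i].Host < out[j].Host
	})
	if len(out) > n {
		out = out[:n]
	}
	return out
}

func main() {
	refs := []string{"https://www.example.com/live", "https://example.com/watch", "", "https://other.test/x"}
	for _, rc := range TopReferrers(refs, 5) {
		fmt.Printf("%-15s %d\n", rc.Host, rc.Count)
	}
}
```

Grouping by hostname rather than full URL keeps a pirate site's many match pages from being split across separate rows.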

The contractor can escalate or silence the alert using the buttons on the Slack message. If it is a false positive, they press “Silence,” which triggers another n8n workflow that adds the asset to an allowlist so it won’t alert again. The workflow also closes the Opsgenie alert. If the contractor believes the video may violate our TOS, or needs help making a determination, they press “Escalate.” If the contractor presses “Escalate” or takes no action within five minutes, an alert is sent to a full-time Mux employee.

From there, the Mux employee has a couple of options. First, they look at the Slack alert and evaluate the information for themselves. Much of the time, the employee has enough context about the customer to make a determination from the alert alone. The employee can also reach out to a trusted customer to confirm that they have the rights to show the video. If the customer does have the rights to stream the video, we can add it to the allowlist so it does not alert again. If the customer can't confirm that they have rights to the content, we work with them to stop the stream. Finally, if the customer is an uncooperative repeat offender, we have policies for disabling their account.

By leveraging our abuse detection system, we have been able to cut down on the number of takedown requests we receive. Before we built the system, it was not uncommon for us to receive quite a few takedown requests in a month. Now we're surprised when we receive a single one.

On top of that, the system has saved us quite a bit of money. This may come as a shock, but soccer pirates tend not to pay their bills. Because these streams usually draw large audiences, we incur significant costs and have no one to bill. In 2021 alone, Mux had over $750,000 in unpaid invoices due to suspected pirated streams. For an infrastructure company like Mux, this comes with hard costs: transcoding, storing, and delivering video is not cheap. Left unchecked, pirated streams could quickly spiral out of control and significantly hurt our business. By doing our best to identify and shut down these streams, we are able to reduce our costs.

The abuse detection system also provides less tangible benefits, such as protecting our reputation. Mux doesn’t want to be known as a safe platform for soccer pirates, and our customers don't want to be associated with soccer piracy either. By investing in this system, we show customers that we take content moderation seriously.