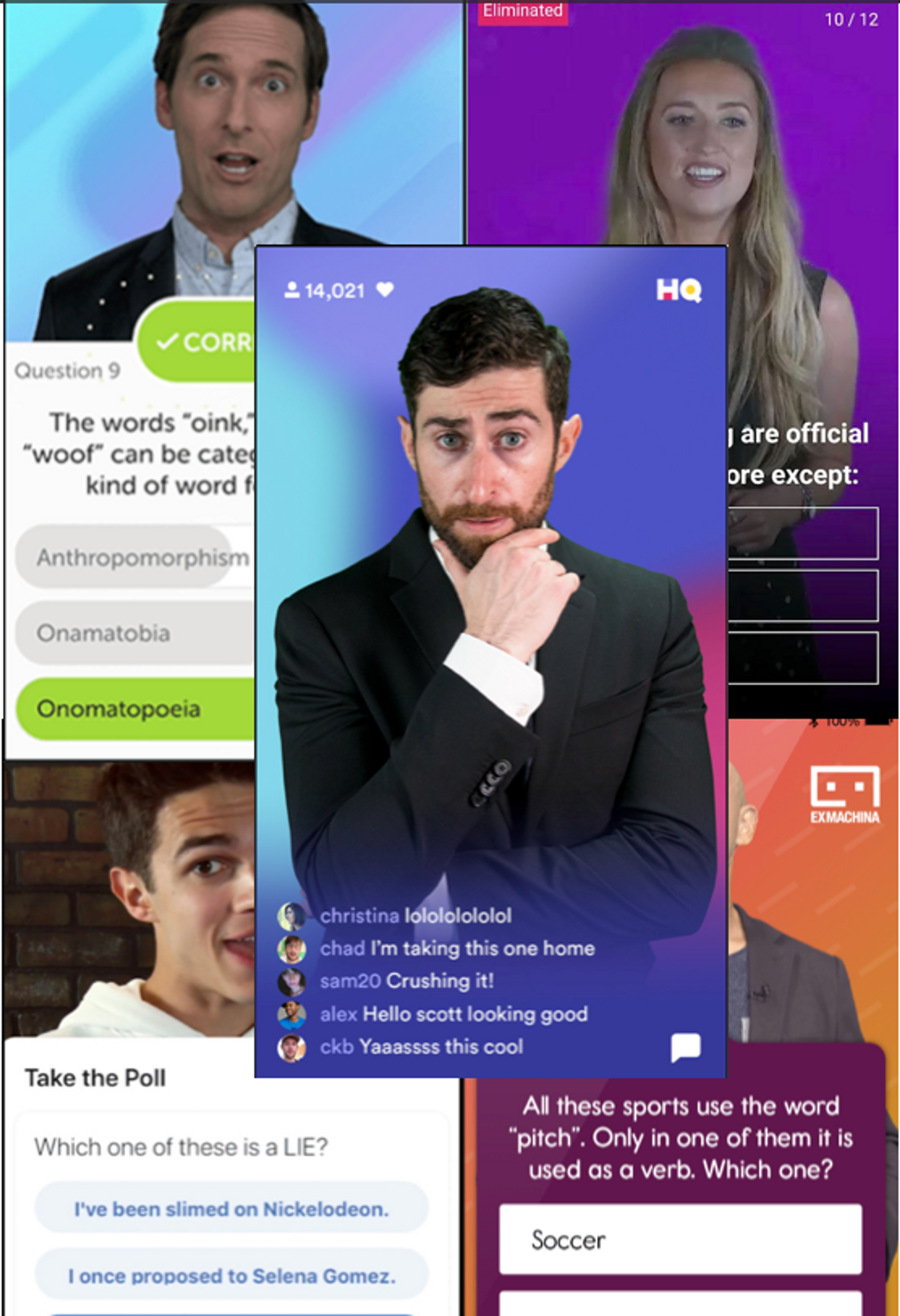

Do you remember playing HQ Trivia? It’s been about two years since the HQ Trivia phenomenon won all of our hearts and minds. At Mux, we were obsessed. At noon, the whole team would stop and play together. We even used machine learning to try to beat the game in real time (tl;dr: it kinda worked, but it was still really difficult to win). Of course, being video nerds, we analyzed the network traffic and tried to figure out how the technology worked, how much it cost to run, and how something like it could be built.

Since it’s been about two years, the world might be ready for a fresh live, interactive video product. If you’re working on this, we’d love to talk to you!

Steve gave this talk at O'Reilly Velocity in June 2019.

Quick background

HQ Trivia is an app for live trivia that anyone in the world can play. At scheduled times each day, a live host asks a series of 12 multiple-choice questions. If you get a question right, you move on to the next one. If you get it wrong, you’re out. At the end, everyone who made it through splits a prize pot ranging from $2,000 to $400,000, depending on the game. At its peak, more than 1 million people would play a single game simultaneously, resulting in only a dozen or so winners. When HQ blew up in popularity, a lot of clones started popping up, along with other apps exploring ways to interact with a live stream.

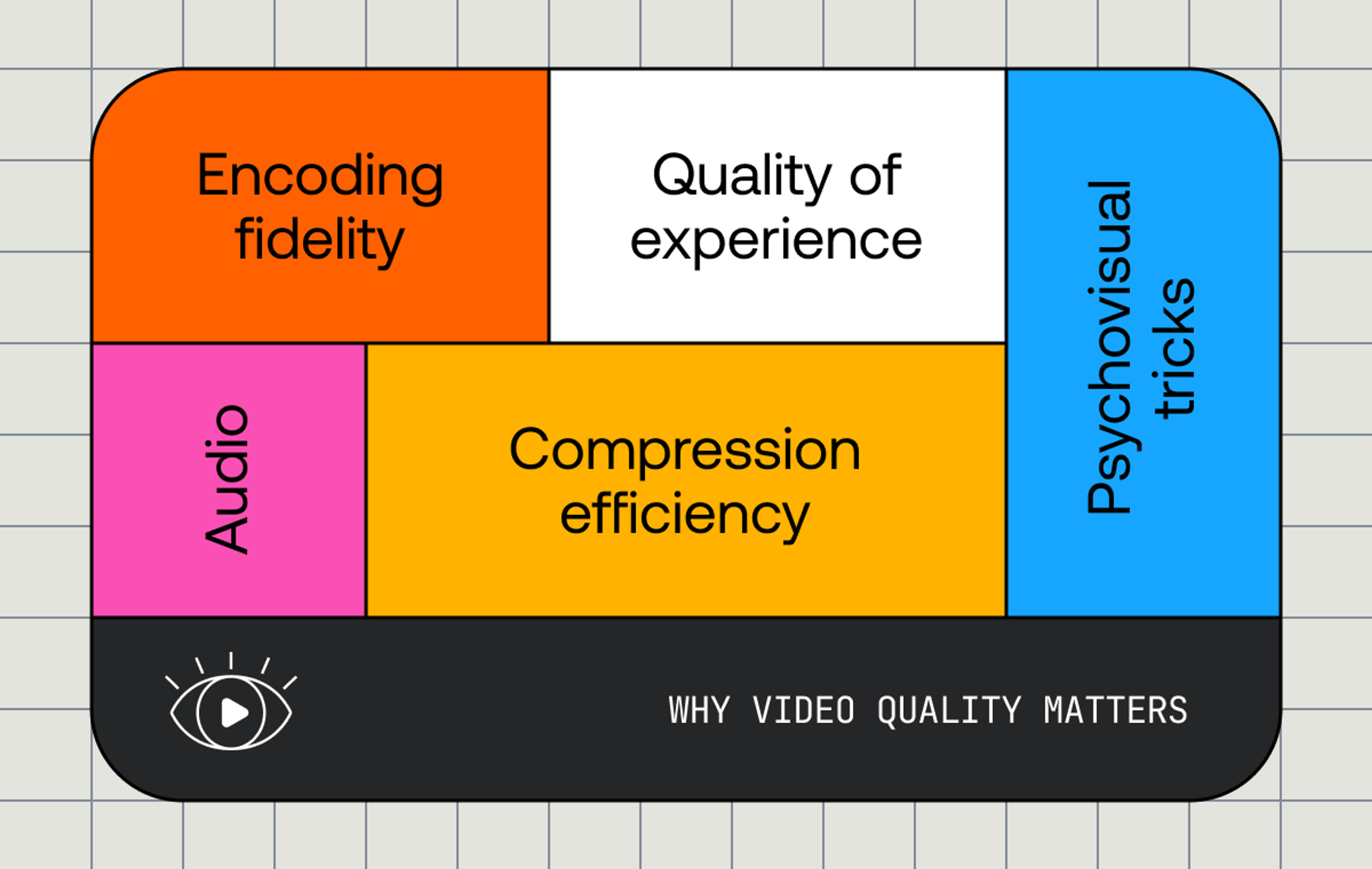

Interactive Live Streaming at Scale

We’ll call the technical challenge that HQ faced “Interactive Live Streaming at Scale”. Other apps in this space include Periscope (owned by Twitter), Facebook Live and Twitch. Viewers can ask questions via chat, answer polls, or just tap the screen to make hearts appear, all in real time. The person streaming can then answer the questions or respond to the viewers' actions quickly enough that the experience feels interactive for the viewer.

At-home fitness classes are similar. Cyclists, rowers or yoga students can communicate with the instructor, and the instructor can see and call out people taking the class in real time.

“Interactive live streaming” is distinctly different from broadcast media and real-time communication. It falls between these two modes and runs into the challenges of both.

Broadcast media - think Super Bowl. Streaming large events is a relatively mature technology space. It’s difficult, but it works pretty well. For the last few years the Super Bowl has been streamed over the internet to millions of viewers simultaneously, and it worked well for most people. Fox even streamed the 2020 Super Bowl in 4K/UHD.

Real-time communication - think Google Hangouts. This is one-to-one or many-to-many communication with two-way audio and video: a few people in a virtual room chatting with each other. It has to be interactive and extremely low latency; otherwise, people start talking over each other. Apps like these use a completely different software and networking stack from broadcast media, but they have been around for a while, work most of the time (when the network allows), and are relatively mature.

Interactive live streaming - this is new territory. It has the challenges of broadcast media: HQ was broadcasting to over 1 million concurrent viewers every single game. But it also has some of the challenges of real-time communication. In addition to delivering the stream, HQ had to let every viewer chat, respond, and send answers to questions. There is another constraint hidden here: playback time has to be synchronized across all the clients. Broadcast media can tolerate some misalignment between viewers. If two people are watching a football game and one person’s stream is 10 seconds ahead of the other’s, that difference is probably tolerable. In interactive live streaming, viewers take actions in response to what they see, so synchronized playback becomes critical: if a question reaches one viewer 10 seconds before another, the game is no longer fair.
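One way to get that synchronization is to tie game events to positions in the stream rather than to the server's wall clock. The sketch below assumes each question carries a hypothetical `stream_time` (seconds of media time, for example derived from HLS `EXT-X-PROGRAM-DATE-TIME` tags) and that each client knows its own playhead position. The `Question` type and both helper functions are illustrative, not HQ's actual design.

```python
from dataclasses import dataclass


@dataclass
class Question:
    """Hypothetical question message pushed over the real-time data channel."""
    text: str
    stream_time: float    # media time (seconds) at which the host asks the question
    answer_window: float  # seconds of media time viewers get to answer


def reveal_delay(q: Question, playhead: float) -> float:
    """Wall-clock seconds until this client's playhead reaches the question.

    A client that is further behind live waits longer, so every viewer sees
    the question at the same moment in the video.
    """
    return max(0.0, q.stream_time - playhead)


def answer_is_on_time(q: Question, playhead_at_answer: float) -> bool:
    """Judge an answer against the viewer's own playhead, not the server clock."""
    return q.stream_time <= playhead_at_answer <= q.stream_time + q.answer_window
```

For example, with `stream_time=120.0`, a client whose playhead is at 115.0 shows the question in 5 seconds, while a client at 105.0 waits 15 seconds. Trusting a client-reported playhead invites cheating, so a real system would also need to bound how far behind live a client may claim to be.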

What do you need to build interactive live video?

- Real-time data framework. There are lots of solutions here: Firebase, PubNub, Pusher, socket.io, Elixir with Phoenix, or any number of other WebSocket solutions. This part is fairly well understood.

- Low-latency video at scale. This is where it gets fun. There are no out-of-the-box solutions today, though the video community is starting to figure some out. Apple has done some recent work on low-latency video streaming over HLS, and we have written about it extensively, both when it was first announced and more recently when it was updated.
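The real-time data layer mostly comes down to fan-out: the game server publishes an event (a question, a result, a chat message) and every connected client receives it. Hosted services such as Firebase, PubNub or Pusher, or Phoenix Channels, do this across many machines. The toy below only illustrates the publish/subscribe pattern inside a single Python process using `asyncio` queues; the `Broker` class and the topic names are hypothetical.

```python
import asyncio
from collections import defaultdict
from typing import Any, DefaultDict, Set


class Broker:
    """Toy in-process pub/sub: every subscriber gets its own queue per topic."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, Set[asyncio.Queue]] = defaultdict(set)

    def subscribe(self, topic: str) -> asyncio.Queue:
        queue: asyncio.Queue = asyncio.Queue()
        self._subscribers[topic].add(queue)
        return queue

    def unsubscribe(self, topic: str, queue: asyncio.Queue) -> None:
        self._subscribers[topic].discard(queue)

    def publish(self, topic: str, message: Any) -> int:
        """Deliver a message to every current subscriber; return how many got it."""
        subscribers = self._subscribers[topic]
        for queue in subscribers:
            queue.put_nowait(message)
        return len(subscribers)


async def demo() -> list:
    """Simulate three viewers subscribed to one game, then push a question."""
    broker = Broker()
    viewers = [broker.subscribe("game:42") for _ in range(3)]
    broker.publish("game:42", {"type": "question", "id": 1})
    return [await queue.get() for queue in viewers]
```

Running `asyncio.run(demo())` returns the same question message three times, once per simulated viewer. The hard part at HQ's scale is doing this across many servers and a million open connections, which is exactly what the hosted options above are built for.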

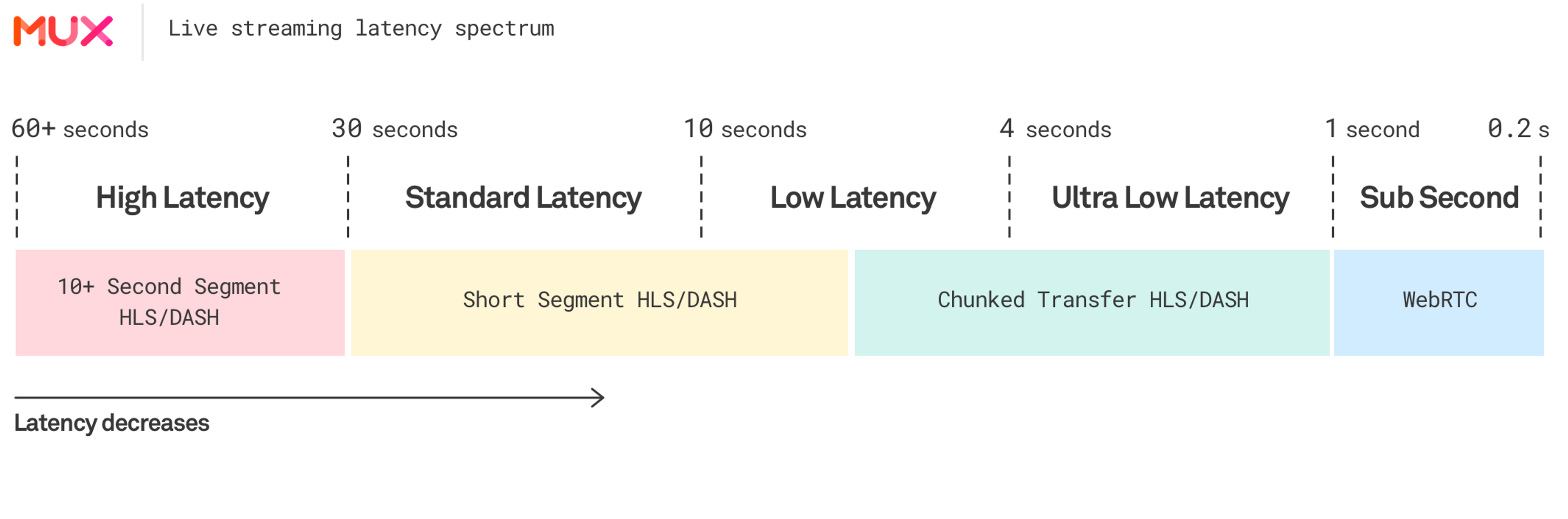

The cutoff points in this spectrum are a little arbitrary, but the spectrum itself is an extremely valuable way to think about the needs of a live streaming product. There’s always a tradeoff between latency on one side and cost and stability on the other. Of course, every streaming product would love to have less latency. Periscope, Twitch and other interactive live streaming products would love to get to sub-second latency, but as latency decreases, cost increases and stability decreases. For most interactive live streaming products, we find that the sub-10-second “Low Latency” range of this spectrum is acceptable.
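A rough way to see why segment size drives latency: the HLS specification says a client should not start playback less than three target durations from the end of the playlist, so latency is roughly the media the player holds behind the live edge plus fixed pipeline overhead. The function and every number below are illustrative assumptions, not measurements.

```python
def estimate_latency(unit_seconds: float, units_behind_live: int,
                     overhead_seconds: float) -> float:
    """Rough glass-to-glass latency estimate, in seconds.

    unit_seconds:      duration of the smallest piece the player waits on
                       (a whole segment, or a chunk when using chunked transfer)
    units_behind_live: how many of those pieces the player stays behind the live edge
    overhead_seconds:  assumed fixed cost of capture, encode, ingest, transcode and CDN hops
    """
    return unit_seconds * units_behind_live + overhead_seconds


# Illustrative scenarios, all assuming 3 units behind live and 3 s of fixed overhead.
SCENARIOS = {
    "6 s segments": estimate_latency(6.0, 3, 3.0),
    "2 s segments": estimate_latency(2.0, 3, 3.0),
    "0.5 s chunks": estimate_latency(0.5, 3, 3.0),
}
```

Under these assumptions, 6-second segments land around 21 seconds, 2-second segments around 9 seconds, and half-second chunks around 4.5 seconds. Shrinking the unit the player has to wait for is the main lever; the fixed overhead is what keeps sub-second latency out of reach for HTTP delivery.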

Breaking down the first part

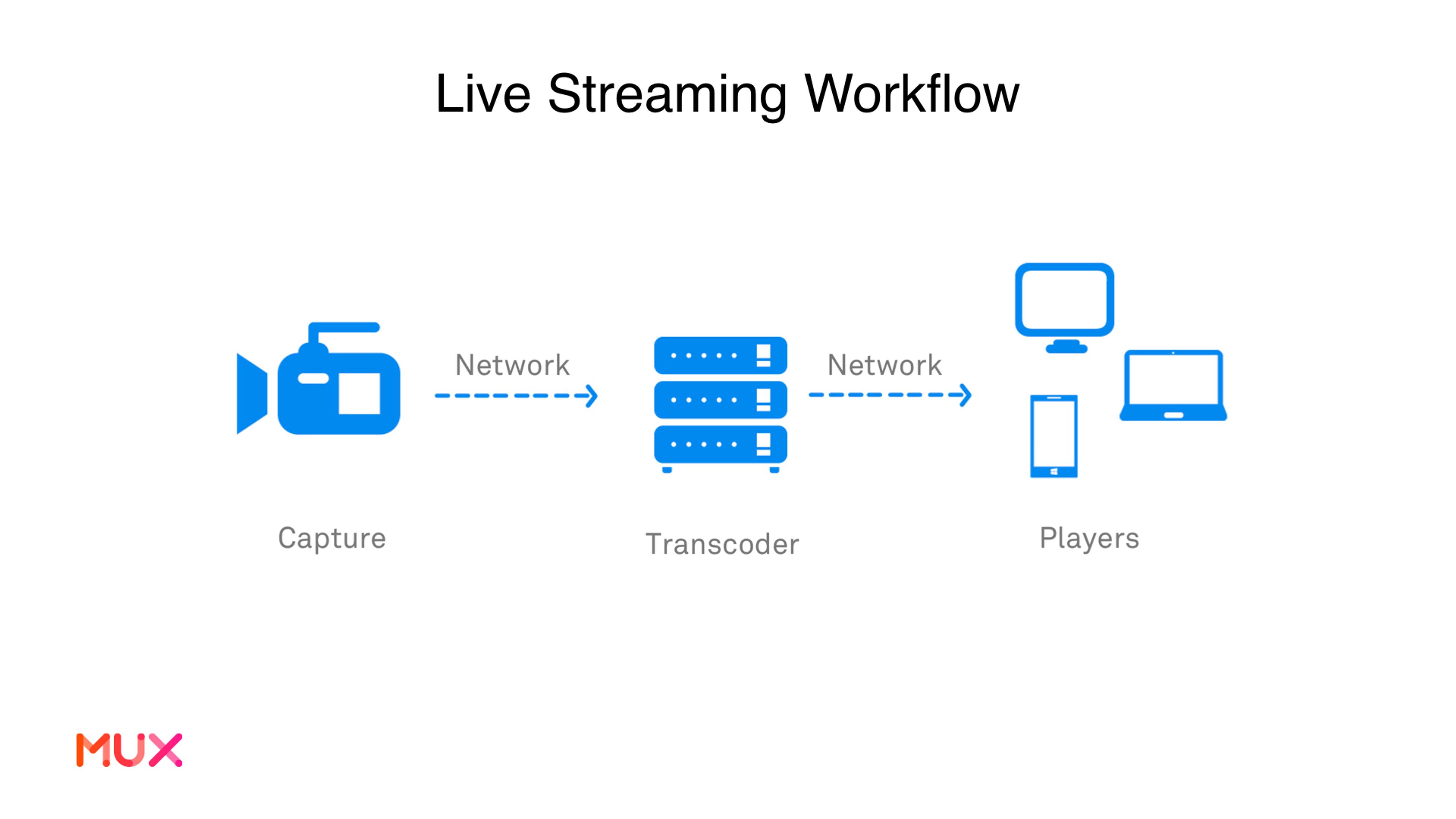

The first step is to get video from the source into the transcoder on your server.

Getting video in

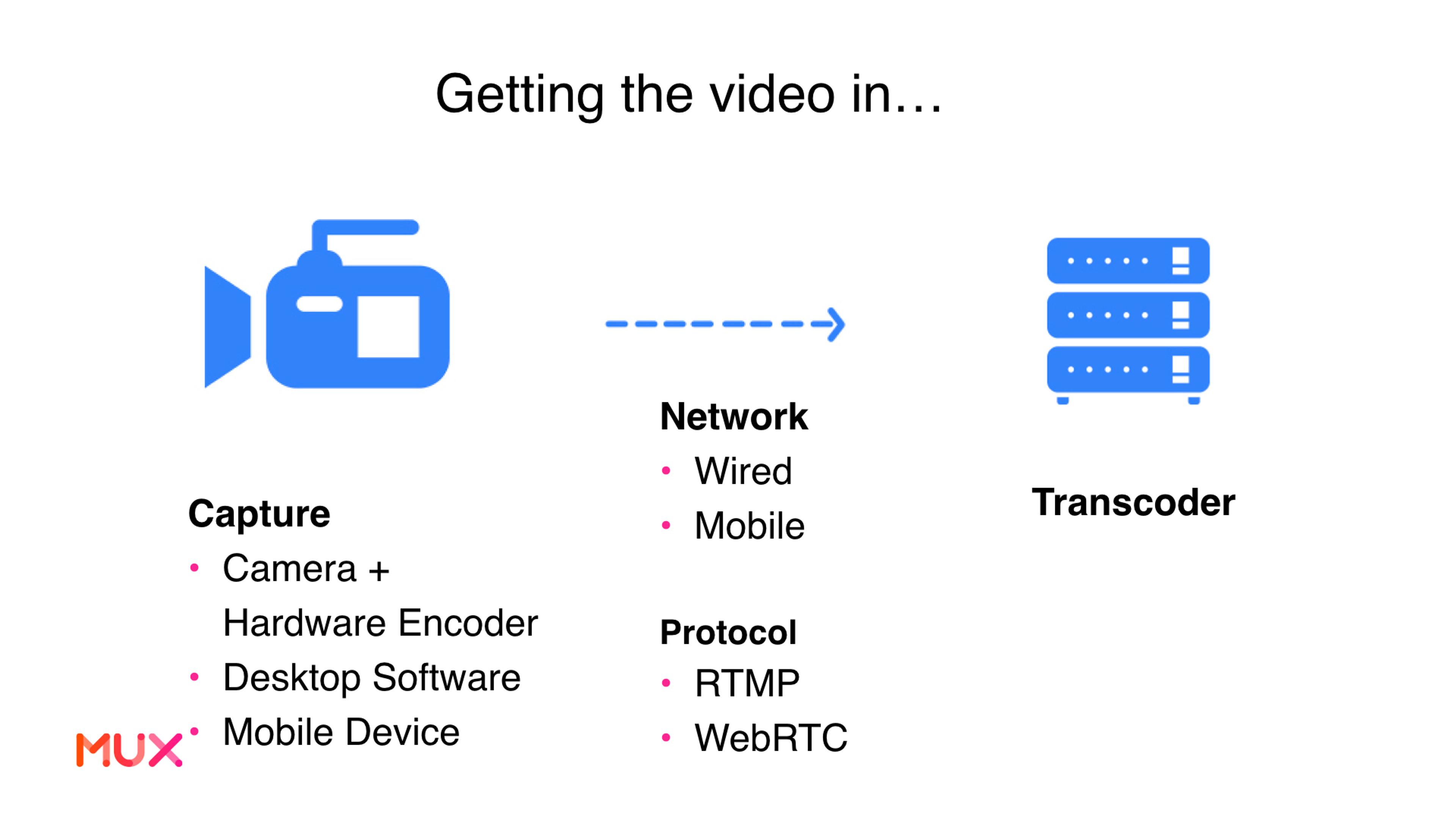

First you need to get your content captured and encoded so it can be transported over the internet.

- Camera + Hardware Encoder - if you have a studio setup this is an option. For something like HQ, this is probably what they were doing in their studio.

- Desktop Software - the most popular software for Twitch streamers is the free OBS desktop application.

- Mobile Device - if you have an application for user-generated content, like Periscope or Facebook Live, you’ll have to capture from the mobile device.
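Whichever encoder you use, its job is the same: compress the video and push it to the ingest server, typically over RTMP. As a software-encoder sketch, the function below builds an `ffmpeg` command line that pushes a local file as if it were a live source. The ingest URL and stream key are placeholders, and the bitrate and keyframe settings are assumptions you would tune for your own content.

```python
from typing import List


def rtmp_push_command(source: str, ingest_url: str, stream_key: str,
                      video_kbps: int = 3500, fps: int = 30,
                      keyframe_seconds: int = 2) -> List[str]:
    """Build an ffmpeg command that encodes `source` and pushes it over RTMP."""
    return [
        "ffmpeg",
        "-re",                      # read input at its native frame rate, like a live source
        "-i", source,
        "-c:v", "libx264",
        "-preset", "veryfast",
        "-tune", "zerolatency",     # turn off x264 features that add encoder delay
        "-b:v", f"{video_kbps}k",
        "-r", str(fps),
        "-g", str(fps * keyframe_seconds),  # regular keyframes let the transcoder cut segments cleanly
        "-c:a", "aac",
        "-b:a", "128k",
        "-f", "flv",                # RTMP carries FLV-packaged media
        f"{ingest_url.rstrip('/')}/{stream_key}",
    ]
```

Passing the returned list to `subprocess.run(cmd, check=True)` starts the push; OBS or a hardware encoder does the equivalent work with a GUI or a front panel.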

Once the video content reaches our server we’ll be running a transcoder that processes the live stream and gets it ready for delivery to players.

Transcoder

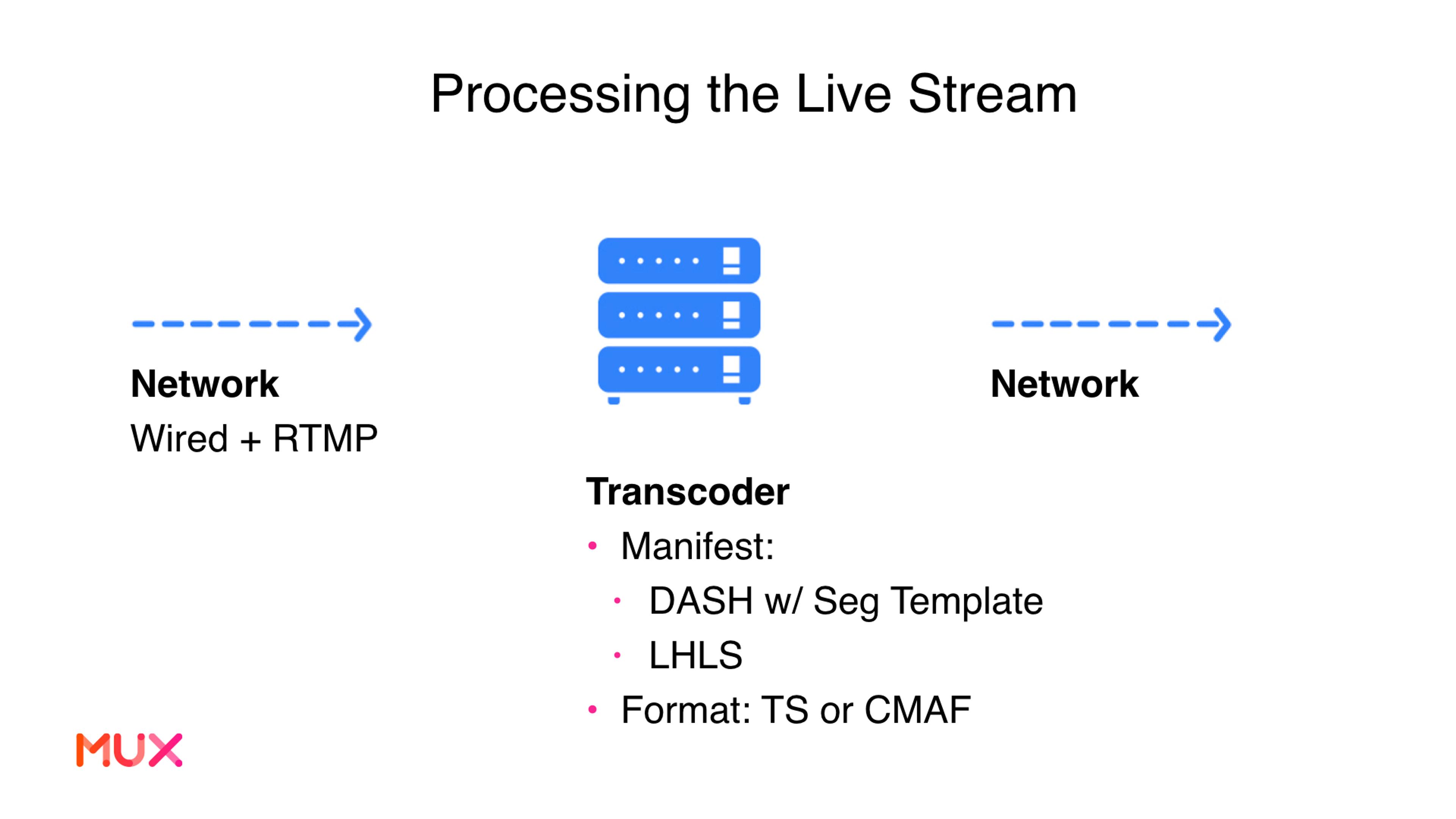

The transcoder running on our server processes the high-quality incoming RTMP stream and creates multiple renditions of the video at a range of quality levels, for example 1080p, 720p and 540p, so the player can pick the one that suits its connection.
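The rendition ladder is just data, and the player discovers it through a top-level manifest. The sketch below writes an HLS multivariant (master) playlist for the 1080p/720p/540p example. The bitrates and per-rendition playlist paths are hypothetical, and a production playlist would usually also declare `CODECS`.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Rendition:
    name: str
    width: int
    height: int
    bandwidth_bps: int  # peak bitrate, which is what the BANDWIDTH attribute expresses


# Hypothetical ladder matching the 1080p/720p/540p example.
LADDER = [
    Rendition("1080p", 1920, 1080, 5_000_000),
    Rendition("720p", 1280, 720, 3_000_000),
    Rendition("540p", 960, 540, 1_500_000),
]


def master_playlist(ladder: List[Rendition]) -> str:
    """Render an HLS multivariant playlist pointing at one media playlist per rendition."""
    lines = ["#EXTM3U"]
    for r in ladder:
        lines.append(
            f"#EXT-X-STREAM-INF:BANDWIDTH={r.bandwidth_bps},RESOLUTION={r.width}x{r.height}"
        )
        lines.append(f"{r.name}/index.m3u8")
    return "\n".join(lines) + "\n"
```

The player reads this file once, then switches between the per-rendition media playlists as its measured bandwidth changes.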

The transcoder will also create manifest files for each of the renditions. If you’re unfamiliar with DASH and HLS manifests and how streaming video works, we made a resource that explains all of it at howvideo.works. For low-latency streaming, there are two formats to be aware of:

- DASH w/ Segment Template

- LHLS

Both of these manifest formats let the player know which segment files are coming before they are ready, so it can start requesting them early. For the individual video chunks, you have two container options: TS (MPEG-2 Transport Stream) or CMAF.
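With DASH `SegmentTemplate` and `$Number$` addressing, the manifest gives the player a URL pattern, a segment duration and an `availabilityStartTime`, so the player can work out segment numbers from the clock instead of waiting for the manifest to list each file. This is a simplified sketch: it assumes constant-duration segments and ignores details such as `availabilityTimeOffset`, `presentationTimeOffset` and `$Number%05d$`-style formatting, and the template string in the usage note is hypothetical.

```python
import math
from datetime import datetime, timedelta, timezone
from typing import Tuple


def fill_template(template: str, representation_id: str, number: int) -> str:
    """Substitute the $RepresentationID$ and $Number$ identifiers in a SegmentTemplate URL."""
    return (template
            .replace("$RepresentationID$", representation_id)
            .replace("$Number$", str(number)))


def live_segment_numbers(availability_start: datetime, now: datetime,
                         segment_seconds: float, start_number: int = 1) -> Tuple[int, int]:
    """Return (latest complete segment, segment currently being produced).

    A conventional player requests the latest complete segment; with chunked
    transfer it can request the in-progress one early and receive it as it is encoded.
    """
    elapsed = (now - availability_start).total_seconds()
    completed = math.floor(elapsed / segment_seconds)
    return start_number + completed - 1, start_number + completed
```

For example, 61 seconds after `availabilityStartTime` with 6-second segments starting at number 1, segment 10 is complete and segment 11 is in progress, so a low-latency player would request something like `video/720p/seg-11.m4s` from `video/$RepresentationID$/seg-$Number$.m4s` before it has finished encoding.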

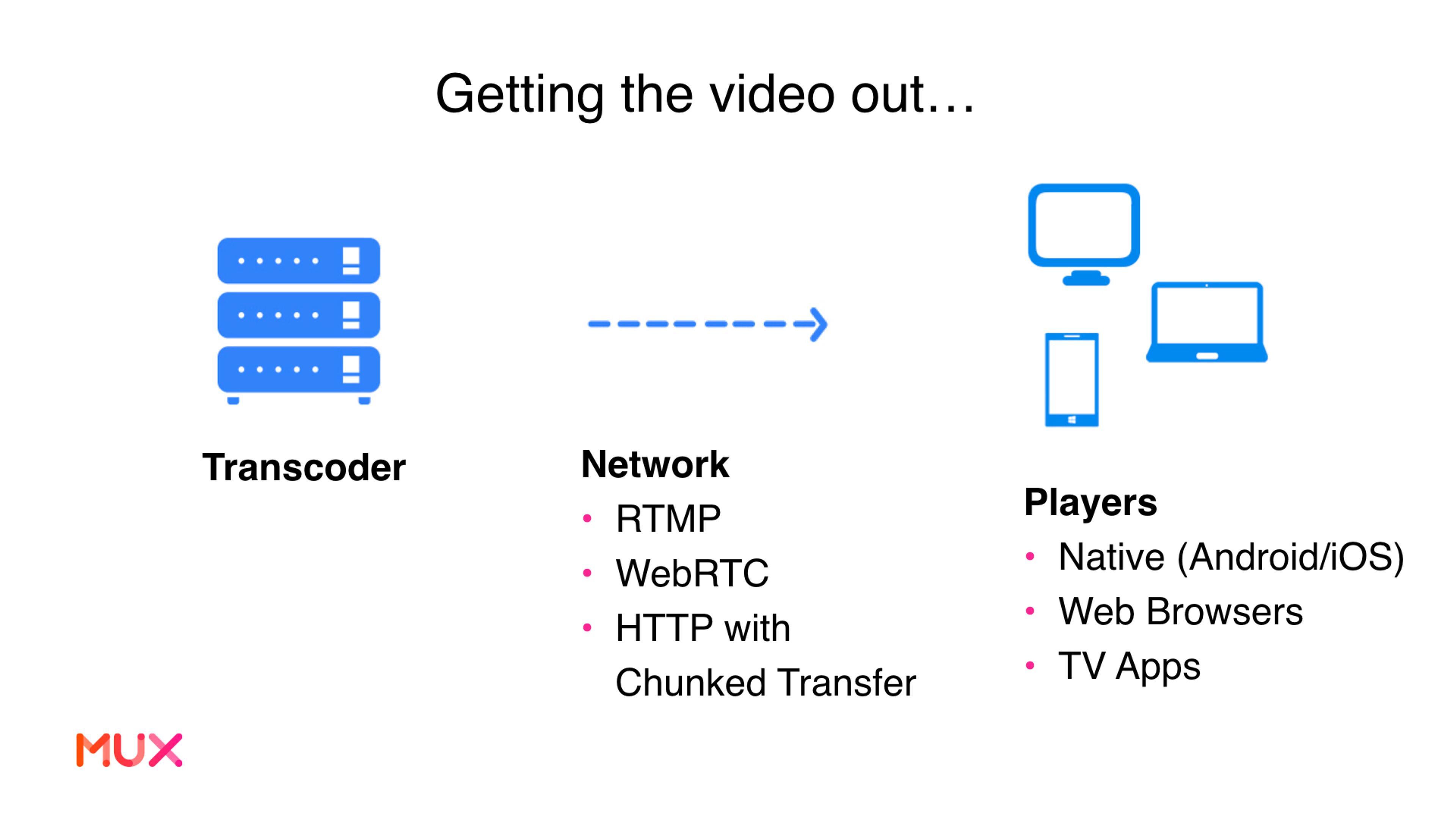

At this point the video segments have been created and stored on our server, and the manifests are written. Now we need to get this video to our players.

Getting video out

RTMP and WebRTC both require persistent connections to each client. Serving hundreds, thousands or millions of viewers means scaling up a massive number of servers just to maintain all those connections. In the early days of HQ, we could see that they were using RTMP to stream video to viewers. To support 500k to 1M viewers per game, we estimated the servers alone would cost $15,000 to $20,000. Adding in the cost of network bandwidth, we estimated they were spending $50,000 to $100,000 per show.
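The bandwidth side of that estimate is simple arithmetic: total egress is viewers × bitrate × duration. A back-of-the-envelope sketch follows; the bitrate, show length and connections-per-server figures in the usage note are hypothetical inputs, not HQ's real numbers.

```python
import math


def egress_gigabytes(viewers: int, bitrate_mbps: float, minutes: float) -> float:
    """Total data delivered to all viewers, in gigabytes (10^9 bytes)."""
    bits = viewers * bitrate_mbps * 1_000_000 * minutes * 60
    return bits / 8 / 1_000_000_000


def servers_needed(viewers: int, connections_per_server: int) -> int:
    """Servers required when every viewer holds a persistent connection (RTMP, WebRTC)."""
    return math.ceil(viewers / connections_per_server)
```

For example, 1,000,000 viewers watching a 2 Mbps stream for 15 minutes works out to 225,000 GB (225 TB) of egress, and at an assumed 10,000 connections per server you would need 100 servers just to hold the connections. With HTTP delivery the egress is still there, but the connection count becomes the CDN's problem rather than yours.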

HTTP with chunked transfer encoding is a much more cost-effective way to send your video to clients. Because this method uses plain HTTP and doesn't require persistent connections between the client and your media server, you can lean on traditional CDNs to do the heavy lifting. Chunked transfer is the feature that makes low-latency delivery possible: if a video segment contains 6 seconds of video, the player can start downloading its first few seconds before the entire segment has been produced.
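Under HTTP/1.1, chunked transfer encoding frames the response body as a series of length-prefixed chunks, so the origin can flush each piece of a segment as soon as the encoder produces it instead of waiting for the whole file. The sketch below shows only the wire framing; in practice your web server or CDN handles it for you, and HTTP/2 and HTTP/3 stream response bodies natively without chunked encoding. The function names are illustrative.

```python
from typing import Iterable, Iterator


def chunked_frames(pieces: Iterable[bytes]) -> Iterator[bytes]:
    """Frame pieces of a response body as HTTP/1.1 chunks, as they become available."""
    for piece in pieces:
        if piece:  # a zero-length chunk means "end of body", so skip empty pieces
            yield f"{len(piece):X}\r\n".encode("ascii") + piece + b"\r\n"
    yield b"0\r\n\r\n"  # last-chunk marker, no trailers


def decode_chunked(data: bytes) -> bytes:
    """Reassemble a complete chunked body (strips chunk extensions, ignores trailers)."""
    body = bytearray()
    pos = 0
    while True:
        line_end = data.index(b"\r\n", pos)
        size = int(data[pos:line_end].split(b";")[0], 16)
        pos = line_end + 2
        if size == 0:
            return bytes(body)
        body += data[pos:pos + size]
        pos += size + 2  # skip the chunk data and its trailing CRLF
```

If `pieces` is a generator that yields each CMAF chunk as the packager finishes it, the player receives the first chunk of a 6-second segment long before the last one exists.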

Summary

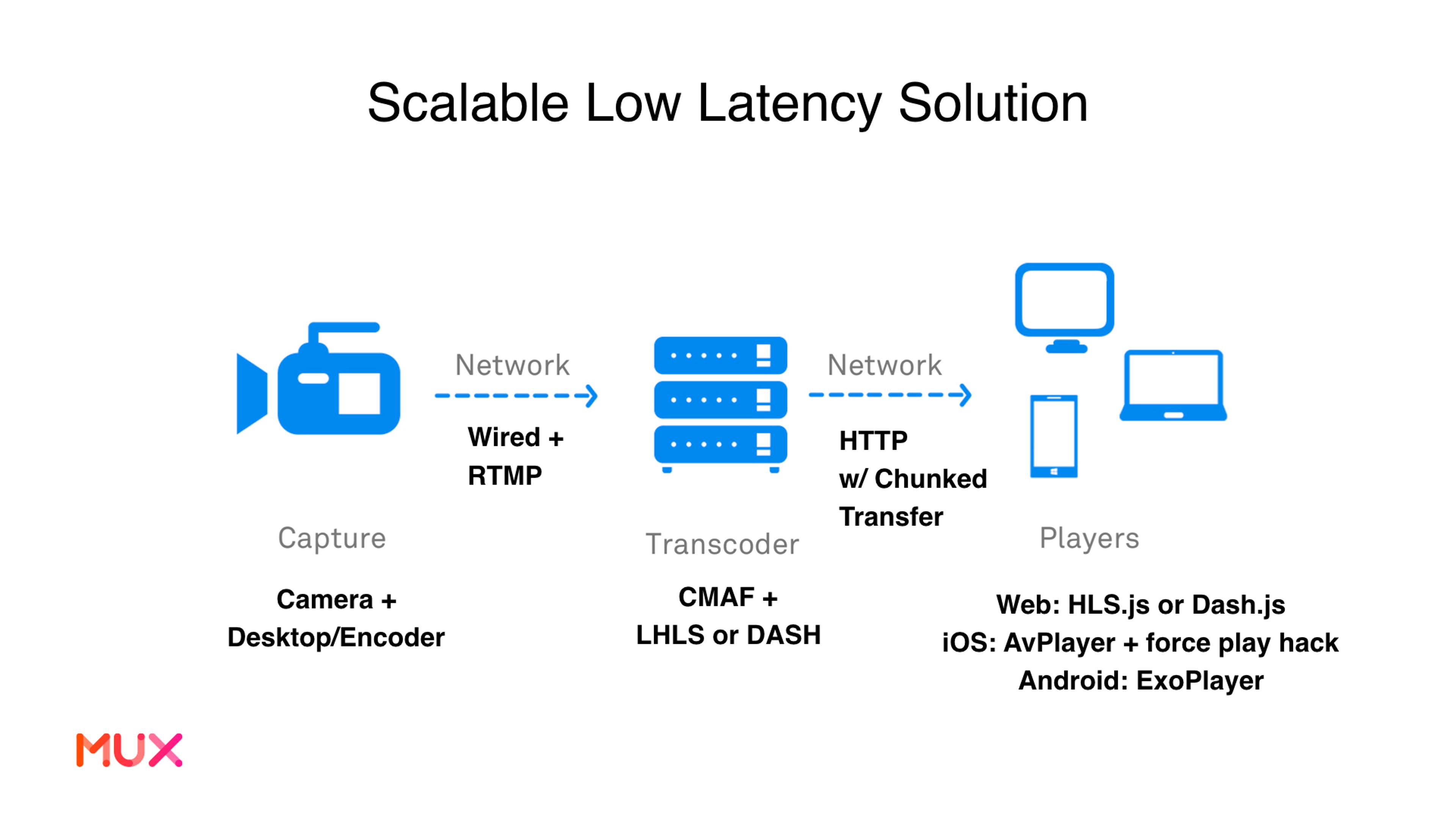

The diagram above summarizes a scalable low-latency streaming solution.

What Mux is doing for low latency

Everything above applies to building your own low-latency, interactive streaming solution. But we’re a company that makes APIs for video, so what are we doing to help, so that you don’t have to run your own ingest servers, transcoders and delivery pipeline? We have a few things today. This is a space we’re actively working to improve, and members of our team are closely involved with the standards bodies developing the technologies that will make this a reality for everyone.

- Mux has a live streaming API that will set up an RTMP ingest server and give you back a playback URL that you can use in your video players on any platform. By default you will get standard latency (~20-30 seconds).

- The live streaming API also has a `reduced_latency` flag that brings latency down to the 8-14 second range. The tradeoff is that you don’t get a reconnect window: any time a live stream disconnects, a new asset is created. Read more in the blog post.
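As a sketch of what creating a reduced-latency live stream might look like, the function below builds (but does not send) a request to the Mux live streams endpoint using only the Python standard library. The endpoint URL and the `playback_policy` and `new_asset_settings` fields are assumptions based on the API as we understand it at the time of writing, and the credentials are placeholders, so check the current API reference before relying on any of it.

```python
import base64
import json
import urllib.request

# Assumed endpoint and request fields; verify against the current Mux API reference.
MUX_LIVE_STREAMS_URL = "https://api.mux.com/video/v1/live-streams"


def create_live_stream_request(token_id: str, token_secret: str,
                               reduced_latency: bool = False) -> urllib.request.Request:
    """Build a POST request asking Mux to create a live stream (not sent here)."""
    body = {
        "playback_policy": ["public"],
        "new_asset_settings": {"playback_policy": ["public"]},
        "reduced_latency": reduced_latency,  # the flag described above
    }
    credentials = base64.b64encode(
        f"{token_id}:{token_secret}".encode("utf-8")
    ).decode("ascii")
    return urllib.request.Request(
        MUX_LIVE_STREAMS_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {credentials}",  # API access token ID and secret
        },
        method="POST",
    )
```

Sending it with `urllib.request.urlopen(...)` and parsing the JSON response should give you a stream key to configure in your encoder and a playback ID for your player.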

This is the current state of live streaming at Mux. As mentioned earlier, these are Phil's two posts about Apple’s work on low-latency live streaming:

- The community gave us low-latency live streaming. Then Apple took it away.

- Low Latency HLS 2: Judgment Day

Our team is staying closely involved with the groups developing low-latency live standards. Stay tuned for more news.