The highest possible Video Quality has long been the obsession of video engineers and movie nerds alike. The highest resolution, on the biggest screen, with the biggest depth of colour, delivered by the highest bitrate!

However, measuring Video Quality is not that straightforward. What we do at Mux is measure the change in Video Quality, by checking whether it has been worsened through unwanted video Upscaling or Downscaling. The actual delivery of high video quality is largely down to the host, or even the provider of the content. We can’t change the quality of the source file any more than anyone else can once the video is uploaded.

So in this article we are going to explore what makes for good video quality - on the host’s end - and what we can do to alert you to poor quality issues - on our end.

Before we get into that though, I want to highlight the difference between Video Quality and Content Quality.

The elements of Video Quality

Video quality comprises: Bitrate, Codec and the Quality of the Source (including resolution).

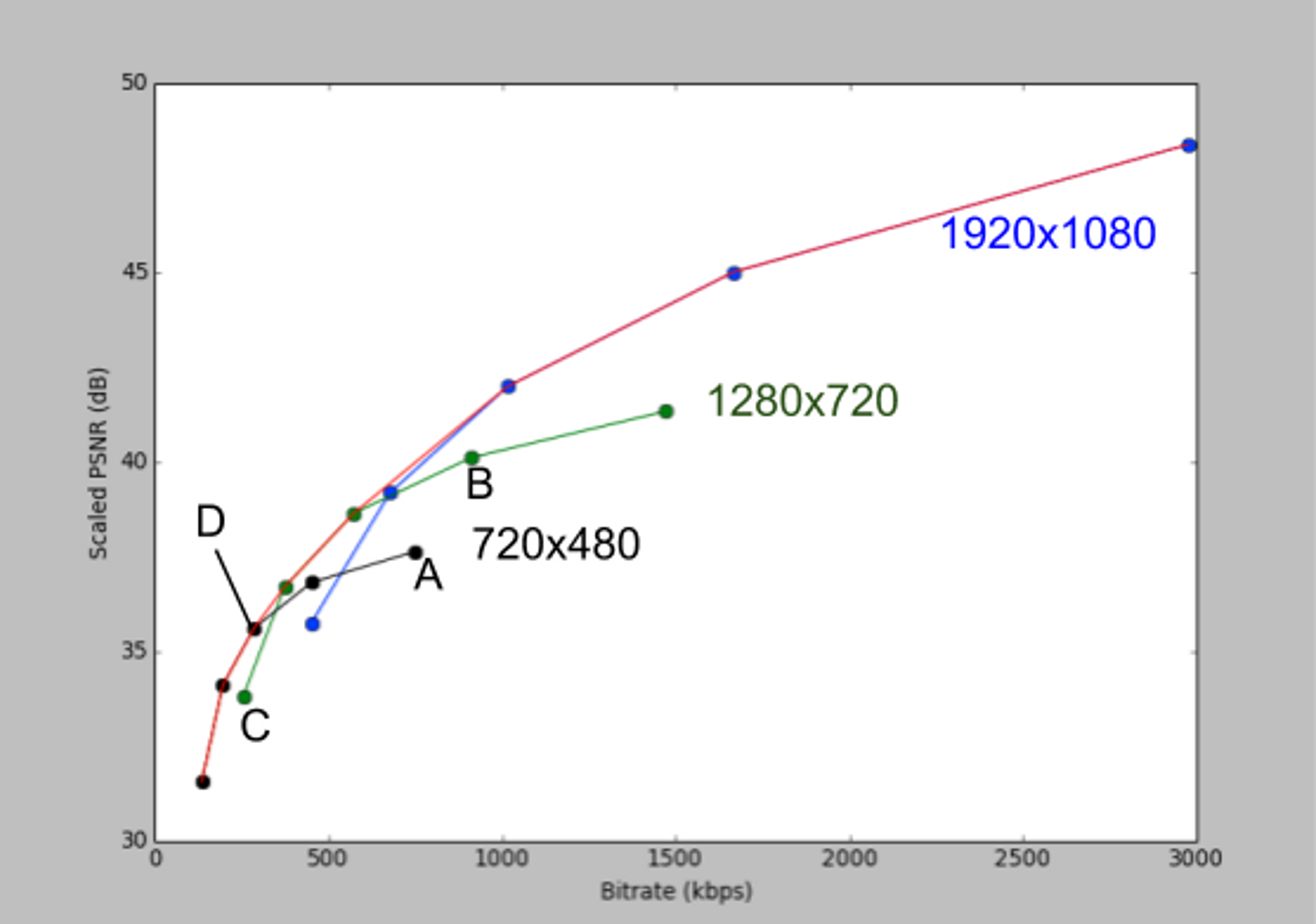

When it comes to Bitrate, we are talking about the optimal bitrate for any given video. Thanks to some wonderful work from Netflix, we have an excellent guideline to work with.

“At each resolution, the quality of the encode monotonically increases with the bitrate, but the curve starts flattening out when the bitrate goes above some threshold. This is because every resolution has an upper limit in the perceptual quality it can produce”

What this boils down to is that a 10 Mbps encoding bitrate isn’t going to make 480p look any less like 480p. For any Resolution there are diminishing returns on the perceptible quality as you increase the bitrate. Now most of the time, if your viewer’s device can handle a higher resolution, and their bandwidth can handle the higher bitrate without unholy rebuffering, then you can probably deliver high quality video, hassle free.

However, a Resolution of 1080p with a Bitrate of 500 kbps is considerably worse quality than 720p - or even 480p - at the same bitrate, because the same number of bits has to be spread across far more pixels. That is valuable information when we’re thinking about efficiency for low bandwidth and smaller screens.
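To put rough numbers on that trade-off, here is a minimal sketch that works out how many encoded bits are available per pixel, per frame, at a fixed bitrate. Bits per pixel is only a crude heuristic and not a perceptual quality measure, and the frame rate of 30 fps, the pixel dimensions and the function names are all assumptions made for illustration.

```python
# Assumed frame sizes for each resolution label (illustrative only).
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "720p": (1280, 720),
    "480p": (854, 480),
}


def bits_per_pixel(bitrate_kbps, width, height, fps=30.0):
    """Average encoded bits available for each pixel of each frame."""
    return (bitrate_kbps * 1000) / (width * height * fps)


def budget_at(bitrate_kbps, fps=30.0):
    """Bits-per-pixel budget for every resolution at the same bitrate."""
    return {
        name: bits_per_pixel(bitrate_kbps, w, h, fps)
        for name, (w, h) in RESOLUTIONS.items()
    }


if __name__ == "__main__":
    for name, bpp in budget_at(500).items():
        print(f"{name}: {bpp:.4f} bits per pixel")
```

At 500 kbps the 480p encode has roughly five times as many bits to spend on each pixel as the 1080p encode, which is why the smaller picture tends to look cleaner at that bitrate.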

When it comes to Codecs, we’re talking about H.264.

Alright, moving on…

Okay, okay... there is a little more we can say about codecs now that the H.264 versus VP9 debate is heating up, although that is already being pushed aside by the advances in VP10, the promise of H.265, and the exciting potential of AV1. But day to day, most people will still be using H.264 for a while yet.

The third component, the Quality of the Source, is perhaps a little more abstract, but largely we mean the source file and how well it has been encoded. A lossy encode would naturally reduce the quality of the source, as would re-encoding the video multiple times.

Does it have any artifacts? Does it have any interlacing issues? Does the video have colour issues? Or was the original footage over-exposed with too much light?

There can be a lot of problems with the source file that can create problems with the video quality, and “fixing it in post” is rarely an option. To quote a Netflix blog post, “Garbage in means Garbage out”.

The components of Content Quality

Content Quality then, is a more comprehensive view of the whole experience. We’re coming out of Videoland now, to look at the whole Island of Content.

Content Quality comprises: Video Quality, Audio Quality and the quality of Subtitles/Closed Captions.

This is the definition from the Netflix article on High Quality Video Encoding at Scale, and I really like that they included the quality of the Subtitles and CC as part of the definition of content quality as a whole. For a great discussion on doing more with subtitles, I recommend Owen Edwards’ talk from Demuxed 2015.

Now, any good Videographer can also tell you that Audio Quality is absolutely crucial to good Video. If the Audio is painful to listen to, keeps skipping, or is simply out of sync, no amount of Video Quality is going to save it. People will watch poor quality video as long as the audio is still good. My favourite example of this is a Bloc Party music video from 2006 with over 22 million views that was encoded by a potato.

The Problems Video Quality Faces Online

The most obvious problem for delivering video is the bitrate at which the viewer can download the video. This is largely down to their connection via their Internet Service Provider, as well as the condition of the actual wiring itself.

However, on the host’s end, the wonderful technology of Adaptive Streaming can be used to provide a comparable video experience with less buffering. Progressive Streaming has only one resolution, which is only optimal for a screen of that exact resolution. Adaptive Streaming instead provides several encodes of the same video - at different resolutions and bitrates - in parallel.

This allows for the optimal resolution for any given screen, as well as the ability for the stream to switch down to a lower resolution, or a more compressed version of the original resolution, to prevent buffering.
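As a rough illustration of that switching behaviour, the sketch below picks one rendition from a ladder given the viewer's display height and measured bandwidth. Real players use far more sophisticated logic (buffer levels, throughput history and so on), so treat this as a simplified assumption. The `Rendition` class, the `LADDER` values, the `pick_rendition` function and the `safety` margin are all hypothetical names and numbers, not part of any player's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    height: int        # vertical resolution in pixels
    bitrate_kbps: int  # average encoded bitrate


# Hypothetical ladder; the numbers are illustrative, not recommendations.
LADDER = [
    Rendition(360, 800),
    Rendition(480, 1400),
    Rendition(720, 2800),
    Rendition(1080, 5000),
]


def pick_rendition(ladder, display_height, bandwidth_kbps, safety=0.8):
    """Pick the tallest rendition that suits both the screen and the bandwidth."""
    by_height = sorted(ladder, key=lambda r: r.height)
    # Smallest rendition that fully covers the display; anything taller
    # would only be downscaled. Fall back to the tallest if none covers it.
    cap = next(
        (r.height for r in by_height if r.height >= display_height),
        by_height[-1].height,
    )
    # Leave some headroom so small bandwidth dips do not cause rebuffering.
    budget = bandwidth_kbps * safety
    fits = [r for r in by_height if r.height <= cap and r.bitrate_kbps <= budget]
    # If nothing fits the bandwidth budget, play the smallest rendition anyway.
    return fits[-1] if fits else by_height[0]
```

With this ladder, a 1080p screen on a 2,000 kbps connection would be handed the 480p rendition: it trades resolution for uninterrupted playback.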

However, this is all still based on the assumption that the original source quality was "good". Even then, pixels cannot be invented where there were none - 360p will always be 360p - and this is where Upscaling and Downscaling come in.

Upscaling and Downscaling - Harbingers of Video Quality

As we mentioned at the start of this article, at Mux we cannot tell you if your original video file was of good quality before it was uploaded, but we can tell you when it changes.

Upscaling is when a video is stretched beyond its original size, while Downscaling is when the video is squeezed smaller than its original size.

So if a 720p video is fullscreened on a 1080p monitor, the pixels have been stretched to fill the new resolution, degrading the perceived quality and creating a less than optimal viewing experience. No matter how high the original bitrate might have been, quality has been lost.

While Upscaling is a very obvious loss in quality, blown up in front of the viewer, Downscaling can be less of an issue.

If a 1080p video is downscaled to a 360p mobile screen, you have lost quality in the sense that the resolution has been greatly reduced. This is a less apparent issue for the viewer, in fact they might not even notice, but in reality pixels have been lost and the video quality has been reduced. This is somewhat abstract though, so you may want to think of downscaling as an efficiency problem, rather than a perceptible drop in quality.

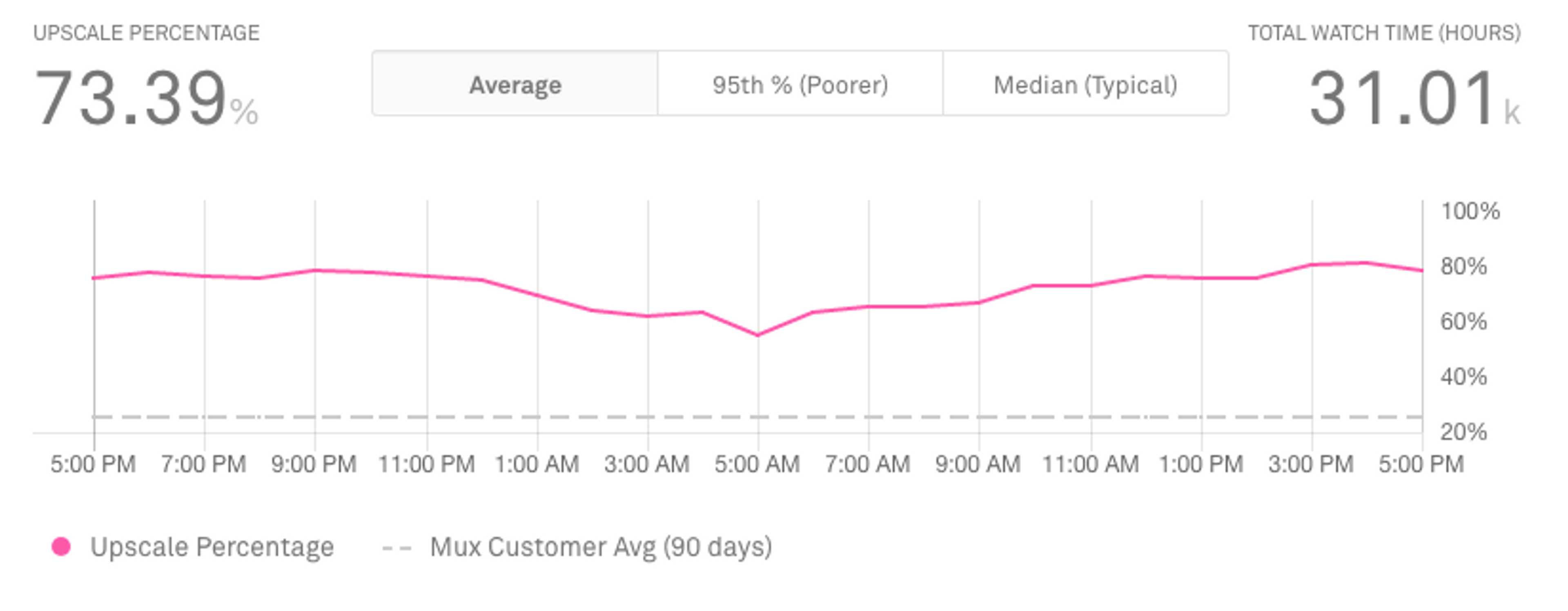

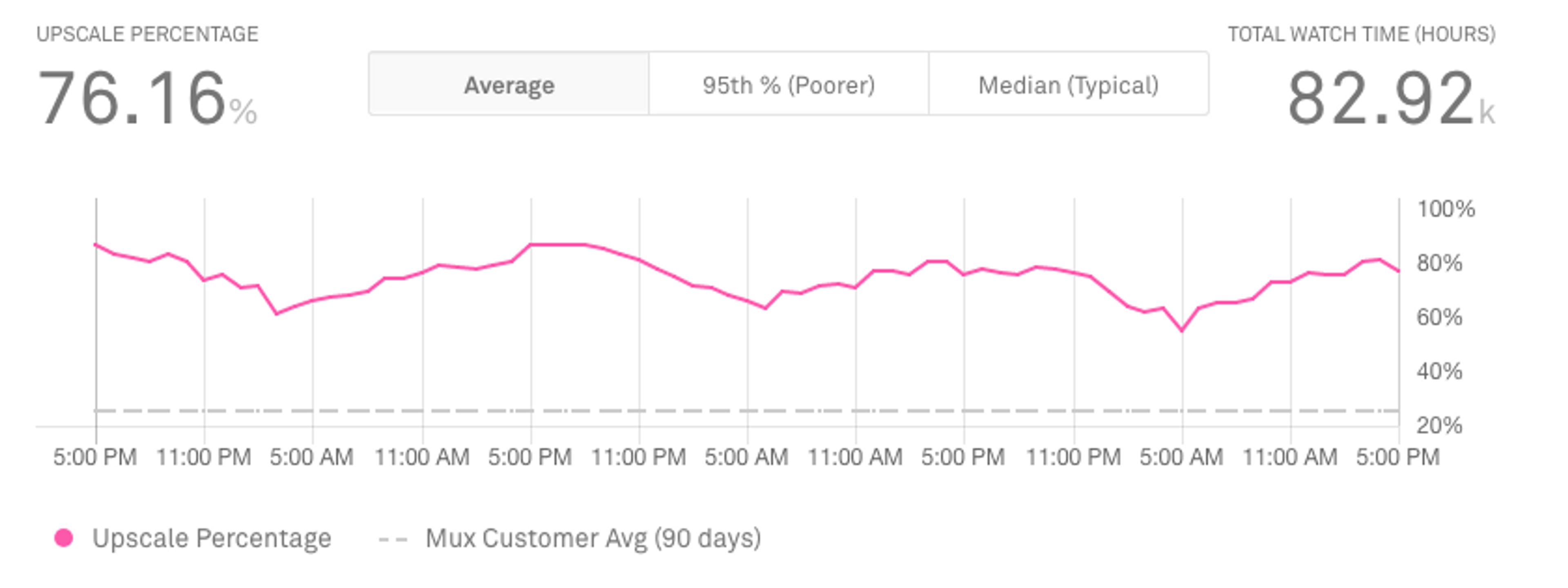

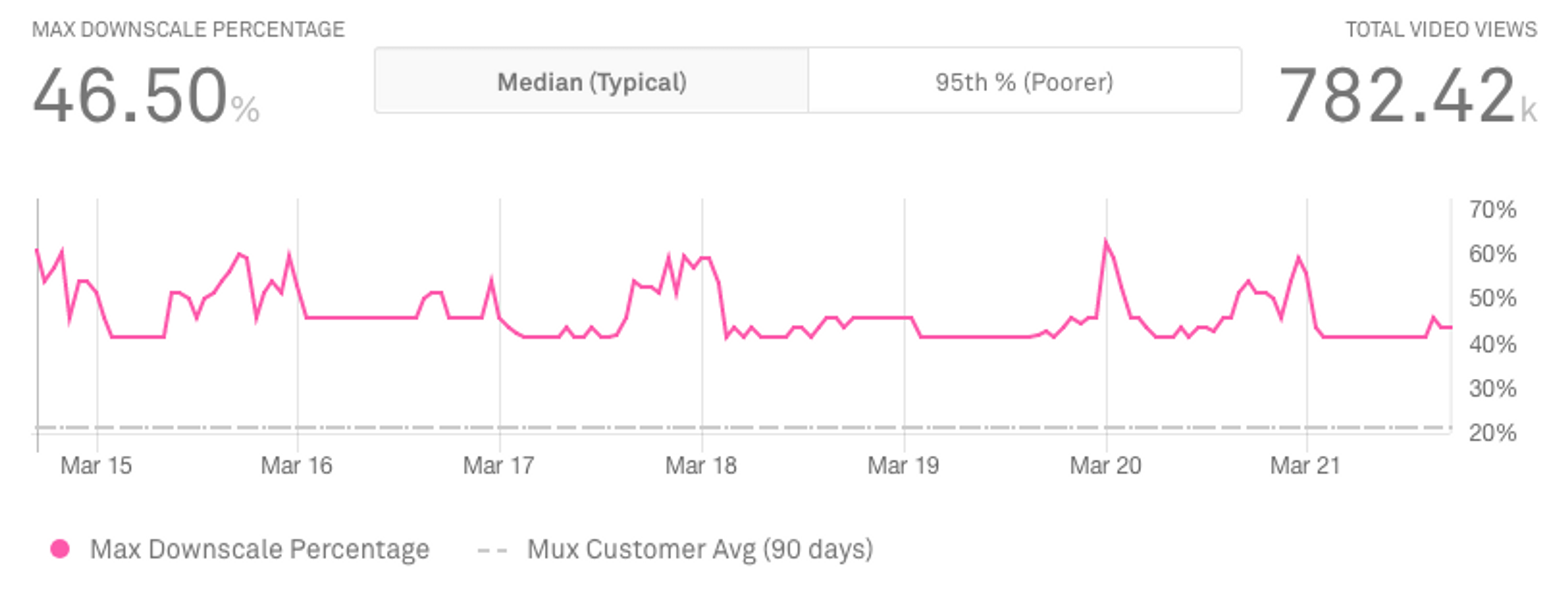

At Mux we measure the Upscaling Percentage, Downscaling Percentage, and the Maximum Upscaling and Downscaling.

Now a quick note on the maths involved here: 0% is the base value.

So 0% Upscaling means the video is playing at its original dimensions, without any deviation in quality. Similarly, Upscaling of 100% means that the video is displayed at twice its original dimensions.

To be super clear, an upscaling of 25% means the video has been stretched by an amount equal to a quarter of its original size. Or to put it another way, your pancake is thinner because it’s been put in a pan that’s 25% larger than your first one. This does not mean the video is experiencing 75% Downscaling. We’re always counting up from zero in both our Upscaling and Downscaling Metrics.
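The arithmetic described here can be sketched as follows. This is an interpretation of the description in this article rather than Mux's actual implementation: it assumes the comparison is made on a single linear dimension (for example height in pixels), and the downscaling formula in particular is an assumption, since the article only says that both metrics count up from zero. The function names are hypothetical.

```python
def upscale_percentage(source_px, player_px):
    """How much larger the player is than the source, as a percentage.

    Assumes one linear dimension (e.g. height). Never goes below zero.
    """
    if source_px <= 0 or player_px <= 0:
        raise ValueError("dimensions must be positive")
    return max(0.0, (player_px / source_px - 1.0) * 100.0)


def downscale_percentage(source_px, player_px):
    """How much smaller the player is than the source, as a percentage.

    Assumed definition: the fraction of the source dimension that is lost.
    Never goes below zero.
    """
    if source_px <= 0 or player_px <= 0:
        raise ValueError("dimensions must be positive")
    return max(0.0, (1.0 - player_px / source_px) * 100.0)


if __name__ == "__main__":
    # A 720-pixel-tall source shown in a 1080-pixel-tall player.
    print(upscale_percentage(720, 1080))
    print(downscale_percentage(720, 1080))
```

Under these assumptions a video that is being upscaled always reports zero Downscaling, and vice versa, which matches the idea of counting up from zero in both metrics.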

When looking at your Upscaling or Downscaling metric you can view the Average, Median or 95th Percentile. Like all of our metrics, you can view this for the past 6 hours, 24 hours, 3 days, 7 days, 30 days or for a custom range.
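To show how those three summaries differ, here is a small sketch that computes them over a list of per-view upscaling percentages. The sample values are made up, the `summarize` function is a hypothetical name, and the nearest-rank percentile method is an assumption; the article does not say which percentile method Mux uses.

```python
from statistics import mean, median


def percentile_nearest_rank(values, p):
    """Nearest-rank percentile for an integer p between 1 and 100."""
    if not values:
        raise ValueError("need at least one value")
    ordered = sorted(values)
    # Integer ceiling of p * n / 100, to avoid floating-point surprises.
    rank = (p * len(ordered) + 99) // 100
    return ordered[rank - 1]


def summarize(upscale_pcts):
    """Average, median and 95th percentile of per-view upscaling values."""
    return {
        "average": mean(upscale_pcts),
        "median": median(upscale_pcts),
        "p95": percentile_nearest_rank(upscale_pcts, 95),
    }


if __name__ == "__main__":
    # Made-up upscaling percentages for ten views.
    views = [0, 0, 0, 10, 20, 25, 50, 100, 0, 5]
    print(summarize(views))
```

The median tells you what a typical view looks like, while the 95th percentile exposes the worst-affected views that an average would smooth over.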

As part of the Upscaling and Downscaling metrics, we consider the amount of time the effect occurred for. For pinpoint instances of Upscaling and Downscaling we can look at the Max. Upscaling and Max. Downscaling metrics, which allow you to see the greatest amount of Upscaling or Downscaling, even if it didn't last the whole video.
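One plausible way to combine duration and magnitude is a time-weighted average alongside a simple maximum, sketched below. The exact weighting Mux applies is not described in this article, so this is an assumption, and `summarize_view` and its input shape are hypothetical.

```python
def summarize_view(samples):
    """Time-weighted average and peak upscaling for a single view.

    samples: list of (seconds, upscale_pct) pairs, one per stretch of
    playback during which the upscaling stayed constant.
    """
    total_seconds = sum(seconds for seconds, _ in samples)
    if total_seconds <= 0:
        raise ValueError("view has no playback time")
    weighted = sum(seconds * pct for seconds, pct in samples) / total_seconds
    peak = max(pct for _, pct in samples)
    return weighted, peak


if __name__ == "__main__":
    # 50 seconds in a normal-sized player, then 10 seconds fullscreen.
    average, peak = summarize_view([(50, 0.0), (10, 50.0)])
    print(f"time-weighted: {average:.1f}%, max: {peak:.1f}%")
```

In that example the brief fullscreen spell barely moves the time-weighted figure, but it shows up in full in the maximum.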

Once you have an understanding of how frequently, and how dramatically Upscaling and Downscaling are occurring, you can then take a look at your video delivery pipeline.

If the video you’re delivering is experiencing a lot of Upscaling, that’s quality you could have had. If there is a lot of Downscaling, that’s quality you’ve thrown away. There is no point delivering 1080p video to a device that can’t display more than 720p (e.g. phones, tablets); this would use a lot of the viewer’s bandwidth for no improvement in quality.

You can use this to direct your Adaptive Streaming Strategy, by using metrics to discover which devices your viewers use the most, and optimising your video pipeline for those screen resolutions. This allows Mux to give you a reactive view of your Video Quality as it’s distributed across the internet, but in the end it's up to the host to upload high quality content in the first place.
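As a sketch of how device data might feed into that strategy, the snippet below takes a list of viewer display heights and works out what share of views would be best served by each rendition in a ladder. The input data, the ladder and the `rendition_demand` function are hypothetical; in practice the display sizes would come from your own analytics.

```python
from collections import Counter


def rendition_demand(display_heights, ladder_heights):
    """Share of views best served by each rendition height.

    A view is assigned to the smallest rendition that covers its display,
    or to the tallest rendition if the display is larger than all of them.
    """
    if not display_heights:
        raise ValueError("need at least one view")
    ladder = sorted(ladder_heights)
    counts = Counter()
    for height in display_heights:
        target = next((r for r in ladder if r >= height), ladder[-1])
        counts[target] += 1
    total = len(display_heights)
    return {r: counts[r] / total for r in ladder}


if __name__ == "__main__":
    # Made-up display heights for eight views.
    views = [360, 360, 720, 720, 720, 1080, 1440, 400]
    print(rendition_demand(views, [360, 720, 1080]))
```

A rendition that attracts almost no demand may not be worth encoding, while a large group of views falling between two rungs suggests adding one in that gap.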

For the previous article in this series, check out our post on Video Startup Time. Next we'll be taking a look at Rebuffering!

For a demo of Mux, feel free to reach out to us anytime.