HTTP Live Streaming, also known as HLS, is the most common format used today for streaming video. If you're building a video streaming application today, you should probably use HLS. Apple created the HLS standard in 2009, and it is the required streaming format for iOS devices. Since then, Android has added support, as have most other platforms.

HLS is popular for a number of reasons.

- HLS can play almost anywhere. There are freely available HLS players on every major platform: web, mobile, and TV.

- Apple requires HLS if you want to stream to iOS devices. Because you have to use it anyway, many publishers have decided to use it everywhere.

- HLS is relatively simple. It uses common, existing video formats (MP4 or TS, along with codecs like H.264 and AAC), plus an ugly but human-readable text format (m3u).

- It works over HTTP, so you don't need to run special servers (unlike old-school RTMP or the newer WebRTC protocol). This makes it much easier to scale with HLS than with another protocol.

How does HLS work?

There are three parts to any HLS stream.

First is a master manifest. This is a playlist that lists the different sizes and types available for a single video. A typical master manifest will list 3-7 individual renditions - for example, a 480p rendition, a 720p rendition, and a 1080p rendition. The master manifest is passed into an HLS video player, allowing the player to make its own decisions about what rendition gets played.

Example (skiing.m3u8):

#EXTM3U

#EXT-X-STREAM-INF:PROGRAM-ID=1,BANDWIDTH=2000000,CODECS="mp4a.40.2, avc1.4d401f"

skiing-720p.m3u8

#EXT-X-STREAM-INF:PROGRAM-ID=1,BANDWIDTH=375000,CODECS="mp4a.40.2, avc1.4d4015"

skiing-360p.m3u8

#EXT-X-STREAM-INF:PROGRAM-ID=1,BANDWIDTH=750000,CODECS="mp4a.40.2, avc1.4d401e"

skiing-480p.m3u8

#EXT-X-STREAM-INF:PROGRAM-ID=1,BANDWIDTH=3500000,CODECS="mp4a.40.2, avc1.4d4028"

skiing-1080p.m3u8

The master manifest lists multiple media manifests. Each media manifest represents a different rendition of video - a unique resolution, bitrate, and codec combination. For example, one media manifest describes 1080p video at 5 Mbps, while another describes 720p video at 3 Mbps.
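To make the structure concrete, here is a minimal Python sketch of reading a master manifest into a list of renditions. It is an illustration only, not how any particular player does it: the function name `parse_master` is made up for this example, and it handles only the `#EXT-X-STREAM-INF` tag shown above.

```python
import re

MASTER = """#EXTM3U
#EXT-X-STREAM-INF:PROGRAM-ID=1,BANDWIDTH=2000000,CODECS="mp4a.40.2, avc1.4d401f"
skiing-720p.m3u8
#EXT-X-STREAM-INF:PROGRAM-ID=1,BANDWIDTH=375000,CODECS="mp4a.40.2, avc1.4d4015"
skiing-360p.m3u8
"""

# KEY=VALUE pairs; VALUE is either a quoted string (which may contain commas)
# or an unquoted token that runs up to the next comma.
ATTR_RE = re.compile(r'([A-Z0-9-]+)=("[^"]*"|[^,]*)')


def parse_master(text):
    """Return one dict per rendition listed in a master manifest (hypothetical helper)."""
    renditions = []
    pending = None  # attributes from the most recent #EXT-X-STREAM-INF tag
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        if line.startswith("#EXT-X-STREAM-INF:"):
            attrs = line.split(":", 1)[1]
            pending = {key: value.strip('"') for key, value in ATTR_RE.findall(attrs)}
        elif not line.startswith("#") and pending is not None:
            # The first non-tag line after the tag is the media manifest URI.
            renditions.append({
                "uri": line,
                "bandwidth": int(pending["BANDWIDTH"]),
                "codecs": pending.get("CODECS", ""),
            })
            pending = None
    return renditions


for rendition in parse_master(MASTER):
    print(rendition["uri"], rendition["bandwidth"], rendition["codecs"])
```

The one subtle part is the quoted CODECS attribute, which contains a comma, so splitting the attribute list on commas alone would break it.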

These media manifests are also playlists, but instead of listing other manifests, they list URLs to short segments of video. Most often, these segments are between 2 and 10 seconds long, and packaged in the MPEG-TS format, though fragmented MP4 is supported in newer versions of the HLS spec. The reason these files are segmented (or fragmented) is so that a video player can easily switch between renditions in the middle of playback - for example, if bandwidth gets better or worse.
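Rendition switching can be sketched in a few lines. The Python below picks the highest-bitrate rendition that fits inside a fraction of the measured throughput. The `pick_rendition` name and the 0.8 safety factor are assumptions made for illustration; real players such as HLS.js or AVPlayer use more elaborate heuristics (buffer level, recent history, and so on).

```python
# Bandwidth values taken from the skiing.m3u8 example.
RENDITIONS = [
    {"uri": "skiing-720p.m3u8", "bandwidth": 2000000},
    {"uri": "skiing-360p.m3u8", "bandwidth": 375000},
    {"uri": "skiing-480p.m3u8", "bandwidth": 750000},
    {"uri": "skiing-1080p.m3u8", "bandwidth": 3500000},
]


def pick_rendition(renditions, measured_bps, safety=0.8):
    """Pick the best rendition for the measured throughput (hypothetical helper).

    `safety` is an assumed headroom factor: only that fraction of the measured
    throughput is spent, leaving room for fluctuations.
    """
    budget = measured_bps * safety
    ordered = sorted(renditions, key=lambda r: r["bandwidth"])
    best = ordered[0]  # fall back to the lowest rendition if nothing fits
    for rendition in ordered:
        if rendition["bandwidth"] <= budget:
            best = rendition
    return best


# A player would re-run this at each segment boundary as its throughput estimate changes.
for bps in (300_000, 1_000_000, 3_000_000, 10_000_000):
    print(bps, "->", pick_rendition(RENDITIONS, bps)["uri"])
```

Because every rendition is cut into segments at the same points in time, the player can act on a new choice at the next segment boundary instead of restarting the video.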

Example (skiing-480p.m3u8):

#EXTM3U

#EXT-X-TARGETDURATION:10

#EXT-X-VERSION:3

#EXT-X-MEDIA-SEQUENCE:0

#EXT-X-PLAYLIST-TYPE:VOD

#EXTINF:9.97667,

file000.ts

#EXTINF:9.97667,

file001.ts

#EXTINF:9.97667,

file002.ts

#EXTINF:9.97667,

file003.ts

#EXTINF:9.97667,

file004.ts

So if you want to stream video using HLS, you need to do three things.

- Create the HLS media (segments and manifests). You can do this using an API service like Mux Video or Zencoder, or you can do it by hand with a tool like ffmpeg or Handbrake.

- Host the files. Put them on an HTTP server and put a CDN in front.

- Load the master manifest in an HLS-compatible player. For example, pass the master manifest - skiing.m3u8 - to an HLS-compatible player, like HLS.js or Video.js.
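As a rough illustration of what happens after the player loads a manifest in that last step: segment URIs in a media manifest are usually relative, so the player resolves each one against the manifest's own URL before fetching it. The Python sketch below does that for a shortened copy of the skiing-480p.m3u8 example. The `parse_media` helper and the `cdn.example.com` host are hypothetical.

```python
from urllib.parse import urljoin

MEDIA = """#EXTM3U
#EXT-X-TARGETDURATION:10
#EXT-X-VERSION:3
#EXT-X-MEDIA-SEQUENCE:0
#EXT-X-PLAYLIST-TYPE:VOD
#EXTINF:9.97667,
file000.ts
#EXTINF:9.97667,
file001.ts
"""


def parse_media(text, manifest_url):
    """Return (absolute_segment_url, duration_seconds) pairs (hypothetical helper)."""
    segments = []
    duration = None  # duration from the most recent #EXTINF tag
    for line in text.splitlines():
        line = line.strip()
        if line.startswith("#EXTINF:"):
            # Format is "#EXTINF:<duration>,<optional title>".
            duration = float(line[len("#EXTINF:"):].split(",", 1)[0])
        elif line and not line.startswith("#") and duration is not None:
            segments.append((urljoin(manifest_url, line), duration))
            duration = None
    return segments


# Assumed location of the manifest on your CDN.
segments = parse_media(MEDIA, "https://cdn.example.com/video/skiing-480p.m3u8")
for url, seconds in segments:
    print(url, seconds)
print("total seconds:", sum(seconds for _, seconds in segments))
```

This is also why hosting is straightforward: as long as the manifests and segments keep the same relative layout, you can move the whole directory to another server or CDN without rewriting anything.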

Where does HLS play?

Pretty much everywhere in 2018.

Web: Every major browser, either using an HTML5 video player like Video.js or HLS.js, or via native playback support in Safari.

iOS: Natively supported

Android: Supported through Google's ExoPlayer project

TVs: Roku, Apple TV, Xbox, Amazon Fire TV, PS4, Samsung, LG, etc.

Where does it not play?

Web: Internet Explorer 10 and earlier (0.1% market share at the time of writing).

Tutorial: creating HLS content

For the do-it-yourself approach with ffmpeg see: How to convert MP4 to HLS with ffmpeg and How to convert MOV to HLS with ffmpeg.

The easiest way to get up and running with HLS video is using a service like Mux Video. After you've signed up for a free account, submit your first video via API (e.g. using curl or a tool like Postman or Insomnia).

Example (see the Mux Video docs for more):

# POST https://api.mux.com/video/v1/assets

{

"input": "https://storage.googleapis.com/muxdemofiles/mux-video-intro.mp4",

"playback_policy": "public"

}

Using curl:

curl https://api.mux.com/video/v1/assets \

-H "Content-Type: application/json" \

-X POST \

-d '{ "input": "https://storage.googleapis.com/muxdemofiles/mux-video-intro.mp4", "playback_policy": "public" }' \

-u {ACCESS_TOKEN}:{SECRET_KEY} | json_pp

Example response:

{

"data": {

"status": "preparing",

"playback_ids": [

{

"policy": "public",

"id": "Wxle5yzErvilJ02C13zuv8OSeROvfwsjS"

}

],

"id": "xGwoeLBILTL1D012GHRfIAKlXJCekVaRE",

"created_at": "1522448637"

}

}

Mux Video will encode and package the video to HLS. Note the playback_id - you'll use that in the next step when you actually want to stream the video.
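If you'd rather script this than use curl, the same request can be sketched with Python's standard library. This mirrors the curl call above (HTTP Basic auth, JSON body) and reads the playback ID out of a response shaped like the example. The helper names are made up for this sketch, and nothing is sent until you call `urlopen` with real credentials.

```python
import base64
import json
import urllib.request

MUX_ASSETS_URL = "https://api.mux.com/video/v1/assets"


def build_create_asset_request(access_token, secret_key, input_url):
    """Build (but do not send) the POST request from the curl example."""
    body = json.dumps({"input": input_url, "playback_policy": "public"}).encode("utf-8")
    # curl's -u flag sends HTTP Basic auth; this builds the same header by hand.
    credentials = base64.b64encode(f"{access_token}:{secret_key}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        MUX_ASSETS_URL,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {credentials}",
        },
    )


def first_playback_id(response_json):
    """Pull the first playback ID out of a response shaped like the example."""
    return response_json["data"]["playback_ids"][0]["id"]


# Trimmed copy of the example response above.
EXAMPLE_RESPONSE = {
    "data": {
        "status": "preparing",
        "playback_ids": [{"policy": "public", "id": "Wxle5yzErvilJ02C13zuv8OSeROvfwsjS"}],
        "id": "xGwoeLBILTL1D012GHRfIAKlXJCekVaRE",
    }
}

print(first_playback_id(EXAMPLE_RESPONSE))

# To actually send the request (placeholder credentials, so left commented out):
# request = build_create_asset_request("ACCESS_TOKEN", "SECRET_KEY", "https://example.com/video.mp4")
# with urllib.request.urlopen(request) as response:
#     print(first_playback_id(json.load(response)))
```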

You can also use ffmpeg or another transcoding service to encode your video to HLS.

Streaming HLS content

If you're using Mux Video, streaming the video is simple. Just append the playback_id to https://stream.mux.com/, add the .m3u8 extension, and you're done. For example:

https://stream.mux.com/Wxle5yzErvilJ02C13zuv8OSeROvfwsjS.m3u8

If you aren't using Mux Video, after creating the HLS stream, upload the m3u8 manifests and TS/fMP4 media files to an HTTP storage platform, and configure a CDN to deliver the media segments. You can use a CDN to deliver your manifests as well, but this can be tricky if you're streaming live video, since the live manifests are updated continuously as the live stream progresses.
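If you host the files yourself, two details tend to matter: serving each file with the right Content-Type, and caching live manifests for much less time than segments. The Python sketch below shows one way to express that. The MIME types are the standard ones for HLS manifests, MPEG-TS, and MP4, but the helper name and the cache lifetimes are assumptions you should tune for your own CDN and segment length.

```python
import posixpath

# Standard MIME types for HLS manifests and media segments.
HLS_CONTENT_TYPES = {
    ".m3u8": "application/vnd.apple.mpegurl",
    ".ts": "video/mp2t",
    ".mp4": "video/mp4",
}


def response_headers(path, live=False):
    """Suggest response headers for an HLS file (hypothetical helper).

    The max-age values are illustrative assumptions, not recommendations
    from the HLS spec or any CDN vendor.
    """
    extension = posixpath.splitext(path)[1].lower()
    content_type = HLS_CONTENT_TYPES.get(extension, "application/octet-stream")
    if extension == ".m3u8" and live:
        # Live manifests change every few seconds, so cache them only briefly.
        cache_control = "max-age=2"
    else:
        # Segments and on-demand manifests do not change once written.
        cache_control = "max-age=86400"
    return {"Content-Type": content_type, "Cache-Control": cache_control}


print(response_headers("skiing-480p.m3u8"))
print(response_headers("live/stream.m3u8", live=True))
print(response_headers("file000.ts"))
```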

HLS playback: iOS

To play HLS video on iOS, see the Building a Basic Playback App guide and the iOS playback example in the Mux docs. When you get to Step 4, set the URL to a Mux Playback URL, like this:

guard let url = URL(string: "https://stream.mux.com/{playback_id}.m3u8") else { return }

HLS playback: Android

To play HLS video in an Android application, see Google's ExoPlayer project. While Android's native Media Player supports HLS out of the box, this support depends on the version of Android, and can be buggy depending on software and hardware combinations. As such, Google created ExoPlayer to standardize support for HLS within Android.

ExoPlayer's GitHub repo includes sample applications that should help with streaming HLS. When it comes to creating your media source, pass a Mux Playback URL to the HlsMediaSource.Factory.createMediaSource(uri) call.

HLS playback: Web

Playback of HLS in a web browser is a little bit nuanced. Safari, for instance, supports HLS natively. However, other browsers do not support HLS out of the box, and require the use of a player utilizing Media Source Extensions to support HLS. You can read more about some of the available players in the Popular web players guide.

Follow the instructions for the selected player to load a video. Just be sure to use a Mux Playback URL when loading the source. For instance, see Web Video Playback for an example using HLS.js.

Alternatives

If you don't want to use HLS today, you have one good alternative and a few bad alternatives.

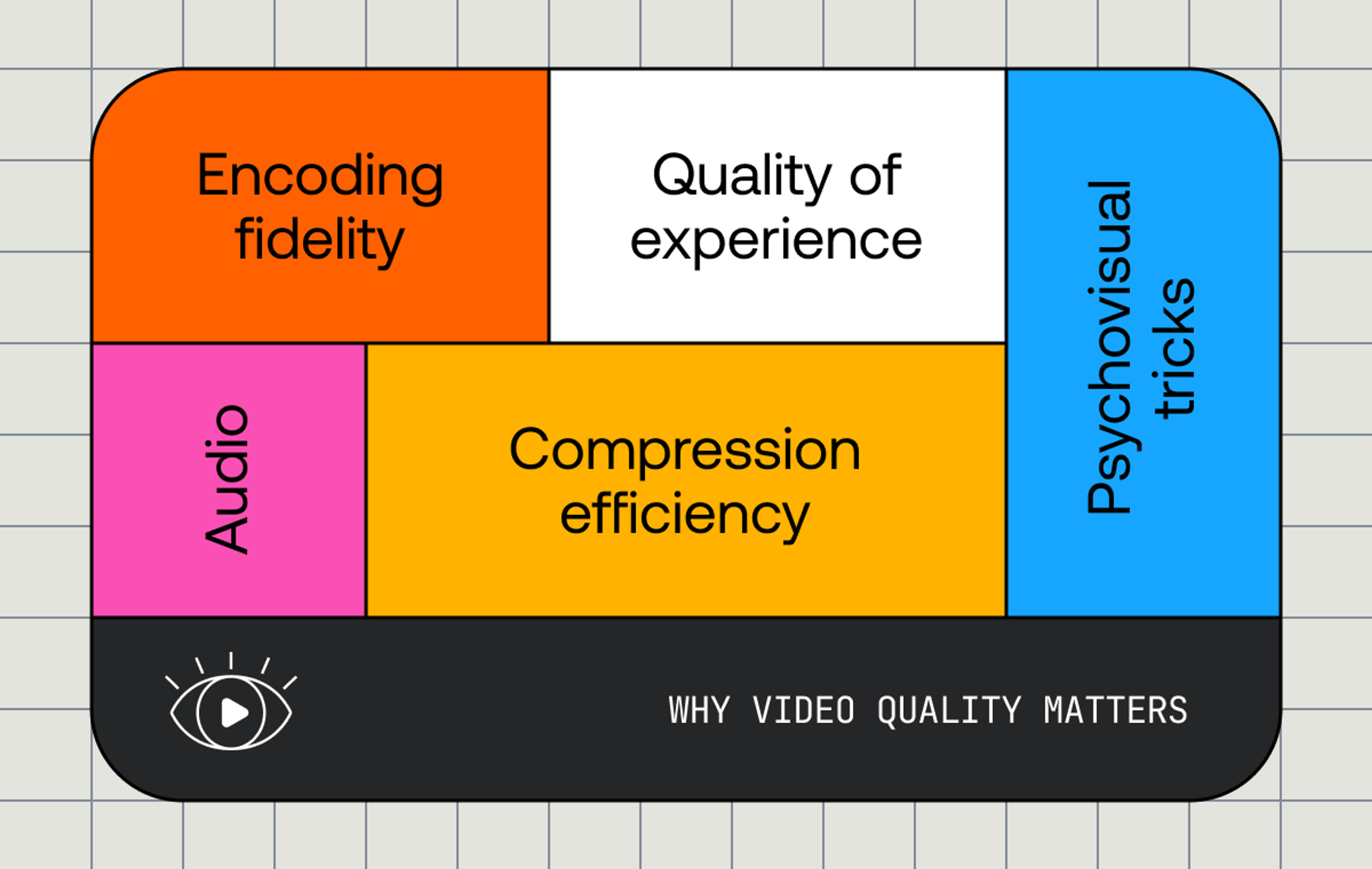

Progressive MP4 is easy to implement but doesn't perform as well in terms of Quality of Experience (as measured by a QoE tool like Mux Data). Just create an MP4 file and let the player download it progressively via range requests. The upside here is simplicity; the downside is that players can't adapt automatically to network conditions. This means viewers with high bandwidth will get lower quality video than they should, while viewers with low (or variable) bandwidth will see rebuffering.
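To see why progressive download can't adapt, it helps to look at what a range request is. The Python sketch below generates the Range header values a client might send to fetch one file in chunks. The `byte_ranges` name and the chunk size are made up for illustration, and real players choose their ranges differently.

```python
def byte_ranges(total_size, chunk_size):
    """Yield HTTP Range header values that cover a file of total_size bytes."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    start = 0
    while start < total_size:
        # The end offset in a Range header is inclusive.
        end = min(start + chunk_size, total_size) - 1
        yield f"bytes={start}-{end}"
        start = end + 1


# Every range points into the same file, so every chunk has the same bitrate.
for header in byte_ranges(2500, 1000):
    print("Range:", header)
```

Each request just asks for a different slice of one MP4, so there is nothing lower or higher quality to switch to. With HLS, each segment URL can come from a different rendition.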

RTMP is pretty much only used as an ingest format today and not a playback format. It's still an acceptable way to stream a live event up to Twitch or Livestream (or Mux Video), but it's no longer used for streaming out of services like these for a number of reasons (including cost, scalability, and the death of Flash).

MPEG-DASH (or just "DASH") is the real alternative to HLS. DASH is conceptually similar to HLS, but uses XML as a manifest format instead of the text-based m3u format. DASH has some advantages over HLS, as well as some disadvantages. Look for a future guide that goes into this in more detail.