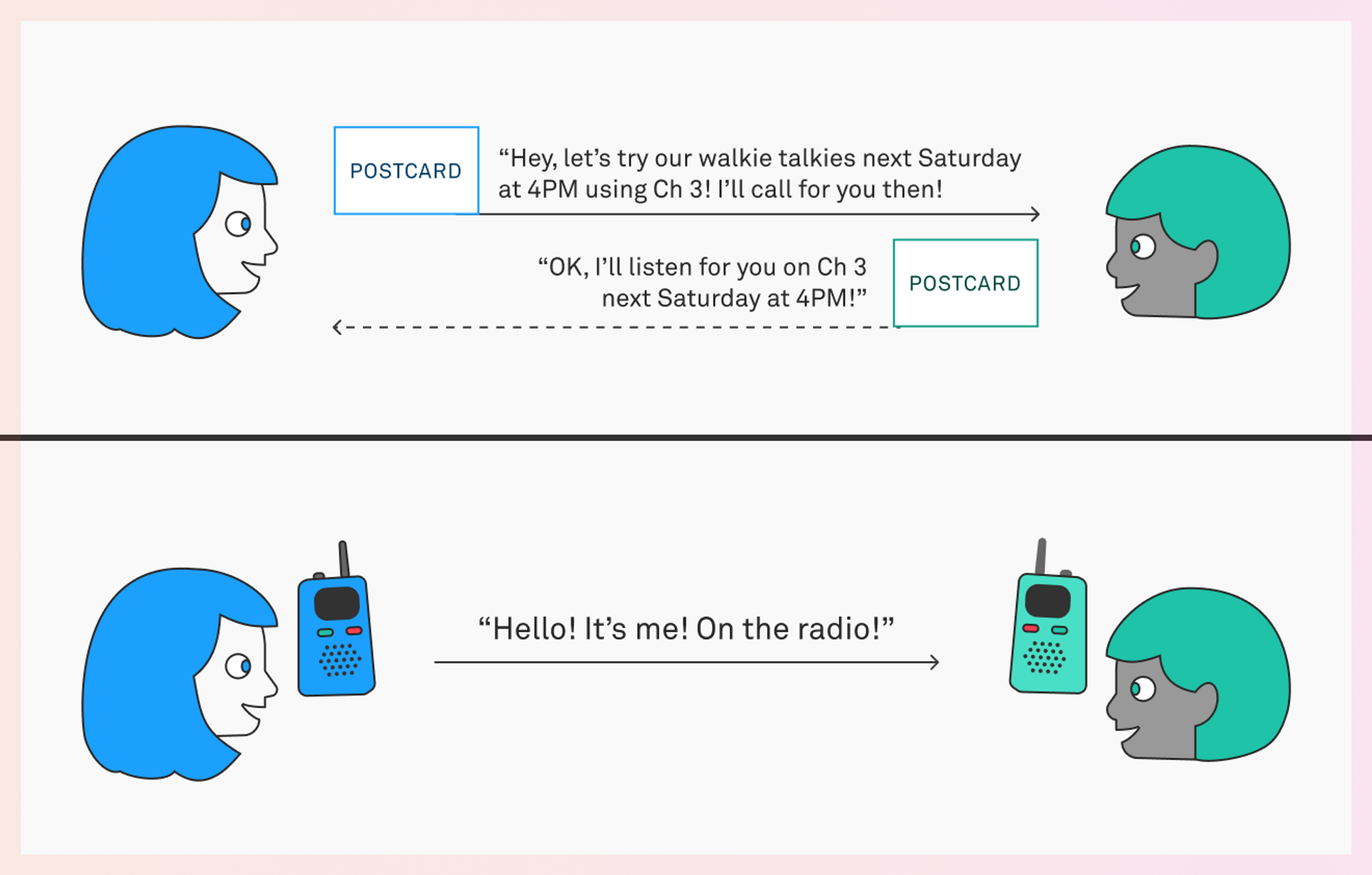

We've come a long way with the advancement of real-time communications. But many of the protocols designed today steal inspiration from tried-and-true communication methods that have been around for years. Imagine this:

It’s your 10th birthday, and you’ve just received exactly what you were hoping for: a brand new set of walkie-talkies just like your friend from down the street has! You can’t wait to see if you’ll be able to talk to each other. You open the packaging, put in some AA batteries (you found some fresh ones a few days ago and stashed them away for just this moment), and power on one of the radios.

“Ch 1,” reads the small LCD screen. Now what?

To actually talk to your friend, you need to exchange some information beforehand, like:

- When to be at the radio listening

- Who’s going to call first (so you don’t accidentally talk at the same time)

- What channel to use

- What “privacy code” setting to use (whatever that means!)

Luckily, your friend is here at the birthday party, so before they go home and get their radio out, you write a note on the back of an envelope: “Listen to Channel 3 @ 4PM”

At the designated time, you power on your radio and press the push-to-talk button: “Hello, can you hear me?”

A moment passes… and then, through a bit of static, the reply comes: “Yes! I can hear you! It’s working!”

You’ve established a real-time communication channel.

But what does this have to do with video, you may ask?

It turns out that this exchange is not so dissimilar to an exchange of information needed to start a WebRTC session.

Think for a moment: What if your friend wasn’t at the birthday party? What if they were on vacation in Florida, and you weren’t sure when they’d be home to try out your new walkie-talkies? You’d need some other method of communication to exchange the information you would have written on the back of that envelope. So it is with WebRTC….

But what is WebRTC?

WebRTC is a collection of protocols and APIs that make up the Web’s answer to Real-Time Communication: a way of sending and receiving media (primarily audio and video) with extremely low latency. You might be familiar with real-time communication tools like Zoom or Google Meet. In fact, Google Meet is built on WebRTC, and Zoom’s web client has used parts of it!

WebRTC is different from the technologies used to stream most of the other on-demand and live video content available on the web today. Chunked streaming protocols like HLS and DASH optimize for high quality and cost-effective distribution by allowing chunks of content to be cached on intermediate HTTP servers like CDNs. The tradeoff for these benefits is that there are limits to how low the overall end-to-end latency can be between a content provider and consumers. At Mux, we’re pushing those limits with low-latency HLS (LL-HLS), but even LL-HLS latency still isn’t low enough for comfortable video chat or teleconferencing.

Enter WebRTC. Drawing on a lineage tracing back through the telecom industry and the domain of VoIP and multicast media distribution, WebRTC is designed to optimize for low latency above all else. Note: This means that it’s harder to achieve the same economies of scale that chunked streaming can provide, and that in the face of network congestion, WebRTC implementations tend to prioritize maintaining low latency over maintaining high resolution. You won’t see buffering or be delayed in hearing the last thing someone said, but the video might not look as clear if you hit a patch of poor cell service.

OK, great! So now I know how WebRTC is different from chunked streaming, and I have a use case where extremely low latency is very important to me. How can I use WebRTC? It’s already implemented in all the major web browsers, so it should just be plug and play. Anything that can “do” WebRTC should be compatible with anything else that can “do” WebRTC, right? …right?

Well… yes, and no. As with many questions about technology, the answer is: It depends.

But what does it depend on?

Signaling. The main hurdle for WebRTC interoperability is signaling.

What is signaling? Why is it a problem? Aren’t there standards?

Well, yes. There are standards. WebRTC specifies requirements for many aspects of the system, but one thing that was deliberately left out of scope is how participants exchange the key bits of information that allow them to establish connectivity and start sending media back and forth.

One of the ideas WebRTC borrows from earlier work on VoIP technology is describing media streams and how to access them using the Session Description Protocol (SDP). Essentially, one participant will send an SDP Offer outlining what it would like to send and/or receive, and the other will send back an SDP Answer confirming what it is capable of sending and/or receiving, just like exchanging information with our friend to arrange to talk to each other on our walkie-talkies.

OK, so this sounds like it’s all pretty well laid out. Where’s the trouble?

Well, the requirements for the content of the SDPs are clear. And the requirements for exchanging them in a particular order and what to do in case of disagreements are also clear. But exactly what transport (or means of exchanging that information) ought to be used between the participants… is left as an exercise for the reader.

Really? Why?

Think back to making arrangements for using your walkie-talkies. If your friend is there in person, you can talk to them or give them an envelope with those details directly. But if they’re in Florida for the month, you might need to call them at the number they left for their grandma’s condo and exchange the information over the phone, making arrangements for the day they get home.

Or maybe you don’t have a phone number, but they sent you a hilarious postcard of an alligator riding a bicycle and shared their grandma’s address, so you could write to them with the information.

There’s a similar variety of options available to WebRTC peers. You might copy and paste SDPs back and forth from one browser tab to another while testing. You might connect to a WebSocket and send an SDP wrapped in a JSON message to be relayed to another user connected to the same WebSocket server. Or maybe the other peer is a media server or selective forwarding unit (SFU). Just like with your friend and the walkie-talkie plans, there are a lot of ways you might want to exchange SDPs.
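To make the WebSocket option a little more concrete, here is a minimal sketch of wrapping an SDP in a JSON message. The message shape (a `type` field plus an `sdp` field) and the function names are assumptions made up for illustration; every signaling service defines its own format.

```python
import json
from typing import Tuple


def wrap_sdp(kind: str, sdp: str) -> str:
    """Wrap an SDP offer or answer in a JSON envelope for relay over a WebSocket.

    The {"type": ..., "sdp": ...} shape is hypothetical, not any service's real format.
    """
    if kind not in ("offer", "answer"):
        raise ValueError(f"unexpected SDP kind: {kind!r}")
    return json.dumps({"type": kind, "sdp": sdp})


def unwrap_sdp(message: str) -> Tuple[str, str]:
    """Return (kind, sdp) from a JSON envelope produced by wrap_sdp."""
    payload = json.loads(message)
    return payload["type"], payload["sdp"]
```

The sending peer would pass the result of `wrap_sdp` to whatever WebSocket client it uses, and the receiving peer would call `unwrap_sdp` on each incoming message before handing the SDP to its WebRTC stack.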

In practice, media at scale usually ends up flowing through SFUs or multipoint control units (MCUs). The companies providing those services will also provide a means of signaling, often using a WebSocket connection as the transport and keeping that connection open for the duration of a session so additional out-of-band signaling can be done. The exact details of what gets exchanged over that WebSocket connection tend to be proprietary and service-specific. With Mux Real-Time Video, for example, we use a WebSocket for SDP exchanges (WebRTC signaling), but we also provide messages for things like active speaker events and information about new participants who have joined a space on that same WebSocket.

It’s not bad to take advantage of a connection you already want to have for these other events, but having so many different proprietary methods of exchanging SDPs can make certain types of interoperability challenging. Nobody is going to want to invest the development effort in building an embedded system (say, an action camera or a drone) that can speak to only one WebRTC platform, for example. In large part due to the lack of interoperable signaling, many would much rather avoid WebRTC altogether and continue using something already widely deployed, like RTMP, as an ingest protocol for ultra-low-latency video streams.

Enter WHIP.

WebRTC-HTTP Ingest Protocol (WHIP)

The WebRTC-HTTP ingest protocol (or WHIP) aims to provide some of the convenience of RTMP while maintaining some of the benefits of WebRTC. Another whole post could be written on why many video engineers would like something better to replace RTMP, but why is it still so prevalent as an ingest protocol?

My opinion? Interoperability and relative ease of configuration.

Think of two open-source tools commonly used to send video to some kind of media server or broadcast platform, ffmpeg and OBS:

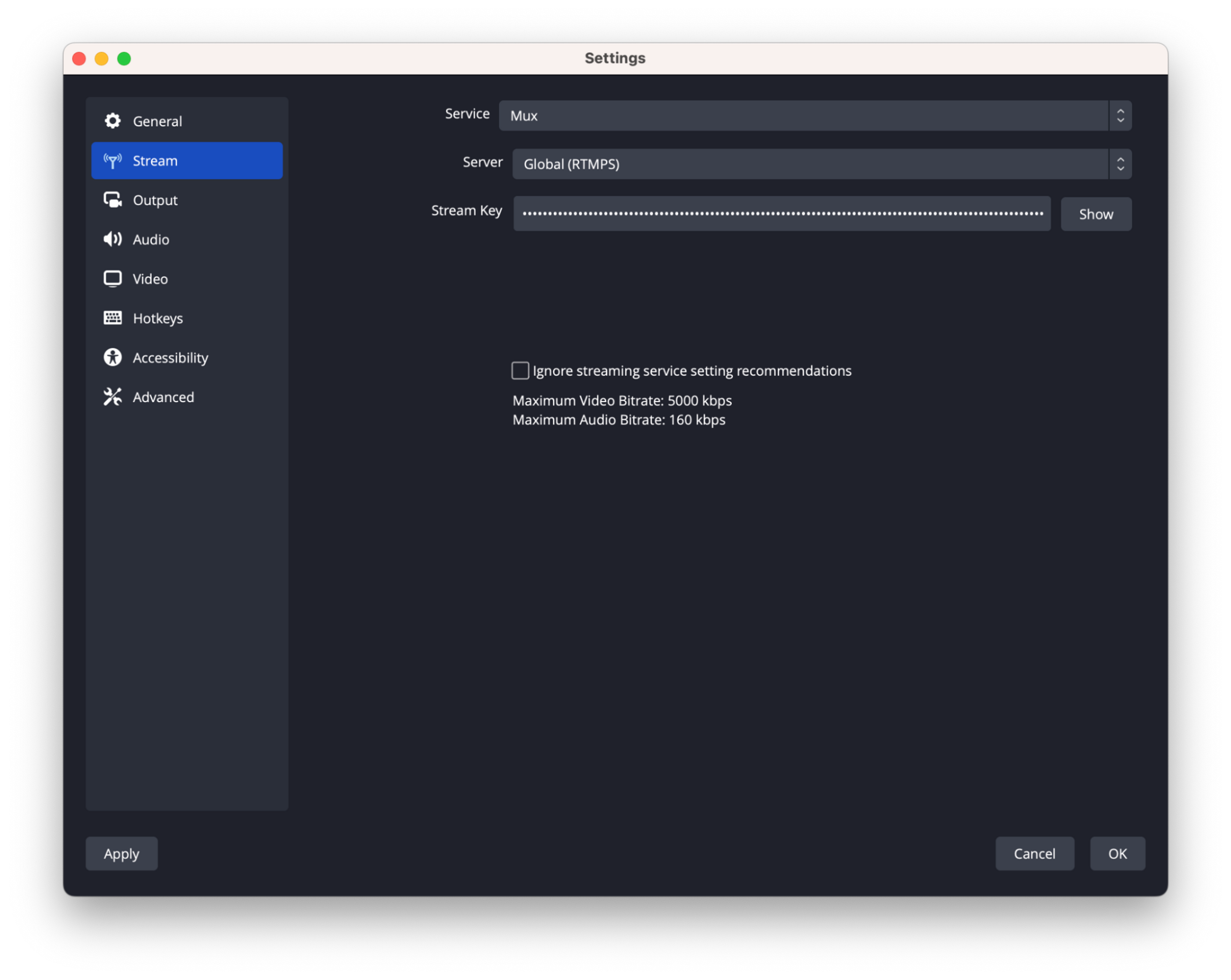

With ffmpeg, you can encode what you need to ingest RTMP into a simple URL:

`ffmpeg … -f flv rtmp://global-live.mux.com:5222/app/{STREAM_KEY}`

Or into another platform the same way:

`ffmpeg … -f flv rtmp://ord02.contribute.live-video.net/app/{stream_key}`

Similarly, in OBS, the base of these URLs can be preconfigured so that most users only need to provide their stream key to start broadcasting.

Setting aside for the moment the complexity of implementing the rest of the WebRTC stack outside of a browser, how would this configuration and go-live workflow look for WebRTC? Without something like WHIP, there isn’t a good answer here. There is no well-defined endpoint to point a video contribution client toward.

How does WHIP work?

Author’s Note: at the time of writing, the latest version of the draft specification was draft-ietf-wish-whip-06.

OK, so now we can see the need for WHIP, and we have a point of reference (with RTMP) for what a relatively straightforward ingest workflow could look like. How does WHIP work?

By narrowing the scope from the broad diversity of potential applications that WebRTC can support down to only ingest or video contribution workflows, WHIP is able to make some useful, simplifying assumptions.

For example, we know that the client who wishes to publish a media stream will initiate the connection and that there will only be a unidirectional flow of media. (In WebRTC parlance, this would be considered a sendonly stream rather than a sendrecv stream.)
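As a rough illustration of that distinction, the sketch below reads the direction attribute of each media section in an SDP. It leans on SDP's rule that `sendrecv` is assumed when no direction attribute is present. The function names are made up for this example, and it is a simplified reading of SDP rather than a full parser.

```python
from typing import List, Tuple

DIRECTIONS = {"sendrecv", "sendonly", "recvonly", "inactive"}


def media_directions(sdp: str) -> List[Tuple[str, str]]:
    """Return (media type, direction) for each m= section of an SDP."""
    default = "sendrecv"  # SDP's default when no direction attribute is present
    sections: List[List[str]] = []
    for line in sdp.splitlines():
        line = line.strip()
        if line.startswith("m="):
            # "m=video 9 UDP/TLS/RTP/SAVPF 96" -> media type "video"
            sections.append([line[2:].split()[0], ""])
        elif line.startswith("a=") and line[2:] in DIRECTIONS:
            if sections:
                sections[-1][1] = line[2:]  # media-level attribute
            else:
                default = line[2:]  # session-level attribute applies to all media
    return [(media, direction or default) for media, direction in sections]


def is_publish_only(sdp: str) -> bool:
    """True if every media section is sendonly, as a publisher's offer would be."""
    directions = media_directions(sdp)
    return bool(directions) and all(d == "sendonly" for _, d in directions)
```

A WHIP server could use a check like `is_publish_only` to sanity-check an incoming offer before answering it, though whether to reject other offers outright is a design choice this sketch does not settle.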

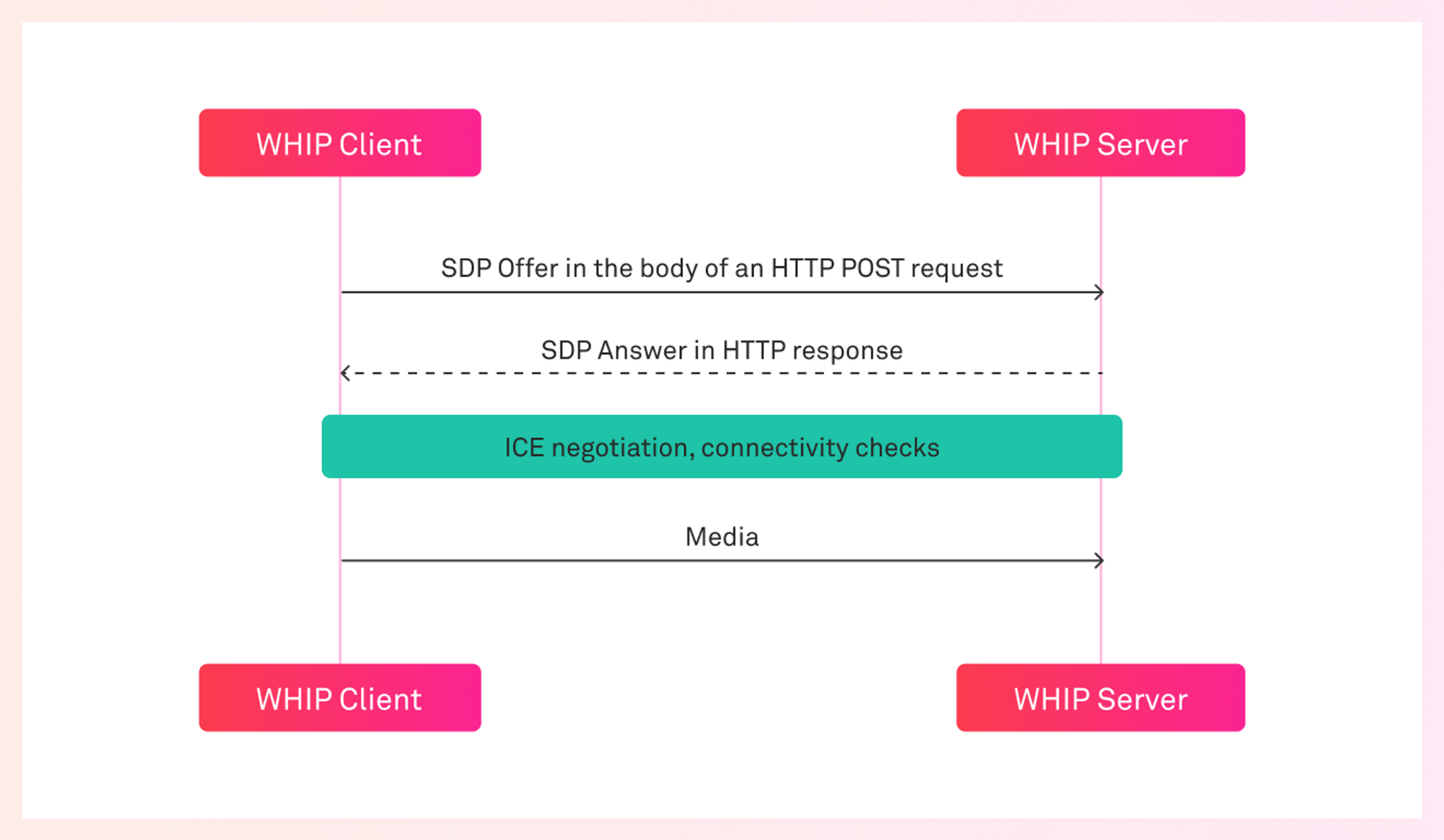

This means that we can have the publisher (a.k.a. the sender or WHIP client) be the party to generate an SDP offer that describes the media they wish to send. Then, the WHIP server can reply with an SDP answer, ICE negotiation can determine the details of where and how the media should be sent, and the media can start flowing.

SDP Offer/Answer : HTTP Request/Response

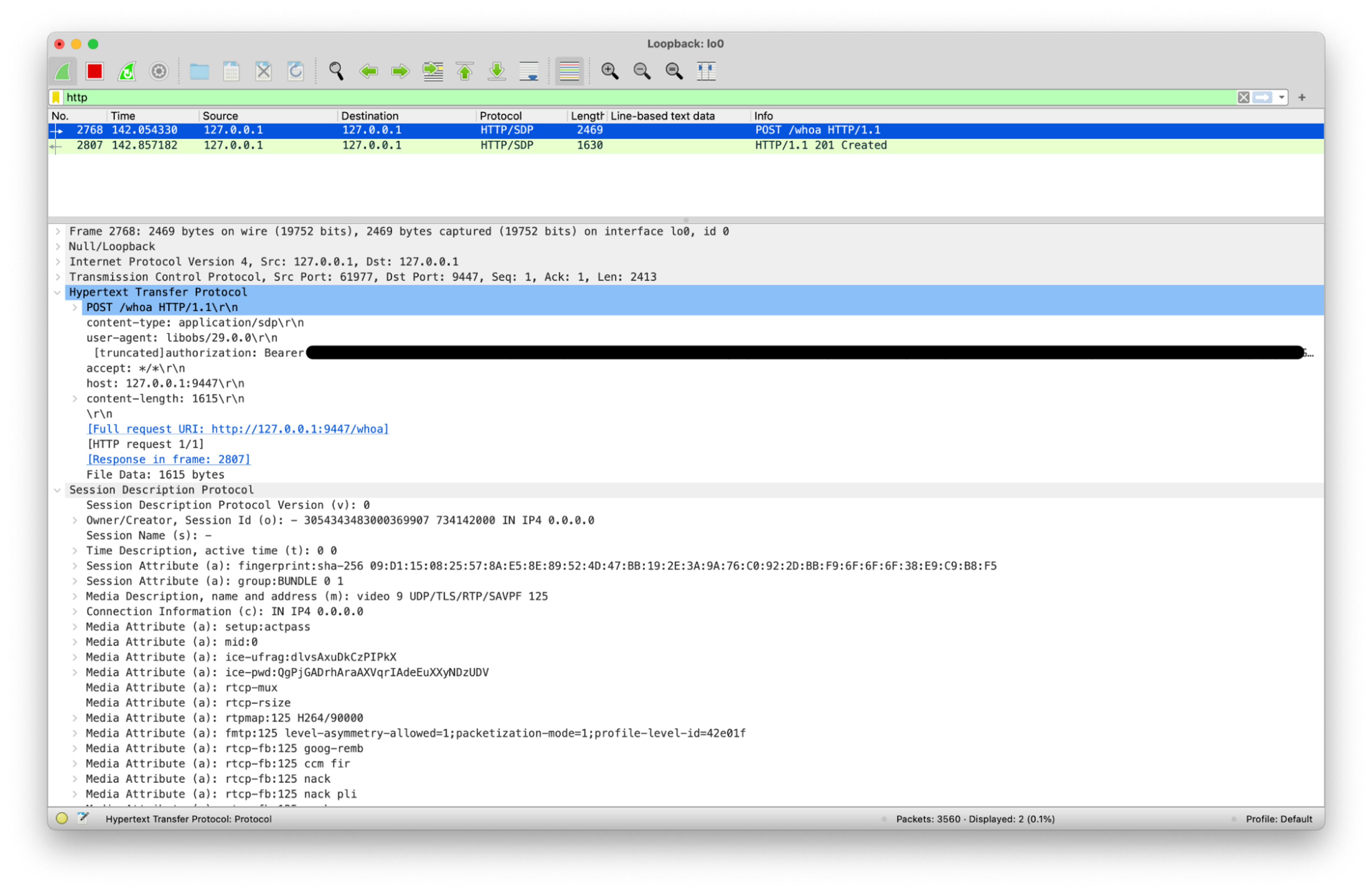

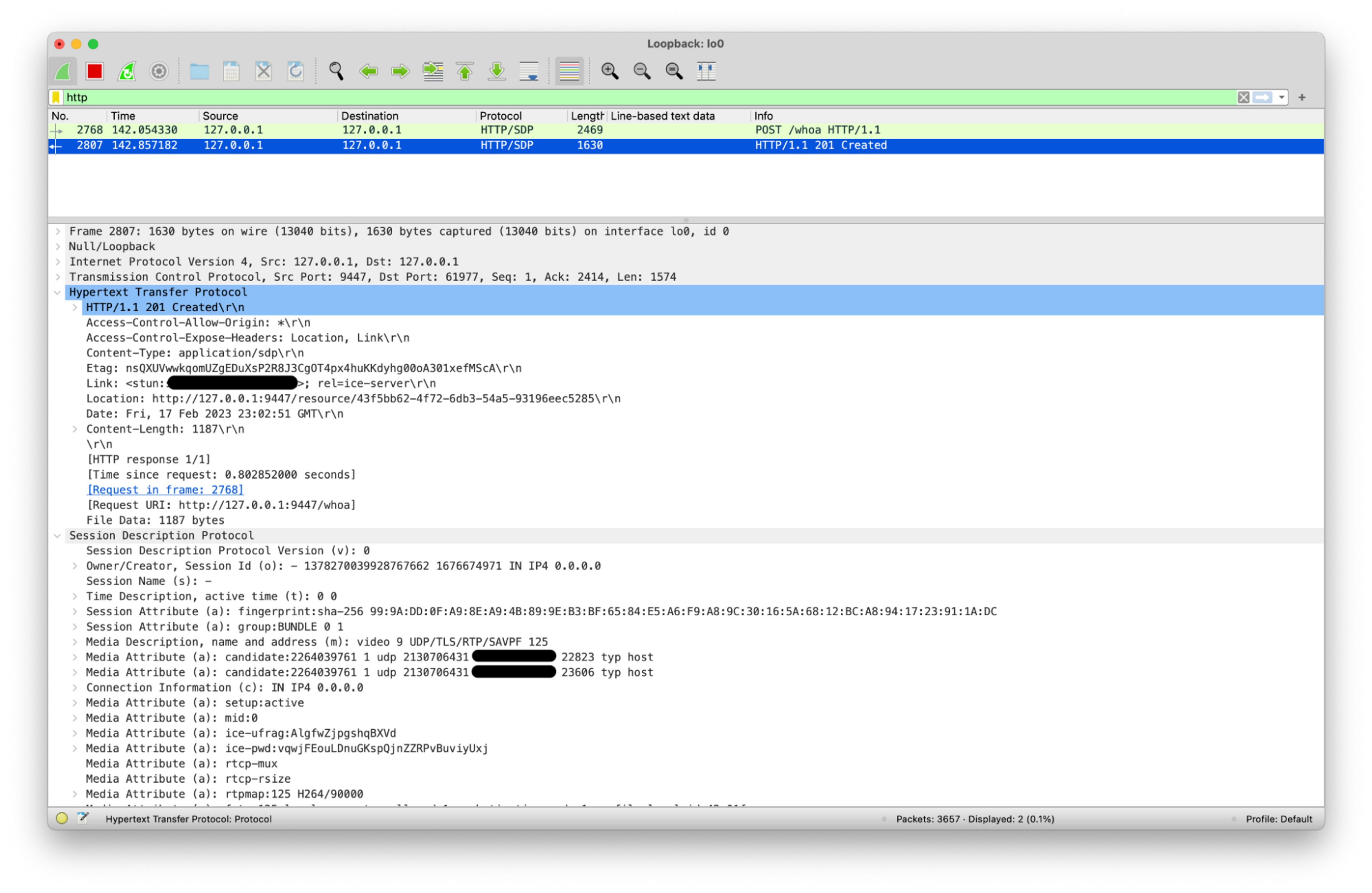

In the simplest case, WHIP essentially uses an HTTP POST request to send an SDP offer and receives the SDP answer in the HTTP response. It doesn’t get much simpler than that!

Here’s what this looks like:
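As a minimal sketch of that exchange from the client side, the code below builds the POST request with Python's standard library. It assumes the behavior described in the WHIP draft: the offer is sent with a `Content-Type` of `application/sdp`, an optional bearer token is used for authentication, and a successful response is `201 Created` with the SDP answer in the body and a `Location` header for the new session. The function names, endpoint URL, and token are placeholders, and generating the offer itself is left to your WebRTC stack.

```python
import urllib.request
from typing import Optional, Tuple


def build_whip_offer_request(
    endpoint_url: str, sdp_offer: str, token: Optional[str] = None
) -> urllib.request.Request:
    """Build (but do not send) the HTTP POST that carries an SDP offer."""
    headers = {"Content-Type": "application/sdp"}
    if token is not None:
        headers["Authorization"] = f"Bearer {token}"
    return urllib.request.Request(
        endpoint_url, data=sdp_offer.encode("utf-8"), headers=headers, method="POST"
    )


def publish(
    endpoint_url: str, sdp_offer: str, token: Optional[str] = None
) -> Tuple[str, Optional[str]]:
    """Send the offer and return (sdp_answer, location_header)."""
    request = build_whip_offer_request(endpoint_url, sdp_offer, token)
    with urllib.request.urlopen(request) as response:
        if response.status != 201:
            raise RuntimeError(f"expected 201 Created, got {response.status}")
        answer = response.read().decode("utf-8")
        return answer, response.headers.get("Location")
```

A call might look like `publish("https://whip.example.com/endpoint", offer, token="my-stream-key")`, where the URL and token are made up. The returned answer is then applied to the local peer connection as its remote description.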

And that’s it! We can now provide a well-known endpoint for WHIP client configuration (similar to RTMP), and WebRTC can be used to ingest media.

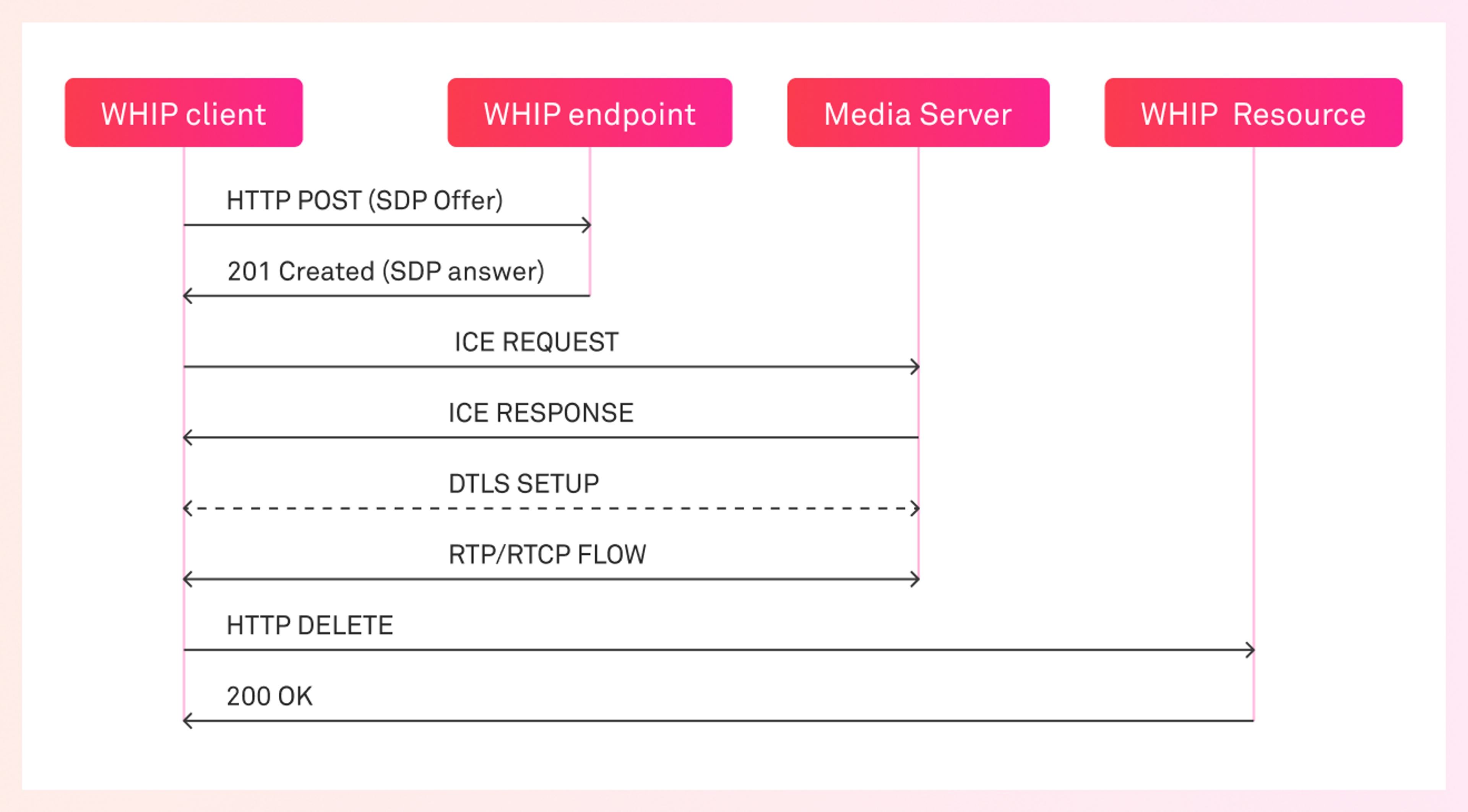

Here’s how the WHIP specification itself represents this:

A few things are worth noting:

The WHIP endpoint can be implemented by a service that is distinct from the media server itself. For Mux Real-Time Video, we already have separate services for media and signaling that communicate on the backend. This allows those components to scale independently, which is a useful property when the load characteristics and resource demands associated with these services may vary.

The media server still handles all of the usual ICE and media traffic — the core WebRTC stack doesn’t require any changes to be compatible with WHIP.

The WHIP resource is just a handle provided when a session is created so that additional communication can be tied back to that specific session. This flow illustrates how the WHIP resource can be used to tear down a session with an HTTP DELETE.
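The teardown step can be sketched in the same way. This assumes the WHIP resource URL arrives in the `Location` header of the `201 Created` response and that it may be relative to the endpoint URL, which is why it is resolved with `urljoin`. The function names and example URLs are hypothetical.

```python
import urllib.request
from typing import Optional
from urllib.parse import urljoin


def whip_resource_url(endpoint_url: str, location: str) -> str:
    """Resolve the Location header against the endpoint it came from."""
    return urljoin(endpoint_url, location)


def build_teardown_request(
    resource_url: str, token: Optional[str] = None
) -> urllib.request.Request:
    """Build (but do not send) the HTTP DELETE that ends a WHIP session."""
    headers = {}
    if token is not None:
        headers["Authorization"] = f"Bearer {token}"
    return urllib.request.Request(resource_url, headers=headers, method="DELETE")
```

Passing the resulting request to `urllib.request.urlopen` would ask the server to end the session. Because the resource URL is resolved rather than assumed, the same code works whether the resource lives on the endpoint's host or on a separate service.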

Implementing WHIP

So what does WHIP look like in practice?

Last fall, the Mux Engineering team had a Hack Week — a time when we break from routine to get revitalized by working on something fun and exciting. The idea is that Hack Week projects should relate to the work we do at Mux in some way, but the requirements are pretty open ended. Engineers are encouraged to collaborate with other teams they don’t usually get to work with and to have as much fun as possible making something to share with everyone at the end of the week.

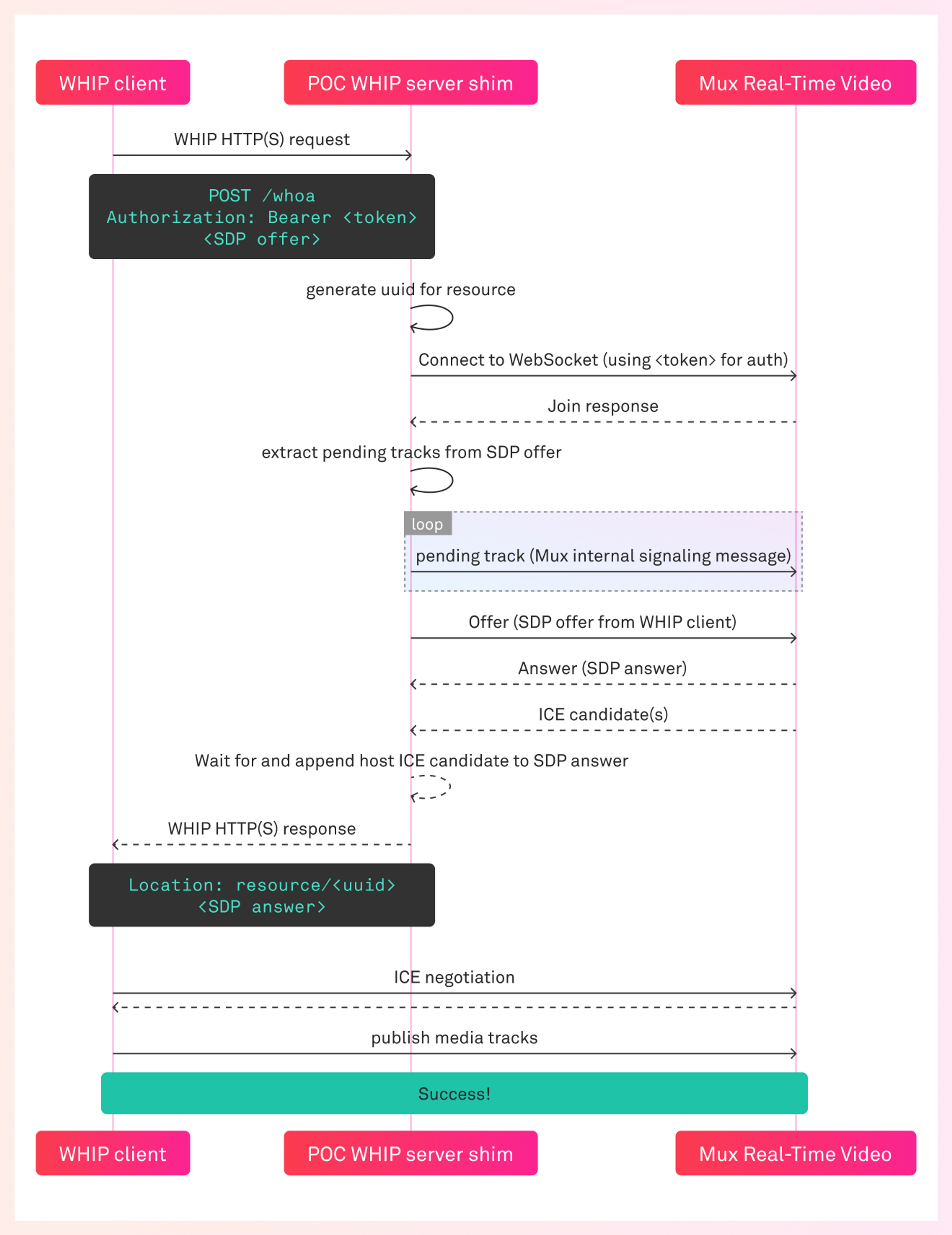

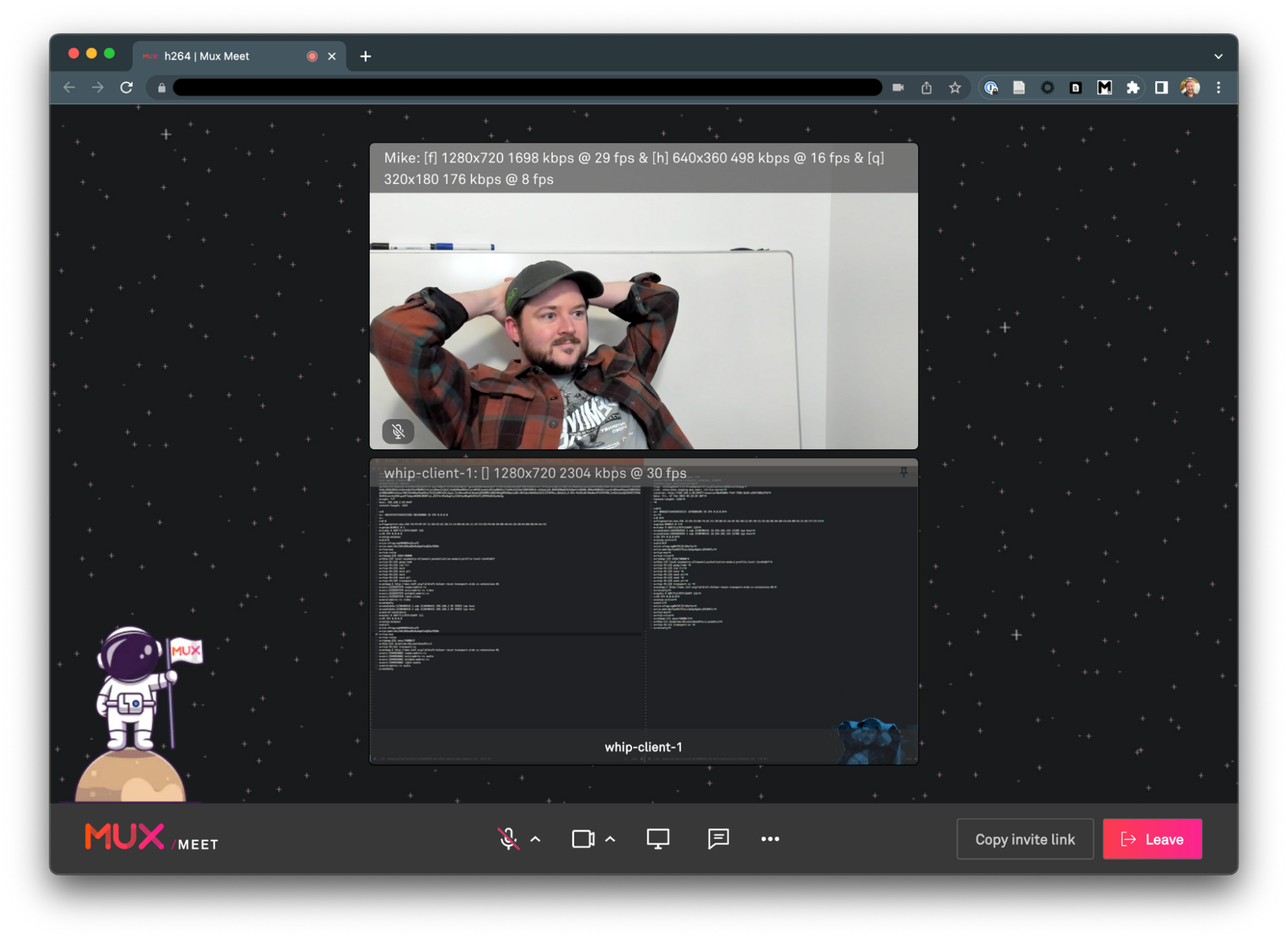

For this past Hack Week, I worked with Phil Cluff and Bobby Peck to build a kind of proof-of-concept adaptor shim to allow WHIP clients to publish video into Mux Real-Time Video spaces.

Essentially, our shim accepts an HTTP POST request from a WHIP client, opens a WebSocket connection to Mux Real-Time Video, uses the SDP offer from the POST request to construct an offer message, and sends that message to the WebSocket. The shim then takes the answer message received from the WebSocket, extracts the SDP answer from it, and sends the SDP answer back to the WHIP client in an HTTP response.
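The core of that translation can be sketched roughly as follows. This is not the Hack Week code. The JSON message shapes, the `SignalingSocket` interface, the resource path, and the `502` fallback are all assumptions for illustration, and the real Mux Real-Time Video messages are service-specific. The WebSocket is passed in as an already-open object so the sketch stays independent of any particular WebSocket library.

```python
import json
from typing import Dict, Protocol, Tuple


class SignalingSocket(Protocol):
    """Hypothetical minimal interface for an already-open WebSocket."""

    def send(self, message: str) -> None: ...

    def recv(self) -> str: ...


def handle_whip_post(
    sdp_offer: str, socket: SignalingSocket, session_id: str
) -> Tuple[int, Dict[str, str], str]:
    """Translate a WHIP POST body into a signaling exchange.

    Returns (status, headers, body) for the HTTP response to the WHIP client.
    """
    # Wrap the SDP offer from the POST body in an (assumed) offer message.
    socket.send(json.dumps({"type": "offer", "sdp": sdp_offer}))

    # A real signaling channel may deliver other events first; this sketch
    # simply treats the next message as the reply.
    reply = json.loads(socket.recv())
    if reply.get("type") != "answer":
        return 502, {"Content-Type": "text/plain"}, "signaling backend did not answer"

    headers = {
        "Content-Type": "application/sdp",
        # Hypothetical resource path the client can later DELETE.
        "Location": f"/whip/resource/{session_id}",
    }
    return 201, headers, reply["sdp"]
```

Keeping the function free of HTTP server and WebSocket library details makes the translation step easy to test with a fake socket, and whichever web framework hosts the shim only needs to map the returned tuple onto its own response type.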

Since this was a Hack Week project, it’s not the cleanest, most elegant, or most complete solution; but it did prove out the concept, and we were able to connect WHIP clients to Mux Real-Time Video this way.

One of the main clients we used in our testing was the WHIP client created by Eyevinn Technology. Phil used this client to build a testing page on codesandbox.io so we could quickly and easily validate changes.

We also tested with Sergio’s original whip-js library.

WHIP is still a WIP

The WHIP specification is a work in progress. It hasn’t quite made it to RFC status yet, but at this point it’s unlikely to undergo any major revisions before publication, and already there are a growing number of experimental implementations available.

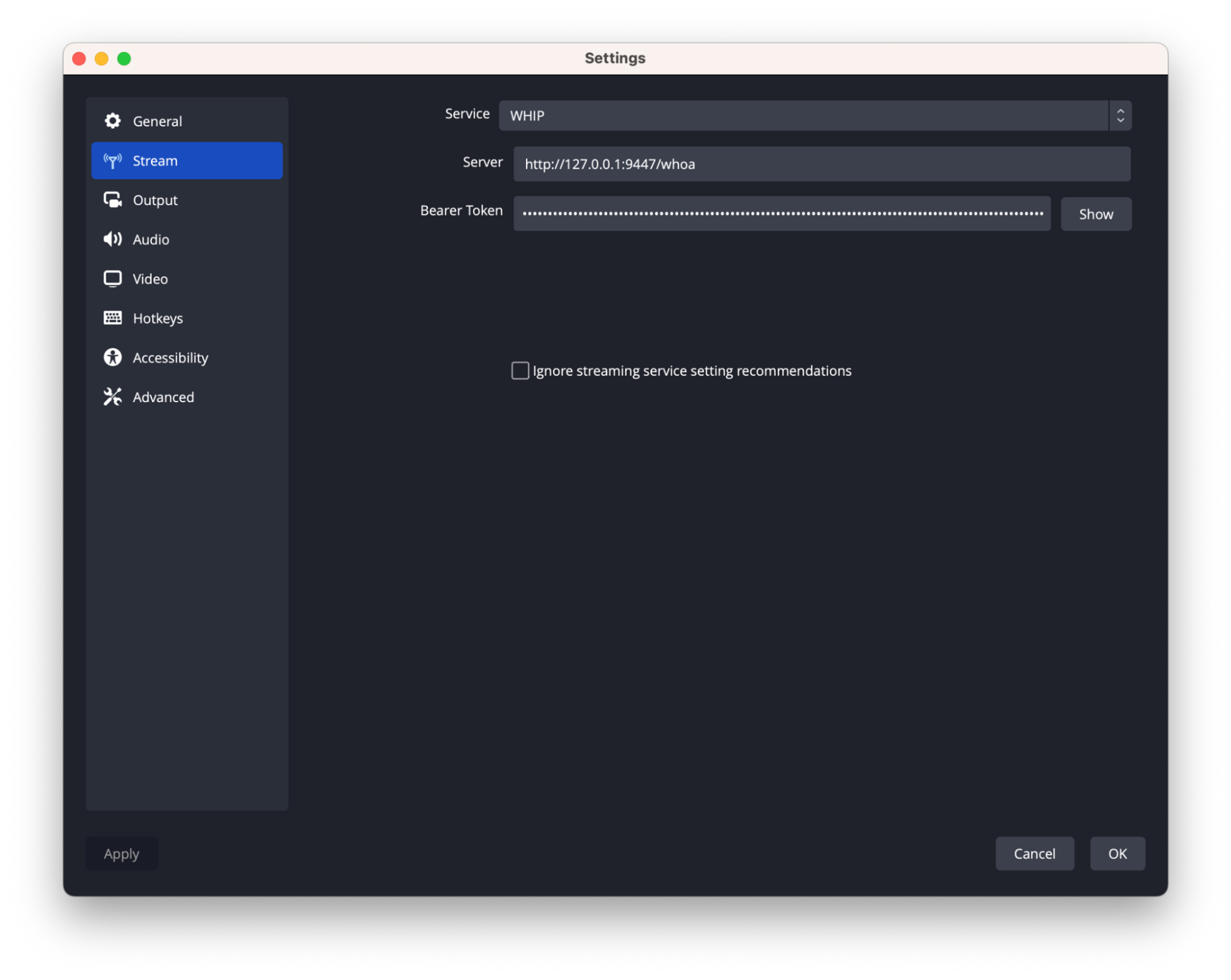

For example, while writing this post, I also tested our shim with a work-in-progress PR for OBS that adds WHIP support as well as with GStreamer, which now has WHIP support.

It’s worth noting that both of these projects are using the new Rust webrtc-rs library for their WebRTC implementation rather than libwebrtc, which for many years had been the only option for C/C++ projects. I’m excited about this new era for the WebRTC ecosystem!

Is WHIP on your wishlist?

What do you think? Do you have a product or service that would benefit from being able to send media over WebRTC with a standardized mechanism for initiating sessions? Maybe it’s a new type of action camera or a new drone. Maybe this gives you a way to relay WebRTC sessions into a provider that can scale out egress to a much larger audience. Maybe you just want an easy way to live-stream walkie-talkie reviews and tutorials with sub-second latency.

Is WHIP something you’d like to use with Mux Real-Time Video? Let us know!