Today, alongside our friends at Touchstream, Datazoom, and Fastly, we’ve published a white paper packed full of tips to help you stream huge-scale events successfully.

Get the white paper here!

Unfortunately, while writing the white paper, I went a little overboard (OK, a lot overboard) and had to cut a lot of content. This is a big topic, and we can’t overstate its value. I didn’t want you all to miss out, so you can read the full, unabridged version of the Mux piece of the white paper below!

We decided to spend our part of the white paper talking about how to run huge-scale live streaming events that work flawlessly. Think Super Bowl or World Cup-scale events - the type where you just can’t afford for them to fail!

Some of these points are things that Mux are doing today, and some of them are things we’ve got coming in the pipeline. Be sure to keep an eye on the Mux blog for more announcements in the coming months!

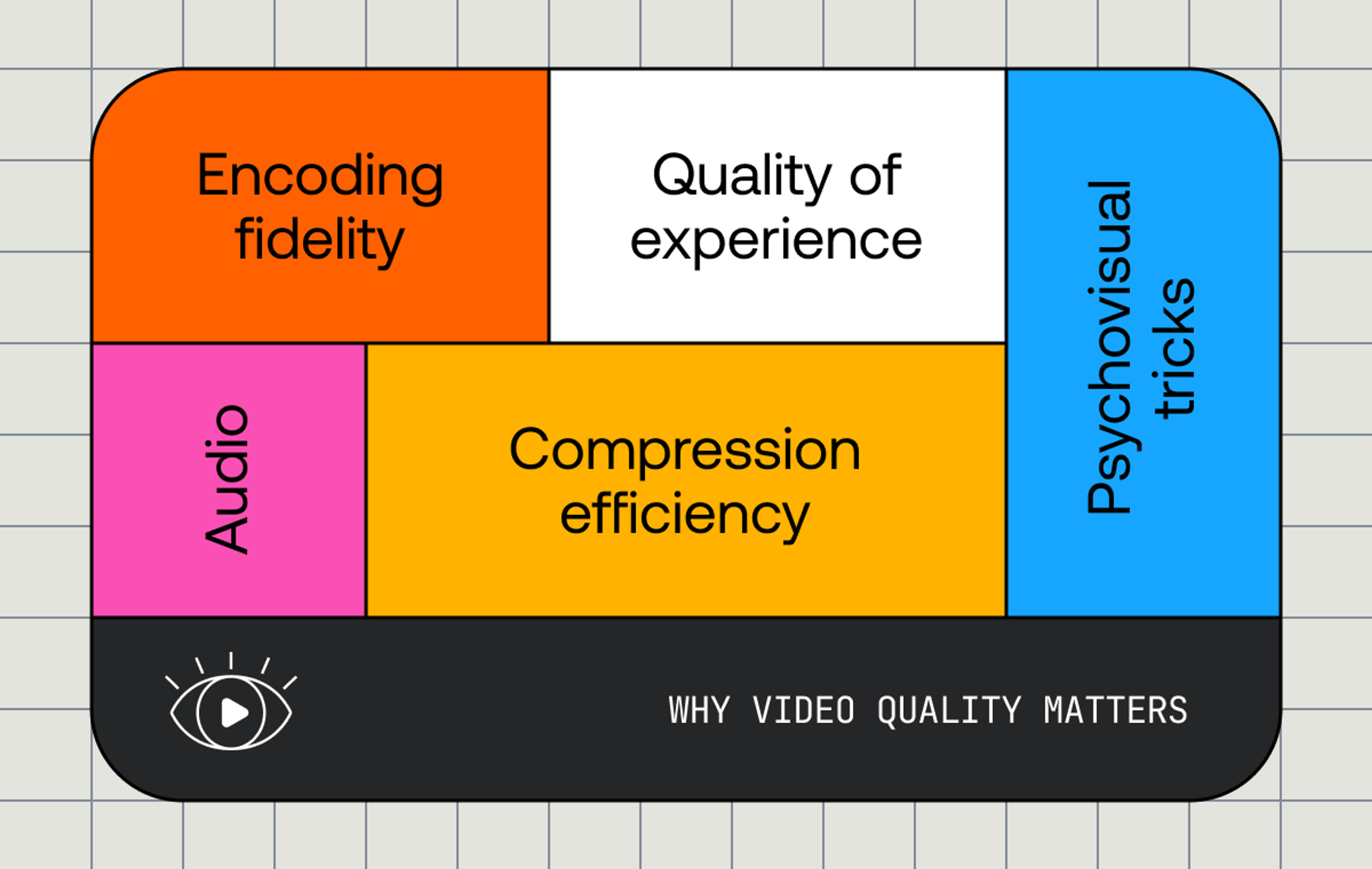

1) Monitor your QoE, and use it to make informed decisions

When you’re streaming a live event at scale, you need to deeply understand the experience your users are having - this is called quality of experience (QoE). This is much more than synthetic monitoring and quality of service (QoS). You can process and aggregate every CDN log you have, and every piece of data coming from your encoder, your packagers and your storage, but this doesn’t tell you what viewers are experiencing. The only way to gather real quality of experience data is by collecting metrics on the user’s own device.

QoE tools, such as Mux Data, let you monitor your viewers’ experience in real time. Not only do they show you when the end user experience changes, they also let you dig in and understand the cause of that disruption.

In general, the five critical metrics you need to be watching during a live event are:

- Playback failure rate - How often are viewers’ streams failing?

- Exits before start - How often are viewers closing the page or app before playback starts?

- Rebuffering rate - How often are users buffering, and how long are they buffering for?

- Video startup time - How long is it taking for the video to start playback?

- Concurrent viewers - How many people are watching at a given time?
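
To make these definitions concrete, here is a minimal Python sketch that aggregates them from per-view records. The `View` fields and the `live_metrics` function are hypothetical, not the Mux Data schema or API, and rebuffering is expressed using one common definition (the share of viewing time spent stalled); real QoE products may define each metric slightly differently.

```python
from dataclasses import dataclass
from statistics import median
from typing import List, Optional


@dataclass
class View:
    """Hypothetical per-view record, roughly what a player-side QoE SDK might report."""
    start_ts: float                # wall-clock seconds when the view began
    end_ts: float                  # wall-clock seconds when the view ended
    failed: bool                   # playback ended in a fatal error
    startup_time: Optional[float]  # seconds to first frame; None if it never started
    watch_time: float              # seconds of actual playback
    rebuffer_time: float           # seconds spent stalled after startup


def live_metrics(views: List[View], now: float) -> dict:
    if not views:
        raise ValueError("no views to aggregate")
    total = len(views)
    started = [v for v in views if v.startup_time is not None]
    played = sum(v.watch_time for v in started)
    stalled = sum(v.rebuffer_time for v in started)
    return {
        "playback_failure_rate": sum(v.failed for v in views) / total,
        # Views that never reached the first frame without hitting an error.
        "exits_before_start": sum(v.startup_time is None and not v.failed for v in views) / total,
        # Share of viewing time spent rebuffering.
        "rebuffer_ratio": stalled / (played + stalled) if played + stalled else 0.0,
        "median_startup_time": median([v.startup_time for v in started]) if started else None,
        # Views whose [start, end) interval contains the current instant.
        "concurrent_viewers": sum(v.start_ts <= now < v.end_ts for v in views),
    }
```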

OK, so “Concurrent Viewers” isn’t technically a QoE metric, it’s an engagement metric, but it’s a super important one. There are other really important metrics you should be tracking in your QoE data too, but we think these are the ones you should be watching like a hawk during live events - so much so that these are the ones we show you on the Mux Data real-time dashboard.

A spike or dip in any one of these metrics indicates that you have a problem somewhere in your delivery chain - but that only tells you the effect of something happening in your stack, not the cause. To truly understand what’s going on, you need to be able to dig into every data point in your graphs to pinpoint the issue: is it a particular ISP? A particular CDN? A particular device? Without the ability to quickly drill down into your QoE data, you can’t make informed decisions about any reactive changes you may wish to make, for example routing more viewers to an alternate CDN.
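
A drill-down is essentially a group-by over the dimensions attached to each view. As a minimal sketch, assuming views are plain dictionaries with hypothetical `isp`, `cdn`, and `failed` keys, the following ranks the values of one dimension by playback failure rate so the worst ISP or CDN surfaces first:

```python
from collections import defaultdict


def failure_rate_by(views, dimension):
    """Return (value, failure_rate, view_count) tuples for one dimension, worst first."""
    totals = defaultdict(lambda: [0, 0])  # dimension value -> [failures, views]
    for view in views:
        bucket = totals[view[dimension]]
        bucket[0] += bool(view["failed"])
        bucket[1] += 1
    rows = [(value, failures / count, count) for value, (failures, count) in totals.items()]
    return sorted(rows, key=lambda row: row[1], reverse=True)
```

Running the same function over `cdn` or a device field quickly shows whether a spike is confined to one slice of your audience; the view count is included so you can ignore buckets too small to be meaningful.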

A great QoE tool shouldn’t just track these data points, it should present them to you in a way that is understandable by everyone in your organization - and this needs to be a key piece of your war-room buildout for your highest profile live events. A war-room is a critical part of the process of operating high scale events, and a great, understandable QoE tool can help enable your customer support, operations, and engineering teams to work together in the war-room environment. The best QoE tools also let you access this data programmatically in real-time, allowing you to drive features such as multi-CDN switching based on the data coming from your end viewers.

Looking to the next logical generation of QoE tools, we’re expecting to see QoE data collection points scattered throughout the ingest and delivery workflow, much as QoS tools have started to be. This will help enable automated full-reference picture quality analysis using metrics like VMAF, SSIM or PSNR, and automated verification of audio/video sync, to maintain the quality of experience all the way from the source through to the end user’s device.
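
Of the metrics named above, PSNR is the simplest to show: it compares a decoded frame with the reference frame it came from, as PSNR = 10·log10(MAX² / MSE), where MAX is the largest possible sample value (255 for 8-bit video) and MSE is the mean squared error between the two frames. The sketch below computes it over flat lists of 8-bit luma samples; it’s purely illustrative, and a real pipeline would compute PSNR, SSIM or VMAF on decoded frames with a dedicated tool.

```python
import math


def psnr(reference, distorted, max_value=255):
    """Full-reference PSNR in dB between two equally sized frames,
    given as flat sequences of sample values."""
    if len(reference) != len(distorted) or not reference:
        raise ValueError("frames must be non-empty and the same size")
    mse = sum((r - d) ** 2 for r, d in zip(reference, distorted)) / len(reference)
    if mse == 0:
        return math.inf  # identical frames: no error at all
    return 10 * math.log10(max_value ** 2 / mse)
```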

2) Tune your ABR ladder for your content and your audience

While per-title solutions are becoming the norm for on-demand video streaming, we’re still a way from a unified approach to delivering a per-scene encoding strategy that works well across all platforms for live video streams. However, this shouldn’t stop you from adopting an optimized ABR ladder.

For large scale planned live streaming events, you have the opportunity in the run up to the event to plan your adaptive bitrate ladder around your test content - at the end of the day, last year’s World Cup looks just like this year’s from an encoder’s point of view!

However, in 2019 and beyond, you should be looking deeper than just the content to decide how your adaptive bitrate ladder should look. You need to know how your viewers are viewing your content to make educated decisions about your ladder layout - this includes taking into account things like the distribution of the viewer-base’s bandwidth capabilities, and the devices that they’re viewing on.

What’s great is that the data points you need to make these decisions are the same data points as the ones that you’re hopefully already tracking for your QoE data - if you analyze that data correctly you should be able to make some really well educated decisions about where to place your renditions in your adaptive bitrate ladder. In the next couple of years we also expect to see the emergence of solutions which can change the adaptive bitrate ladder in real time as the viewer conditions change.
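
As an illustration only, here is one simple heuristic (our assumption, not an established standard) for turning the throughput measurements in your QoE data into rendition bitrates: place one rendition at each of several percentiles of observed viewer throughput, scaled down by a headroom factor so a viewer at that percentile can sustain it. A real ladder would also account for device screen sizes, codec efficiency, and the content itself.

```python
import math


def ladder_from_throughput(samples_kbps, percentiles=(10, 25, 50, 75, 90), headroom=0.8):
    """Hypothetical heuristic: one rendition bitrate (kbps) per throughput percentile."""
    if not samples_kbps:
        raise ValueError("need at least one throughput sample")
    ordered = sorted(samples_kbps)
    ladder = set()
    for p in percentiles:
        # Nearest-rank percentile: smallest sample with at least p% of samples at or below it.
        index = max(0, math.ceil(p * len(ordered) / 100) - 1)
        # Leave headroom so viewers at this percentile aren't running at 100% of their throughput.
        ladder.add(round(ordered[index] * headroom))
    return sorted(ladder)
```

For example, ten viewers measured at 1,000 to 10,000 kbps in 1,000 kbps steps would produce a ladder of 800, 2,400, 4,000, 6,400 and 7,200 kbps.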

3) Leverage multi-CDN and shield your origin

The days of leveraging a single CDN for your business critical live events are over. Customers expect flawless delivery on every platform in every geography, and for every second of every event, which simply isn’t possible with a single CDN vendor. It’s well understood that some CDNs perform well in some regions and for some ISPs, while others perform better in others. Beyond this, using multi-CDN allows you to become resilient to the failure of one or more CDN partners on a local, national, or global scale.

Modern multi-CDN solution architectures are incredibly powerful - platforms like Citrix Intelligent Traffic Management (the startup formerly known as Cedexis) can control request routing at a highly granular level using data from a variety of sources (including Mux Data!), so that each segment request can be routed to the best CDN for that end user at that exact moment. This is known as “mid-stream” CDN switching, and it allows requests to route around congestion on a particular ISP or pinch point, as well as around a more general CDN failure. However, multi-CDN and CDN switching come with two major challenges: cost and origin scale.
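
The decision a mid-stream switching platform makes can be sketched as a per-segment choice with some hysteresis. In this minimal sketch, `scores` is a hypothetical map from CDN name to a recent error or rebuffering score for the viewer’s ISP and region (lower is better), of the kind you might derive from QoE data; it is not the API of Citrix ITM or any other product.

```python
from typing import Dict


def pick_cdn(scores: Dict[str, float], current: str, switch_margin: float = 0.1) -> str:
    """Choose the CDN for the next segment request.

    Stay on the current CDN unless another one is better by more than
    switch_margin; jump straight to the best CDN if the current one has no score."""
    best = min(scores, key=scores.get)
    if current in scores and scores[current] - scores[best] <= switch_margin:
        return current
    return best
```

The margin keeps viewers from flapping between CDNs on small fluctuations, which would otherwise churn caches and make the QoE data harder to interpret.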

Cost: There are two big impacts on your cost model when you move to using multiple CDNs. The first is that your ability to negotiate your data rate with a particular CDN becomes limited. Most CDNs are more flexible on pricing as the commit volume increases, so as you bring more CDNs into the event, you’re limiting your ability to commit on large volumes. The other, larger challenge comes in the space of origin data egress. Because you have more CDNs reaching back to your origin, you’re going to be egressing many times the amount of data out of that point. Egress data rates also tend to be an order of magnitude more expensive for every gigabyte when compared to edge delivery.

It’s also worth remembering that while there’s a large financial cost to using multiple CDNs, there’s also a significant operational overhead as your team has to manage and operate them. This human cost shouldn’t be underestimated, and should be taken into account as you decide how many CDN partners you’re going to be using in a high scale event - remember that your team size may be a limiting factor here.

Origin Scaling: Because you now have multiple CDNs reaching back to your origin on cache misses, you need to scale your origin up accordingly. At first this doesn’t sound like the end of the world, but when you consider that this might mean scaling your origin by 5x if you bring 5 edge CDNs to the party, it becomes expensive and introduces new scaling challenges for those services.
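
A rough lower bound makes the origin scaling problem concrete. This sketch assumes each CDN pulls every segment of every rendition from your origin exactly once, and that an ideal shield collapses those pulls to one per segment; in practice CDNs often fetch the same object more than once (from multiple POPs or tiers), so real numbers will be higher.

```python
from typing import Sequence


def origin_egress_gb(rendition_kbps: Sequence[float], event_hours: float,
                     cdns: int, shield: bool = False) -> float:
    """Lower-bound origin egress in decimal GB for one live event."""
    kilobits = sum(rendition_kbps) * event_hours * 3600  # one full copy of the ladder
    one_copy_gb = kilobits / 8 / 1_000_000                # kilobits -> kilobytes -> GB
    # Without a shield every CDN pulls its own copy; an ideal shield pulls one.
    return one_copy_gb if shield else one_copy_gb * cdns
```

For example, a four-rendition ladder totalling 13.6 Mbps over a three-hour event is about 18.4 GB per copy, so five CDNs pulling independently means roughly 92 GB of origin egress, against about 18 GB behind an ideal shield.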

Thankfully, there’s an increasingly popular solution to these challenges - leveraging an origin shield. Origin shields are caching layers that sit between your CDNs and your origin, folding down incoming requests from many edge CDNs into one request at the origin level. One great product here is Fastly’s Media Shield, which is one of the few fully supported solutions to building an origin shield today. A great origin shield can reduce origin load by 70 to 80%, even with a well performing edge CDN in front of it.
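
The folding-down behavior a shield relies on is usually called request collapsing or coalescing. The minimal asyncio sketch below illustrates the idea (it is not how Fastly implements Media Shield): concurrent misses for the same path share one in-flight origin fetch, and the result is cached. `fetch_origin` is a hypothetical async callable, and the cache has no TTL or eviction, which a real shield would need.

```python
import asyncio


class CoalescingCache:
    """Collapse concurrent requests for the same object into one origin fetch."""

    def __init__(self, fetch_origin):
        self._fetch_origin = fetch_origin  # hypothetical: async callable, path -> bytes
        self._cache = {}                   # path -> bytes (no TTL/eviction in this sketch)
        self._inflight = {}                # path -> asyncio.Task for a fetch in progress

    async def get(self, path):
        if path in self._cache:
            return self._cache[path]
        if path not in self._inflight:
            # First miss for this path: start the single origin fetch.
            self._inflight[path] = asyncio.create_task(self._load(path))
        # Later misses wait on the same task; shield() stops one cancelled
        # caller from cancelling the fetch for everyone else.
        return await asyncio.shield(self._inflight[path])

    async def _load(self, path):
        try:
            body = await self._fetch_origin(path)
            self._cache[path] = body
            return body
        finally:
            self._inflight.pop(path, None)


origin_calls = []


async def fake_origin(path):
    origin_calls.append(path)
    await asyncio.sleep(0.01)  # simulate origin latency
    return b"segment bytes for " + path.encode()


async def demo():
    cache = CoalescingCache(fake_origin)
    # 50 simultaneous edge misses (e.g. from several CDNs) for the same live segment.
    return await asyncio.gather(*(cache.get("/live/720p/seg_1000.ts") for _ in range(50)))


results = asyncio.run(demo())
```

In the demo, all 50 requests receive the same bytes while the origin sees a single fetch.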

The biggest problem with leveraging an origin shield is that of introducing a single or small number of points of failure into your infrastructure. You should consider testing the best way to mitigate this risk - that could include folding down your CDN origin requests into two shield data centers, rather than one, or having an automated or manual fallback strategy, where in the case of a failure of the origin shield, CDNs would swap back to using direct origin egress.
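
The fallback strategy can be as simple as an ordered list of upstreams that each CDN (or your routing layer) walks through. In this sketch the upstream names and the `is_healthy` check are hypothetical; in a real deployment the health signal would come from your own probes, and you’d want alerting whenever the last resort (direct origin egress) is in use, since that’s exactly the load the shield was protecting you from.

```python
from typing import Callable, Sequence

# Hypothetical priority order: two shield locations, then direct origin egress as a last resort.
UPSTREAMS = ("shield-primary", "shield-secondary", "origin-direct")


def choose_upstream(upstreams: Sequence[str], is_healthy: Callable[[str], bool]) -> str:
    """Return the first upstream, in priority order, whose health check passes."""
    for upstream in upstreams:
        if is_healthy(upstream):
            return upstream
    raise RuntimeError("no healthy upstream available")
```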

4) Have redundant ingest, encode, and delivery paths

As we discussed, multi-CDN is critical to the delivery of a high scale live event, but multi-CDN only really helps with failures on the last mile of delivery. For the biggest live events you need to be architecting your end-to-end streaming stack for high resilience. This means that your encoders, storage, packagers, network links, and everything else you can think of, also need to be redundant.

Depending on the scale of your event, this obviously has limits. Are you going to rent redundant satellite uplinks and generators for your CEO round table? Probably not. For the Super Bowl or the World Cup? Most definitely.

For ingest, one thing that makes this challenging is the lack of control you have over how your traffic is routed on the internet. Even if you have 2 redundant encoders, if you can’t get your input to them over disparate internet paths, you can’t survive a network outage or congestion event. Generally the best solution here is to light up multiple fiber connections with different vendors where your signal originates, and make sure that they travel through disparate internet exchanges to their ultimate destination.

With your redundant encoders, it’s important to have them timecode synchronized and input locked. This allows your redundant encoders to make the same encoding decisions, so that segments coming from encoder A and encoder B have continuous timecodes and matching B/P frame references. That way, if a viewer moves from one encoding chain to the other, they don’t experience a visual discontinuity.
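
One way to see why timecode locking matters: if both encoders derive segment boundaries from the same input timestamps, they independently make identical decisions about where each segment starts. This minimal sketch assumes MPEG-TS style presentation timestamps on a 90 kHz clock shared by both encoders, a constant frame rate, and a fixed segment duration; it ignores timestamp wraparound and is not any particular encoder’s logic.

```python
def segment_position(pts: int, frame_ticks: int = 3600,
                     segment_seconds: int = 2, clock_hz: int = 90_000):
    """Map a frame's presentation timestamp to (segment index, starts_segment).

    frame_ticks is one frame's duration on the 90 kHz clock (3600 = 25 fps,
    3003 = 29.97 fps). Timecode-locked encoders see the same pts for the same
    input frame, so they agree on its segment and on where to force a keyframe."""
    ticks_per_segment = clock_hz * segment_seconds
    index = pts // ticks_per_segment
    # The first frame at or after a boundary opens a new segment, so it
    # should be an IDR/keyframe on both encoders.
    starts_segment = (pts - frame_ticks) // ticks_per_segment != index
    return index, starts_segment
```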

If you’re building your main workflow in the cloud it’s important to remember to run your redundant encoders, packagers and storage in disparate regions, and not just availability zones. Thankfully most modern cloud platforms provide you with easy tools to do this, but be careful - some cloud products may not be available in the most convenient regions for where you’re broadcasting or delivering - be sure to check this as you evaluate vendors!

One popular approach we’ve been seeing more of in the industry is fully cross-region packaging and storage architectures, where each encoder outputs chunks of video not only to its own region, but to the alternate region, and vice versa. This is great because it lets you build a fully redundant packaging chain, since your packagers can also access content from your redundant regions.

If you’ve built a fully redundant chain, great! But the first time you use it shouldn’t be when you have a failure - if you have 2 redundant chains, you should be load balancing your viewers between each of those chains in the normal use case. This not only helps you be confident that your redundant service works as expected, it also allows you to keep your caches in every redundant region warm so that a sudden failover doesn’t cause a cascading failure.
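
Load balancing viewers across both chains can be done deterministically, so a given viewer sticks to one chain while it’s healthy. This sketch hashes a hypothetical viewer ID onto a list of chains (the chain names are placeholders) and falls through to the next healthy chain on failure; in practice this decision might live in your CDN routing layer or manifest service.

```python
import hashlib
from typing import Sequence, Set


def chain_for_viewer(viewer_id: str, chains: Sequence[str], healthy: Set[str]) -> str:
    """Sticky split of viewers across redundant chains, with failover."""
    digest = hashlib.sha256(viewer_id.encode()).digest()
    start = int.from_bytes(digest[:8], "big") % len(chains)
    # Try the viewer's home chain first, then the others in order.
    for offset in range(len(chains)):
        chain = chains[(start + offset) % len(chains)]
        if chain in healthy:
            return chain
    raise RuntimeError("no healthy chain available")
```

Because both chains carry real traffic every day, a failover only shifts load onto a chain whose caches are already warm.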

5) Build and foster collaborative vendor relationships

Vendor relationships are a cornerstone of producing a reliable, scalable event. There are a few pieces to this.

First, your vendor relationships at the most fundamental level need to be collaborative, not combative. Both parties need to understand that on high-scale live events they’re in a win-together, lose-together situation. This can be challenging because, at the end of the day, these are business relationships, and cost can play a big part in how a relationship is built. Generally it’s a good idea to separate your cost negotiations from your active engineering relationships, to keep interactions at the engineering level collaborative; that collaboration is critical to high-scale success.

Second, for huge-scale live events, you’re likely going to have multiple competing vendors delivering the same functionality (if not, you might want to read point 3 again!). It’s critical that the win-together, lose-together attitude extends into game day relationships between traditionally competitive vendors. For example, a CDN vendor running into issues for a particular ISP should have no problem with their traffic being routed to a competitor to maintain the QoE for the end viewer.

Third, you need to be co-ordinating and actively planning your live events with your vendors. There are varying schools of thought on what constitutes a large enough event to justify engaging your vendors: some say a few tens of thousands of viewers, others give numbers in terms of gigabits of edge traffic. For huge business critical events, though, you need to make sure that all your vendors are informed of your traffic plans, and actively engaged on the day with established, tested routes of escalation.

In some cases, buying reserved capacity on CDNs is a good idea, but this can prove incredibly costly if you don’t then use that capacity. It can also be hard to estimate requirements meaningfully, since as links become congested, users’ bandwidth can suddenly change, making all estimates meaningless. It’s also important to understand where pinch-points might exist on backbone networks - this is often something you might need to engage ISPs and interchange vendors to understand thoroughly.
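
A first-order estimate for reserved capacity is simply peak concurrent viewers multiplied by the average bitrate actually delivered, split by each CDN’s planned share of traffic. The function names, the traffic shares, and the overhead factor are assumptions for illustration, and any such estimate can still be invalidated on the day by congestion.

```python
from typing import Dict


def peak_edge_gbps(concurrent_viewers: int, avg_delivered_mbps: float,
                   overhead: float = 1.0) -> float:
    """Peak edge egress in Gbps; overhead is a hypothetical safety/retry multiplier."""
    return concurrent_viewers * avg_delivered_mbps * overhead / 1000


def split_by_cdn(total_gbps: float, shares: Dict[str, float]) -> Dict[str, float]:
    """Divide the total across CDNs by their planned share of traffic (shares sum to 1)."""
    if abs(sum(shares.values()) - 1.0) > 1e-9:
        raise ValueError("traffic shares must sum to 1")
    return {cdn: total_gbps * share for cdn, share in shares.items()}
```

For example, one million concurrent viewers at an average of 5 Mbps is 5 Tbps at the edge, or 3 Tbps and 2 Tbps on a 60/40 split between two CDNs.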

If you’ve managed to build a great, collaborative environment between you and your vendors, then for the biggest events in the world you’ll want to build a war room environment. You should have technical representatives from all your vendors in the same room, all working together to solve issues and monitor their services. Having everyone physically in the same room is really important for fostering relationships: no phone bridges, no people talking over each other, just everyone in the room wanting to make sure your event succeeds.

6) Have a plan for when it all goes wrong

However well-formed your resilience and redundancy models are, there’s always the chance that something goes catastrophically wrong. What if you have twice as many viewers as you’re expecting and know your CDN partners can’t handle it?

It’s always worth having a plan for the absolute worst case where you can’t elegantly recover the stream. As Donald Rumsfeld once said, there are, after all, “unknown unknowns”.

There are a few things you can do to mitigate these risks, though. When it comes to running out of bandwidth across multiple partners, you should be ready to drop the top bitrates out of your manifests if needed - this has been done on many large events historically, and as we venture into UHD live territory, it will only become more commonplace. During some UHD live trials, platforms such as BBC iPlayer have also experimented with letting only a small number of users onto the UHD version of the stream in order to protect the QoE for all users of their service.

As we move into a world where low-latency live streaming becomes a dominant force in events such as live sport, it’s likely that we’ll also start to see platforms dynamically controlling end user latency during large scale events to improve cacheability under high load. In particular, over the next few years, while low-latency methodology continues to evolve, there will be an inevitable and challenging tradeoff between scalability, latency, and resilience for live sporting events.

It’s important to think about client side behavior here too. If something does go catastrophically wrong and a service returns 500s for a few seconds or minutes, how well will your application handle that outage? Will it start spamming the service with requests while it’s already overloaded? Will it crash the application entirely? Will it just look like buffering to the user? It’s important to think this through, as many large scale software failures have snowballed due to bad client side behavior interacting with already struggling web services.
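
A standard defense against clients piling onto an overloaded service is capped exponential backoff with jitter, so retries spread out instead of arriving in synchronized waves. This is a minimal sketch, assuming a hypothetical `fetch` callable that raises `ConnectionError` on a 5xx or timeout; a real player would also surface the state to the user, and would likely treat 4xx errors differently.

```python
import random
import time


def fetch_with_backoff(fetch, max_retries=6, base=0.5, cap=30.0,
                       sleep=time.sleep, rng=random.random):
    """Call fetch(); on ConnectionError, retry with capped exponential backoff
    and full jitter (a random wait in [0, min(cap, base * 2**attempt)) seconds)."""
    for attempt in range(max_retries + 1):
        try:
            return fetch()
        except ConnectionError:
            if attempt == max_retries:
                raise  # give up and let the caller show an error state
            sleep(rng() * min(cap, base * 2 ** attempt))
```

The `sleep` and `rng` parameters are injectable so the retry schedule can be tested without actually waiting.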

Time should also be spent thinking about how to communicate with users if there’s a service degradation. Lots of platforms have service status pages, but many users don’t know where to find them. Platforms like Twitter and Facebook are also great ways of communicating with your audience, but you should also consider in-app indication of service related issues. Think “It’s not you, it’s us” in banner form.

7) Practice makes perfect

When you’re running the biggest events in the world, it can be really hard to practice. In events like the World Cup, Super Bowl, Cricket World Cup, or Premier League Final, the lead-up events in the weeks preceding “the big one” are often an order of magnitude smaller than the event you’re building up to.

So how can you test real world scale? Honestly, you can’t practically test for the volume of requests you’re expecting on tier 1 live events. We’re not saying that load testing isn’t a critical part of building huge business critical video platforms - it absolutely is - but testing all your CDN edges at the scale you’re expecting is unrealistic. However, you can absolutely load test your origins, origin shields, and encoders at meaningful scale with lots of publicly available SaaS and self-hosted load testing tools.

What you can do however, is practice things going wrong during smaller events.

There’s no point in having a fully redundant delivery chain if you never exercise that behavior. Netflix first talked about the concept of the “Chaos Monkey” in 2011: a tool that randomly destroys components of their cloud infrastructure to test its redundancy. While an application rebooting one of your redundant encoding chains during a real event might seem a little scary, this is where the industry will be heading in the next couple of years. In the real world this probably isn’t something you’re likely to automate right now, but during run-up events, manually testing your resiliency model is critical.
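
During run-up events, even the choice of what to break can be randomized, Chaos Monkey style, as long as the drill can never take out your last working copy of anything. In this sketch the component inventory and its health flags are hypothetical, and the function only picks a target; actually rebooting it stays a deliberate, manual step.

```python
import random
from typing import Dict, Optional, Tuple


def pick_drill_target(components: Dict[str, Dict[str, bool]],
                      rng=random) -> Optional[Tuple[str, str]]:
    """Pick one (role, instance) to fail on purpose.

    components maps a role (e.g. "encoder") to {instance name: is_healthy}.
    Only roles with at least two healthy instances are eligible, so the
    drill never removes the last working copy of a role."""
    eligible = [
        (role, instance)
        for role, instances in components.items()
        if sum(instances.values()) >= 2
        for instance, healthy in instances.items()
        if healthy
    ]
    if not eligible:
        return None
    return rng.choice(eligible)
```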

Try to test every point where you’ve added redundancy. If you have multiple encoders, reboot one: does the stream freeze? Do the same with your packagers and your CDNs. Make sure that your stream keeps playing under a real failure of a component. You should also always remember to keep an eye on the physical stream while you’re doing this, rather than relying on synthetic monitoring of QoE to tell you if there’s an issue. While these tools are critical for looking at things like rebuffering or segment fetch failures, they aren’t currently great at checking for visual corruption inside video frames, particularly on DRM’d content.

While you should test this in isolation before you test it on a real event, trying it during any lead-up events you have can be hugely valuable, so you can at least see how your infrastructure recovers under some load. It’s also important to use your run-up or test events to familiarize your teams with the tools and processes they’ll be using on game day. Killing an encoder is a great test, but you might not want to tell your team exactly what you’re doing; instead, let them validate your monitoring, tools, runbooks and processes by figuring out exactly which part of the chain you pulled the plug on.