Updated 04/23/24: We now support SRT live ingest protocol. You can read more about it here. We also added User-Agent restrictions to Mux-supported security features.

You did it. Congratulations on getting your app up and running! Now how do you add a video streaming feature?

Maybe you finally got your Next.js or SvelteKit project deployed on Vercel, or maybe you’ve got a whole custom system in the cloud built with serverless functions, queues, object storage, and CDNs. Whether you’re a solo dev making all the decisions or you’re part of a team guided by executive leadership, the product you’re building has a new requirement. So here’s what you need to know about getting video working in your app:

What type of integration do you need?

Every application has different requirements, so it’s impossible to make a blanket recommendation that applies to everyone. How do you know where your app fits within the integration landscape?

- Is it better for your product to support built-in video features, or would it make more sense to embed a third-party platform?

- How much video integration expertise is available to you?

- Do you have access to financing?

These questions help with whittling down the types of providers that could fit into your app and the integration steps to expect when you start building.

Below is a spectrum of video providers loosely grouped by the types of services they offer. As you go right, you shift toward DIY and video that’s built into the app directly (also known as native video, but we’ll steer clear of that term to avoid confusion with native application development). As you go left, you shift toward off-the-shelf (OTC/Big Box) and video that’s embedded into the app but still separate from it.

Of course, as you dive deeper into each provider’s individual feature sets, pricing, and integration steps, their placement on the spectrum gets more complex and blurry. Features are being added as we speak, so the spectrum evolves and changes over time.

If you’re running a small shop with minimal expected video growth, but you still need video as a feature, you might consider going with a free option like YouTube or one of the free tiers on the platform providers. If you have money to spend and don’t really want to get your hands dirty with video, then the enterprise providers could be for you. Now, if you have money and are willing to dive into the video world a bit, then rolling your own video footprint could be valuable, especially if you’re forecasting substantial video growth.

A note on cost. Building the system you need yourself can definitely add up, but some simple setups can help you avoid long-term operating expenses, and can quickly make up for short-term capital expenses. Also, at either extreme on the spectrum above, you may see pricing patterns vary more. The extreme of deploying your own on-prem hardware could save you from paying a continuous third-party bill, but at some point, that cost could turn into large power, cooling, or network bills. At the other extreme, generous free tiers could mean not paying anything for low volumes, and some platforms will even help you generate revenue using their own advertisement features.

DIY: consider the following

Let’s say you want to give it a go. Below is a diagram of the functional components to consider for your DIY footprint. Keep in mind that this could be bundled into a small, local server environment for your own mini pipeline or deployed into a full-scale, clustered, and orchestrated system using Kubernetes and multiple CDNs running on distributed cloud infrastructure. Even with a small server somewhere, you’d likely still want to use a CDN, with your box acting as an origin.

If all of this video stuff isn’t clicking for you, check out howvideo.works for a great breakdown of streaming-related topics and techniques. What’s shown above mimics many of the systems I’ve deployed in the past. This diagram shows the potential topology of a video feature that supports users sending live streams into your app. These streams could come from a remote network or a local hardware setup used to broadcast events (think sports, faith services, and concerts). Deploying and maintaining this type of system would usually call for automation or an Infrastructure as Code solution like Terraform or Ansible.

Component breakdown

Ingress:

Input streams. In the diagram above, the input streams are broken up into Remote Network Streams and Local Wired Streams. User-generated content traverses the internet to reach an ingest point and move on to processing. This type of network stream is typically smaller than a wired uncompressed stream coming out of a control room or video router.

Gateway. In the diagram above, this is split into a Reverse Proxy Gateway and a Video Input Card. In the remote network case, the gateway may be distributed around the world to enable easier uploading. The local wired case mainly applies to a video pipeline that is bundled up all in one appliance. Input protocols like SDI or NDI would arrive on copper or fiber to a video card that then routes the signal to an onboard processor.

Video processing and packaging:

Ingest encode. This step can sometimes include a transcode as part of the normalization process, or just a transmux (container swap instead of a codec change). This may also be where some protocol translation happens if the gateway didn’t already change anything. For video-on-demand (VOD) especially, you would need to consider storage for this step.

Adaptive bitrate. The ABR step combines transrating and transizing to create multiple renditions of the same content. Depending on who you ask and the tools you’re using, this step can be considered part of packaging. For VOD, this can happen ahead of time or just-in-time (JIT).
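To make the idea of "multiple renditions of the same content" a bit more concrete, here is a minimal sketch of how an ABR ladder could be advertised to a player as an HLS multivariant (master) playlist. The ladder values, the `Rendition` type, and the `<name>/index.m3u8` path layout are all assumptions for illustration; real ladders are tuned per content and audience.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    name: str         # hypothetical label, reused as the directory name
    width: int
    height: int
    bitrate_bps: int  # peak bitrate advertised to the player, in bits/second


# Illustrative ladder only; these numbers are assumptions, not recommendations.
LADDER = [
    Rendition("1080p", 1920, 1080, 5_000_000),
    Rendition("720p", 1280, 720, 2_800_000),
    Rendition("480p", 854, 480, 1_400_000),
    Rendition("360p", 640, 360, 800_000),
]


def build_master_playlist(ladder):
    """Return an HLS multivariant playlist listing one variant per rendition."""
    lines = ["#EXTM3U"]
    # Lowest bitrate first, so the listing reads from cheapest to most expensive.
    for r in sorted(ladder, key=lambda r: r.bitrate_bps):
        lines.append(
            f"#EXT-X-STREAM-INF:BANDWIDTH={r.bitrate_bps},"
            f"RESOLUTION={r.width}x{r.height}"
        )
        lines.append(f"{r.name}/index.m3u8")  # assumed path layout
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(build_master_playlist(LADDER))
```

Each variant entry points at a separate media playlist, which is what the segmentation step produces.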

Segmentation. Usually this step is considered part of packaging. Segmentation is where the .m3u8 manifest files get created for HLS (or .mpd files for DASH). The manifest points to the location of the segmented files. Here, the semicontinuous input stream is chopped up into segments (or chunks). Varying the segment size between 2 and 8 seconds will change your latency and network resilience. Again, for VOD, this can happen ahead of time or just-in-time.
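As a rough sketch of what a segmenter writes out, the snippet below builds an HLS media playlist from a list of segment durations. The `segment_<n>.ts` naming is hypothetical, and `rough_latency_seconds` leans on a rule-of-thumb assumption (a player holding about three segments before it starts playing), not a measurement of any particular player.

```python
import math


def build_media_playlist(segment_durations, first_sequence=0, live=True):
    """Return an HLS media playlist for the given segment durations (seconds)."""
    # The target duration must be an integer that covers the longest segment.
    target = math.ceil(max(segment_durations))
    lines = [
        "#EXTM3U",
        "#EXT-X-VERSION:3",
        f"#EXT-X-TARGETDURATION:{target}",
        f"#EXT-X-MEDIA-SEQUENCE:{first_sequence}",
    ]
    for offset, duration in enumerate(segment_durations):
        lines.append(f"#EXTINF:{duration:.3f},")
        lines.append(f"segment_{first_sequence + offset}.ts")  # hypothetical name
    if not live:
        # VOD playlists are complete, so they are closed with an end marker.
        lines.append("#EXT-X-ENDLIST")
    return "\n".join(lines) + "\n"


def rough_latency_seconds(segment_seconds, buffered_segments=3):
    """Very rough latency estimate: segment length times segments buffered."""
    return segment_seconds * buffered_segments


if __name__ == "__main__":
    print(build_media_playlist([6.0, 6.0, 4.5], first_sequence=10, live=False))
    for size in (2, 8):
        print(f"{size}s segments: roughly {rough_latency_seconds(size)}s behind live")
```

Shorter segments pull the estimate down, but they also mean more requests and less room to ride out a network hiccup, which is the tradeoff mentioned above.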

Origin. This is the place to put what you’ve packaged and make it accessible only to your egress portal or CDN. It requires storage for manifest and segment files with a garbage collection policy, and redundancy here can go a long way. Typically, the origin is locked down using a combination of DNS, NAT, ACLs, or firewalls.

Egress:

Delivery controller. Load balancing could simply be provided by DNS, or it could come from a fancier ADC like F5. These days, a cloud load balancer or some sort of gateway with an addressable IP will do the trick. Along with firewalls and ACLs, the ADC tends to act as a network demarcation line for external access.

Content delivery network. A multi-CDN strategy can be used here to facilitate regional redundancy for origins and CDNs. Consider tokenization and cache invalidation strategies for your streams. Avoid caching error responses and stale manifests (or unique ones). This step adds a lot of value to segmented streaming protocols like HLS, since it globally distributes files that can be cached and downloaded at scale.
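One way to express the caching advice above is a small function at the origin that picks a `Cache-Control` header per response. This is only a sketch: the function name, the `per_viewer` flag, and every TTL value are assumptions, and it expects a path with any query string already stripped.

```python
def cache_control_for(path, status, is_live, per_viewer=False):
    """Pick a Cache-Control value for an origin response (illustrative TTLs)."""
    if status >= 400:
        # Never let a CDN hold onto an error response.
        return "no-store"
    if path.endswith((".m3u8", ".mpd")):
        if per_viewer:
            # Unique manifests (for example, stitched per user) must not be shared.
            return "private, no-store"
        # Live manifests change every segment, so keep them very short-lived.
        return "public, max-age=2" if is_live else "public, max-age=3600"
    if path.endswith((".ts", ".m4s", ".mp4")):
        # Segments do not change once written, so they can be cached for long.
        return "public, max-age=86400, immutable"
    return "no-cache"


if __name__ == "__main__":
    print(cache_control_for("/live/720p/index.m3u8", 200, is_live=True))
    print(cache_control_for("/live/720p/segment_42.ts", 200, is_live=True))
    print(cache_control_for("/live/720p/segment_43.ts", 404, is_live=True))
```

The split between short-lived manifests and long-lived segments is what lets a CDN do most of the heavy lifting for segmented protocols.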

Player. This is one of the more obscure parts of the pipeline for most people. To watch your output, at least grab an HTML5 player that can support MSE in the browser (some browsers like Safari can play HLS on their own and don’t use MSE). There are standard elements, Web Components, and React components that can be used for this part. For native mobile, there are go-to players that are practically unavoidable. Players will gather the segments a viewer needs, buffer them in memory, and render content on the screen. Since they’re on the client side, assume that players are globally distributed. Consider these main groups when building: Desktop Web, Mobile Web, Native Mobile, and TV/OTT devices.

With the components now broken down, I’ll mention a couple of other things to consider in the DIY space:

Video modes

On-demand video has its own complexities, but it’s often considered a simplified subsection of live video. Understanding the layout of a live streaming workflow is a nice “two birds, one stone” situation for digesting on-demand workflows. With on-demand, the inputs change to video files, and you’re able to cache more downstream, but this comes with higher storage needs. With on-demand, you can also choose whether to process ahead of time or just-in-time; with live video, you don’t get that choice. The input streams are changing over time, so a lot of what you’re processing and delivering needs to be refreshed, which can lead to higher computational needs and consistent throughput requirements.

Real-time video is in a whole other category. You’d need to make architecture decisions about using an MCU, SFU, or P2P Mesh approach, and you’d have to include signaling servers alongside your STUN and TURN footprint. Whereas real-time video runs on WebRTC, this post is primarily about slower streaming pipelines like RTMP-to-HLS or SRT-to-DASH that are used more for live video. These pipelines typically convert a somewhat continuous stream of bits into a ladder of segmented files with varying qualities. So you may start with a 5 Mbps RTMP stream coming from OBS or FFmpeg that in the end gets delivered as HLS, which facilitates adaptive bitrate switching on the player and caching on a CDN.
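To give a feel for what "adaptive bitrate switching on the player" means, here is a deliberately simple sketch that picks a rendition from measured throughput alone. Real players weigh more signals (buffer level, dropped frames, screen size), and the `safety_factor` of 0.8 and the ladder values are assumptions for illustration.

```python
def pick_rendition(ladder_bps, measured_throughput_bps, safety_factor=0.8):
    """Return the highest bitrate that fits within a safety margin of throughput."""
    budget = measured_throughput_bps * safety_factor
    affordable = [bitrate for bitrate in ladder_bps if bitrate <= budget]
    # If nothing fits, fall back to the lowest rendition rather than stalling.
    return max(affordable) if affordable else min(ladder_bps)


if __name__ == "__main__":
    ladder = [800_000, 1_400_000, 2_800_000, 5_000_000]  # illustrative ladder
    for throughput in (500_000, 4_000_000, 10_000_000):
        print(throughput, "->", pick_rendition(ladder, throughput))
```

Because every rendition is cut into segments at the same boundaries, the player can make this decision again before each segment request.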

Engineering expertise

Engineering resources for this type of work vary across tech and broadcast, but if you have access to this type of team, building your own pipeline could be worth the effort. The challenge to build a well-oiled machine is a great driver for many engineers, and this type of footprint can instill a sense of pride in an operational squad maintaining the system.

Any team working in this space would have to understand how to work with Linux-based distributions, CPU and memory constraints, storage throughput limitations, network configuration, and more. There would need to be monitoring systems put in place and maybe even Direct Connect fiber lines to cloud providers if signals are streaming from a NOC. They may need to peer VPCs to get video across the cloud and open up some firewall ports in the process. Storage tiering would need to be considered to mitigate costs. Database replication and sharding would need to be put in place as well. Overall, redundancy plays a huge role in building these types of systems, so syncing connected components also becomes a concern, along with latency from “glass to glass.” The list goes on and on, but chances are, if you are deploying other systems for your app, you may already deal with a lot of this in other ways.

Subsystems

Besides the fundamental team knowledge mentioned above, there are other subsystems that could be included along with your streaming footprint. Of course, you’ll have your pre-existing server-side backend, with a CMS and maybe some edge workers or serverless functions. Alongside that, there may be QoE beacon collection systems that gather and process playback events for reporting, or a VQ Analysis deployment that assesses the content flowing through your platform. Users could hit a localization and entitlement service to determine what they see and be redirected to a resolved signed URL.

A lot of these subsystems would be tangential to your streaming pipeline, but DAI (SSAI in particular) is an example of a subsystem that can completely change how your streams are delivered. Since ad insertion is happening server side, the ad content is built into the live stream manifest. This requires relatively unique manifests for users depending on your ad campaign’s parameters. Segments of video can still be delivered and cached on a CDN, but manifests will be delivered after a stitcher has manipulated them to include ads. A live workflow like this requires in-band messaging (like SCTE 35), out-of-band messaging (like SCTE 224), a stitcher, and an ad server configured with ad campaigns and access to inventory. As you can see, DAI can quickly become a complex system on its own, but that complexity may be necessary for folks looking to create a FAST channel, for example. Regardless, I bet that Facebook Live is lookin’ pretty good right now, huh?
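Here is a toy sketch of the manifest manipulation a stitcher performs: splicing ad segments into the segment list of a media playlist and marking the boundaries with `#EXT-X-DISCONTINUITY`. In a real workflow the break position would come from a cue such as SCTE 35 and the ads from an ad server; here both are simply passed in, and the function name and segment URIs are hypothetical.

```python
def stitch_ad_break(content_segments, ad_segments, break_after_index):
    """Return playlist lines with ads spliced in after the given content segment.

    Segments are (duration_seconds, uri) tuples.
    """
    head = content_segments[: break_after_index + 1]
    tail = content_segments[break_after_index + 1 :]

    def entries(segments):
        out = []
        for duration, uri in segments:
            out.append(f"#EXTINF:{duration:.3f},")
            out.append(uri)
        return out

    lines = entries(head)
    # Ads are usually encoded separately, so tell the player timestamps reset.
    lines.append("#EXT-X-DISCONTINUITY")
    lines.extend(entries(ad_segments))
    if tail:
        # Mark the switch back from ad content to the main content.
        lines.append("#EXT-X-DISCONTINUITY")
        lines.extend(entries(tail))
    return lines


if __name__ == "__main__":
    content = [(6.0, "c0.ts"), (6.0, "c1.ts"), (6.0, "c2.ts")]
    ads = [(5.0, "ad/viewer123_0.ts")]  # hypothetical per-viewer ad segment
    print("\n".join(stitch_ad_break(content, ads, break_after_index=1)))
```

Only the manifest differs per viewer in this sketch; the content segments keep the same URIs, which is why they can still be cached on a CDN.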

Using a video API or service as a middle ground

The middle section of the spectrum above gets hard to categorize as API services become platforms. Some providers give you more levers than others, but the typical Infrastructure as a Service tool will allow builders to plug and play as needed. Like Mux, these services provide many integration sockets for you to choose what to connect and use.

Beyond this IaaS space, we get further into the off-the-shelf part of the spectrum, which could be a good fit for smaller teams or projects that don’t need as much scale. It could also fit nicely if you want integrated monetization, because the services usually bring traditional prerolls and midrolls into your stream, in addition to supporting call-to-action overlays and paid message pinning in the chat alongside your video.

Otherwise, going for an IaaS tool tends to give you more freedom to scale your video use as needed, without having to lay all the foundation yourself. In this blurred middle between DIY and fully off-the-shelf, you get more control and visibility as well as the ability to build with speed. Instead of redirecting your users to another service for video, having it built in lets you hold onto and curate their journeys as they navigate your app. Check out this post from Patreon on how seamless video features benefit both their creators and their respective audiences.

There are several providers to choose from in this space, but I’m gonna gush over Mux for a bit, since this is our blog after all. We enable the on-demand and live video modes across multiple CDNs with various latency options. We’ve got an awesome customizable player and all kinds of SDKs and CMS integrations. You can check out our fancy new docs for more details on our ever-changing feature list, but here are a few highlighted in the diagram below: auto-generated captions, webhooks, streaming exports for Mux Data, MP4 downloads, and simulcasting.

Here is a video of me walking through that diagram

Note that what is shown here is just a potential footprint for live streaming (RTMP-to-HLS in this case). You definitely don’t need all of this to get video going, but you can grow into more features over time. As mentioned above, you may already have a lot of the backend piece deployed as part of your regular app.

Streaming Exports for Mux Data allow businesses to push QoE metrics to their own Kinesis or PubSub deployments in the cloud as they become available. These metrics can then quickly be funneled into BI tools or redirected to users as part of a live recommendation engine.

We partner with many CMS providers that can run either as serverless services or as local deployments, allowing for flexibility come integration time. The same is true for the functions and queues depicted above: they can be VMs you maintain yourself, or they can be a collection of Lambdas and Kinesis Streams orchestrated via Step Functions or Amazon EventBridge. This backend would be responsible for rate limiting requests to Mux and authenticating with an access token. Some endpoints are built to be accessed from the frontend, like the Engagement Counts API and the Live Stream Health Stats API. These require a JWT to be included with the request, so the client is able to use a tokenized URL that is signed by the backend beforehand.
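To illustrate the general pattern of a backend signing a short-lived token that the client then presents, here is a generic JWT sketch using only the Python standard library and HS256. This is an assumption-laden illustration, not Mux's scheme: the signing algorithm, key type, and claim names that Mux actually expects are described in its docs and are not reproduced here, and the `sub` value below is a made-up placeholder.

```python
import base64
import hashlib
import hmac
import json
import time


def _b64url(data):
    """Base64url-encode bytes without padding, as JWTs require."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(text):
    """Decode base64url text, restoring the stripped padding first."""
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def _signature(signing_input, secret):
    digest = hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()
    return _b64url(digest)


def sign_token(claims, secret):
    """Backend side: produce a compact HS256 JWT for the given claims."""
    header = {"alg": "HS256", "typ": "JWT"}
    parts = [
        _b64url(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, claims)
    ]
    signing_input = ".".join(parts)
    return f"{signing_input}.{_signature(signing_input, secret)}"


def verify_token(token, secret, now=None):
    """Return the claims if the signature is valid and unexpired, else None."""
    try:
        header_b64, payload_b64, sig_b64 = token.split(".")
    except ValueError:
        return None  # not in header.payload.signature form
    expected = _signature(f"{header_b64}.{payload_b64}", secret)
    if not hmac.compare_digest(expected, sig_b64):
        return None
    claims = json.loads(_b64url_decode(payload_b64))
    now = time.time() if now is None else now
    if claims.get("exp", 0) < now:
        return None  # expired, or no expiry set at all
    return claims


if __name__ == "__main__":
    secret = b"keep-this-on-the-backend"  # placeholder secret
    token = sign_token({"sub": "example-id", "exp": int(time.time()) + 300}, secret)
    print(token)
    print(verify_token(token, secret))
```

The point of the pattern is that the secret never leaves the backend; the frontend only ever sees a token with a short expiration window.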

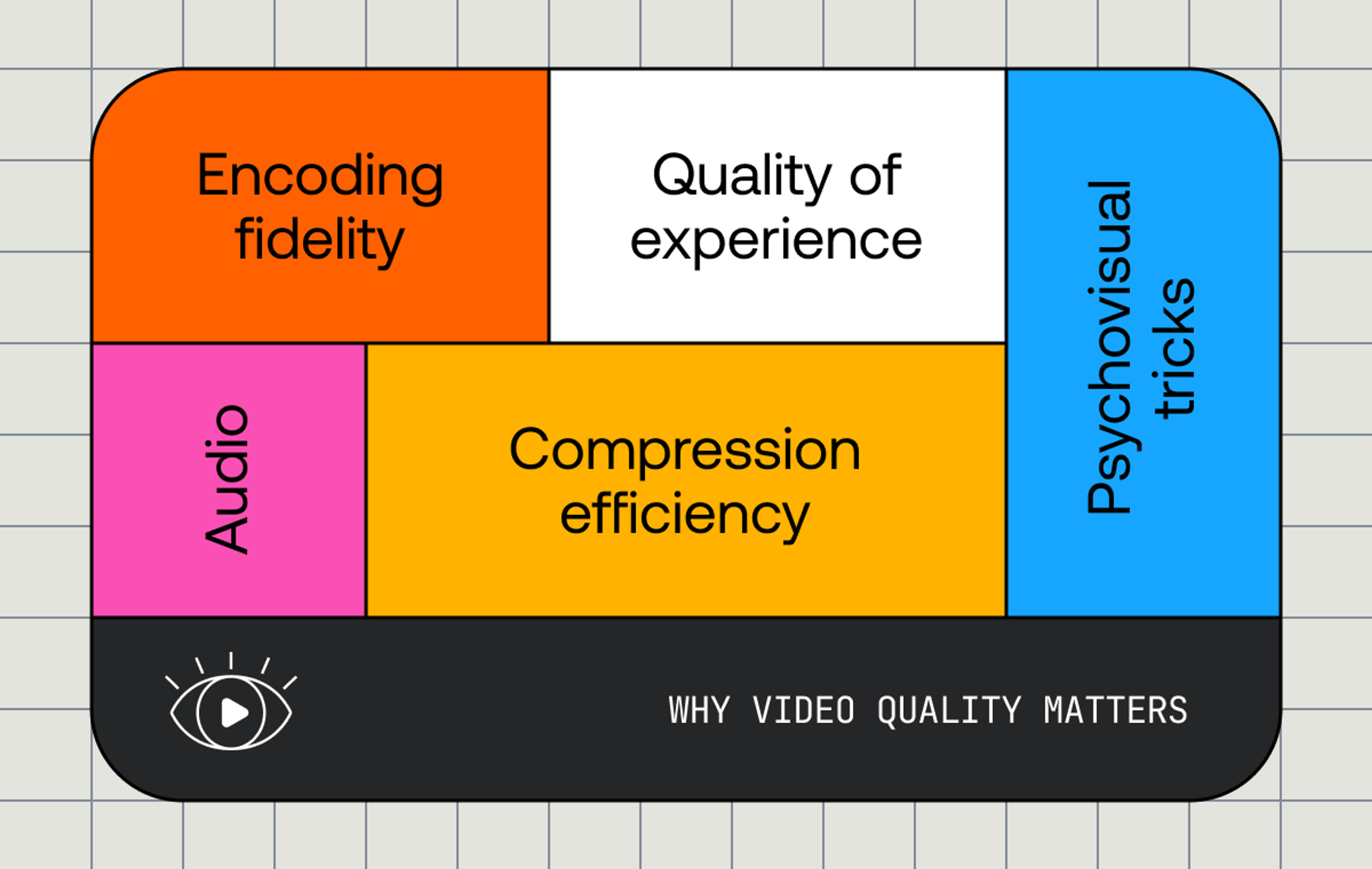

Feature prioritization for your use case

At this point you might be saying: “Great, but what does all this amount to? How do I choose what direction to go in?” To that I ask: “What features do you care about the most?” You could decide to live in that fuzzy middle section of the integration spectrum or lean hard into a certain direction.

The left/off-the-shelf side can basically handle most cases (typically excluding real-time video), but you end up more locked into their tools, and the experience is not as customizable. The right/DIY side could make sense if you need to support some complex SSAI workflows or you have the expertise to build exactly what you need and nothing else. In the middle service/API area, you get a little of both, with a range of changing feature sets, so you have to see what makes sense for you. Once a category is decided on, the actual provider you choose will come down to the individual lists of features.

Below is a short feature table for Mux with respect to the typical topics and feature types that tend to factor into picking a service. As I mentioned, these are always changing. Also, note that we don’t support everything just yet. For example, we don’t come with traditional DRM support (Widevine, PlayReady, FairPlay) yet, and we can’t deliver SSAI built into our streams as of now. I encourage you to follow along with our changelog and keep reading the blog to find out about new features. We’re quickly moving closer to being a platform, but without losing sight of our API-first roots.

| Feature Type | Mux Features |
|---|---|
| Ads | CSAI Tracking |
| Player | Mux Player, Media Chrome |
| QoE | Mux Data Dashboard, SDKs, and Exports |
| Security | Signed Playback Policies including: Referrer Validation, User-Agent Restrictions, and Expiration Windows |
| Video Modes | Mux Assets, Live Streams |

In the end, think about how your app will evolve over time, and consider tying in other third-party partners to handle certain functional components for you.

There's no one-size-fits-all solution to integrating video, so I hope this lays out some options for newcomers entering the video space. Reach out to us if you want to discuss possible video footprints, what they could look like, and tradeoffs to consider.