Video encoding always loses quality. Always.

When your camera saves video, whether that’s your mobile phone or a high-end professional video camera, it takes the raw sensor output and encodes it to a usable video. Then you’re most likely uploading it to a computer and editing a few times. Through all those processes there’s a good chance that it got encoded a second or third time before you even uploaded it somewhere like Mux to stream it to end users. Every time you do that, there’s some quality loss.

Often the quality loss is minimal, and the result looks just as good as the source video to the casual eye. If you use a high enough bitrate, it can even be virtually indistinguishable from the original to an expert eye, but even then some quality is lost.

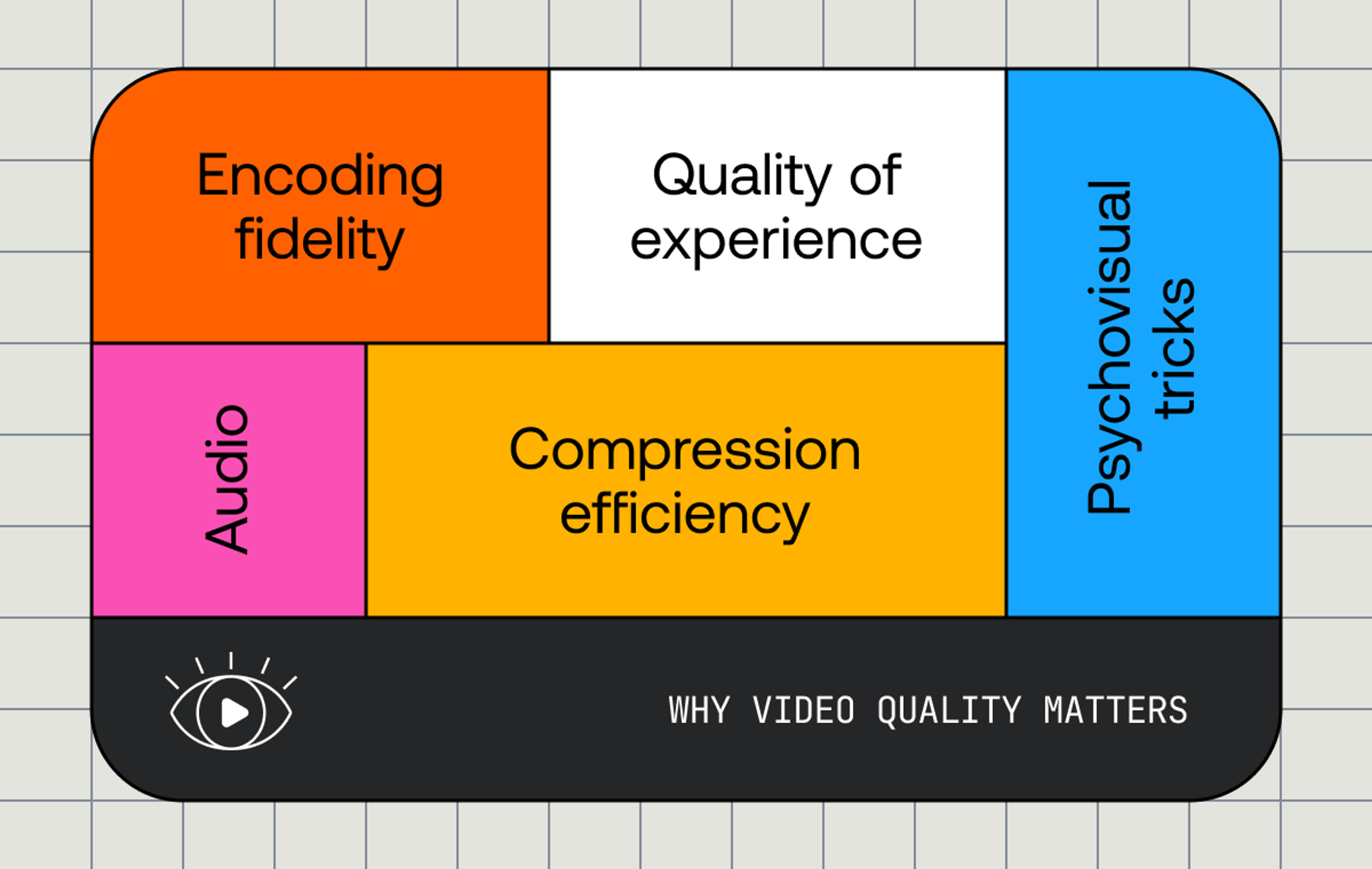

What you want from video encoding is to get the best possible compression for your use case — and that’s pretty complicated! There are a lot of factors you need to consider: compatibility with players, deliverability over the real-world internet, time to encode, cost to store, and, most of all, the perceptual quality you need.

What is perceptual quality (PQ)? It’s a pretty simple concept — it’s how good your content looks compared to the original. We’re not trying to judge whether the content was produced well, if your lighting was good, if your camera was high-end, or anything else like that. It’s just whether the encoding process gives you a video that looks close to the original.

Perceptual quality is one aspect of the video playback experience. Other aspects, like how long it takes for your video to start playing, whether there’s buffering, and whether there are playback errors, are an important part of the quality of experience for your audience, but they are not part of what we measure with PQ. The experience of watching a video also depends on a lot of other factors: are you watching on a cheap tablet outside in the sun, or on a big-screen TV in a dark room? Today, though, we’re just going to talk about PQ, and not about all these other important factors.

At Mux, our goal is to help you with these problems, so you can focus on building great experiences for your users without having to spend all your time thinking about every detail of how video technologies work. And so, we’re trying to make sure we can give you good quality video, while keeping costs down, encoding time fast, and being able to deliver it well over the internet to your users around the globe.

Lossy and lossless compression

When you compress a ZIP file and later uncompress it, the output is identical to the original input, down to the last byte. This is because ZIP is a type of ‘lossless’ encoding: we’re able to store some things compressed (smaller than the original) without discarding any information at all.
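To make the lossless round trip concrete, here is a minimal sketch using Python’s built-in `zlib` module, which implements DEFLATE, the compression method most ZIP files use. The input bytes are just an illustrative example we made up.

```python
import zlib

# Illustrative input (an assumption for this sketch): repetitive data compresses well.
original = b"perceptual quality " * 1000

compressed = zlib.compress(original)
restored = zlib.decompress(compressed)

print(len(original), "bytes in,", len(compressed), "bytes stored")
# Lossless means the round trip gives back exactly the same bytes.
print("identical after round trip:", restored == original)
```

The stored size is much smaller than the input, and the restored bytes compare equal to the original, which is exactly the property lossy codecs give up.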

You can do the same with audio. Formats like FLAC and ALAC (Apple Lossless) output exactly the same audio as the original input. There’s no possibility of them sounding worse in any way.

Video is harder, though. As an example, take raw 1080p video at 30 frames per second (fps). Even the smallest common option, 4:2:0 chroma subsampling with 8-bit samples, is about 750 megabits per second. Go up to 4K at 60 fps with 4:2:2 and 10-bit samples, and you’re at about 10 gigabits per second. That’s going to be tough to stream over your home internet connection (or even your corporate intranet), let alone your mobile phone on the go!

Typically, raw video doesn’t losslessly compress very well. You might compress it by a factor of 2 or 3, but that’s not enough. We want to compress video by a factor of at least 100 — that’s what it takes to have video you can deliver consistently well over the internet! Without this, most home internet connections wouldn’t be able to stream video at all — and even where you could, it’d be too expensive for infrastructure providers to offer it.
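If you want to check the raw bitrate figures above yourself, the arithmetic is short. This is a rough sketch that assumes “4K” means 3840x2160 and that samples are packed at exactly their bit depth; the function name is ours, not part of any library.

```python
def raw_bitrate_bps(width, height, fps, bit_depth, chroma):
    """Uncompressed video bitrate in bits per second."""
    # Average samples per pixel: one luma sample plus two chroma planes,
    # subsampled according to the chroma format.
    samples_per_pixel = {"4:2:0": 1.5, "4:2:2": 2.0, "4:4:4": 3.0}[chroma]
    return width * height * samples_per_pixel * bit_depth * fps

hd = raw_bitrate_bps(1920, 1080, 30, 8, "4:2:0")
uhd = raw_bitrate_bps(3840, 2160, 60, 10, "4:2:2")

print(f"1080p30 raw: {hd / 1e6:.0f} Mbit/s")               # about 746
print(f"4K60 raw:    {uhd / 1e9:.2f} Gbit/s")              # about 9.95
print(f"1080p30 at 100x: {hd / 100 / 1e6:.1f} Mbit/s")     # about 7.5
```

A 100x reduction brings the 1080p example down to single-digit megabits per second, which is the kind of rate a home connection can realistically sustain.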

This is where lossy compression comes in. In video compression, the decoded output is normally not bit-for-bit identical to the input! When a video encoder encodes video, it has to make a lot of decisions about what to preserve as accurately as possible, and what doesn’t matter so much. That’s very complicated, and it’s a major part of why video encoding is difficult. There are a lot of tradeoffs to make here. If a video is compressed to too low a bitrate, it’ll look bad: low resolution, blurry or blocky, or full of weird visual artifacts, and your users are going to complain. If a video is compressed to too high a bitrate, you’re needlessly increasing costs, and increasing the chances your users will see rebuffering.

Lossy compression is never perfect but it’s super important to have it look good enough to not distract from the content. After all, you’re using video because your users want the content, not because they’re excited about video compression (well, some of us are excited about video compression…).

As an aside: we do this for audio too! Audio codecs like MP3 and AAC use lossy compression, though these are typically trying to squeeze out a 10x reduction in size, rather than the 100x or more we often want from video codecs.

Video encoding and perceptual quality

So, what do we know? Two big things. First, it’s super important to compress video by a lot. Second, doing so makes it an imperfect reproduction of your original video, because we’re throwing away information that humans (your users, unless you’re making TikTok Just For Cats) can see.

Perceptual quality is about measuring how close an encoded video looks to the source video. Remember, this isn’t a value judgement of whether the video looks good: you can calculate PQ for a professionally produced Hollywood movie, or for a video your grandparents recorded of you standing for the first time on a shaky VHS camcorder in the 1980s. It’s solely a measure of how close the output of the encoder is to the input.

What does poor PQ look like? Well, the most common artifact (that’s the term we use for “visibly bad encoding”) you’ve probably seen is “blockiness” — your video is full of squares, typically 16x16 squares, with very obvious boundaries from one to the next. You might also see “ringing” where you see unwanted noise next to a sharp edge in the video (a sharp edge might be seen in text in your video, or other sharply defined objects). You might also see “banding” where a smooth gradient (the sky, or a dark area) ends up showing distinct bands of colour, or “smearing” where a moving object in the video temporarily leaves behind a smear of colour where it previously was.

How do we measure it?

The gold standard for measuring PQ is to ask a group of people to compare an encoded video against the original, and ask them, “How good does this look?”. Taking the average of their ratings, you get a Mean Opinion Score (MOS). Often, this is done with a simple scale from 1-5, though other tests might use different scales.
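As a tiny illustration of the arithmetic, a MOS is just the mean of the individual ratings. The ratings below are hypothetical, invented purely for this example.

```python
from statistics import mean

# Hypothetical ratings from five viewers on a 1-5 scale (illustrative only).
ratings = [4, 5, 3, 4, 4]

mos = mean(ratings)
print(f"MOS: {mos:.1f}")  # 4.0
```

Real subjective tests involve much more care than this (controlled viewing conditions, screening of viewers, many more ratings), but the final score is still an average.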

Unfortunately, asking a group of people to compare videos is very expensive if you have a lot of videos (and here at Mux, we ingest millions of videos every month), so it isn’t a practical method at scale. It’s also much slower than asking a computer to do the work for you!

Thankfully, the computers are ready to do the work. There are a number of widely used algorithms for estimating PQ for a video. In general, the older ones are computationally cheaper but give results that correlate less well with MOS (the scores from humans judging). The newer algorithms are more complicated but correlate well with the experience of a real human watching a video. Remember, though, that PQ isn’t the only thing that matters for the overall experience.

We’re not going to go through every approach here as there are decades of research into this problem space. We’re going to walk through some of the more popular approaches that have seen widespread use over the years.

PSNR

The oldest widely used algorithm for video quality is PSNR (Peak Signal-to-Noise Ratio). This ratio is really quick to calculate and because of this, was historically a very popular option. But it doesn’t really measure anything to do with how a human perceives the video, and thus is considered inadequate in modern use.
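For reference, PSNR is defined as 10·log10(MAX² / MSE), where MSE is the mean squared error between the reference and the distorted samples and MAX is the largest possible sample value (255 for 8-bit video). Here is a minimal pure-Python sketch; the sample values are made up, and a real tool would run this per frame over whole image planes.

```python
import math

def psnr(reference, distorted, max_value=255):
    """PSNR in dB between two equal-length sequences of sample values."""
    if len(reference) != len(distorted):
        raise ValueError("inputs must have the same number of samples")
    # Mean squared error between corresponding samples.
    mse = sum((r - d) ** 2 for r, d in zip(reference, distorted)) / len(reference)
    if mse == 0:
        return math.inf  # identical inputs: no noise at all
    return 10 * math.log10(max_value ** 2 / mse)

# Illustrative 8-bit luma samples (assumed values, not from a real video).
reference = [52, 55, 61, 66, 70, 61, 64, 73]
distorted = [50, 56, 60, 68, 70, 60, 66, 72]

print(f"{psnr(reference, distorted):.2f} dB")
```

Notice that nothing in the formula knows where in the frame the errors are or what they look like, which is why PSNR can disagree with what a viewer actually sees.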

SSIM

SSIM (Structural Similarity Index Measure) is an algorithm that was introduced in 2004 (20 years ago!) that attempts to incorporate a number of aspects of human vision to compute a score that is better aligned with what your users are likely to think of the visual quality of a video. This is still reasonably cheap to compute and is often considered a useful proxy in situations where using a more complicated algorithm is too slow.
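To give a feel for what SSIM computes, here is a simplified sketch of its core formula, which compares the means, variances, and covariance of the two signals. This is a deliberate simplification: the published method evaluates the formula over small local windows of each frame and averages the results, whereas this sketch treats the whole input as a single window. The function name and sample values are ours.

```python
def global_ssim(x, y, max_value=255):
    """SSIM formula applied once over the whole signal (single-window simplification)."""
    n = len(x)
    mu_x = sum(x) / n
    mu_y = sum(y) / n
    # Population variance and covariance of the two signals.
    var_x = sum((a - mu_x) ** 2 for a in x) / n
    var_y = sum((b - mu_y) ** 2 for b in y) / n
    cov_xy = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / n
    # Small stabilising constants from the standard SSIM definition.
    c1 = (0.01 * max_value) ** 2
    c2 = (0.03 * max_value) ** 2
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator

# Illustrative 8-bit samples (assumed values, not from a real video).
reference = [52, 55, 61, 66, 70, 61, 64, 73]
distorted = [50, 56, 60, 68, 70, 60, 66, 72]

print(f"{global_ssim(reference, distorted):.4f}")
```

A score of 1 means the two inputs are identical; the score drops as brightness, contrast, or structure diverge.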

VMAF

Fast-forwarding to 2016, researchers at Netflix, together with scientists at the University of Southern California and Université de Nantes, published a new method called Video Multimethod Assessment Fusion (VMAF). It takes a number of different low-level metrics (all of which have different benefits and drawbacks) and uses a machine learning model, based on Support Vector Machines, to combine them into a single number that describes the overall quality of the video.

VMAF has been refined further over the years: it has been tuned for specific content types and use cases, and faster implementations have been released. Over the past several years it has consistently been the most widely used metric in the industry. However, the use cases it’s been tuned for aren’t universal. It’s focused on full-screen, long-form video playback, so it’s not always useful as a completely general-purpose PQ metric. As with all the other metrics discussed here, you have to understand the limitations of the metric to know when it applies.

With VMAF, the final generated score ranges from 0 to 100. The industry generally considers a score of 94 to be a good compromise for the highest quality used in streaming: neither so high that you’re wasting a lot of (expensive) bandwidth, nor so low that the quality visibly suffers.

Why not go higher?

You might be wondering, if we have metrics that tell us how good a video encoding is… why wouldn’t we always aim for the top? Why not get a VMAF score of 100? Why not guarantee that we’re always getting a VMAF score of 94, if that’s our target?

The biggest reason here is that higher bitrates (which you’ll generally need for higher quality) have downsides too. They cost a lot more both to store and to deliver to end users, and as you approach “perfect”, the bitrate you need will skyrocket on complex content. Your end users also need to be able to reliably stream the content in real time. There’s little value in having a top rendition in your ladder that’s so high that almost nobody can view it!

That’s why we pick a “good enough” target — such as a VMAF score of 94. It’s a good tradeoff between excessive bitrate (and hence excessive cost and limited usability) and excellent quality, for most content for end-user delivery.
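One way to picture the “good enough” idea: given a handful of trial encodes of the same video, pick the lowest bitrate that still meets the target score. The sketch below is only an illustration of that tradeoff, not a description of how Mux or any particular encoder chooses bitrates; the function name and all the numbers are hypothetical.

```python
def cheapest_rendition(candidates, target_score=94.0):
    """Return the lowest-bitrate (bitrate_kbps, score) pair that meets the target."""
    good_enough = [c for c in candidates if c[1] >= target_score]
    if not good_enough:
        # Nothing reaches the target: fall back to the best score available.
        return max(candidates, key=lambda c: c[1])
    return min(good_enough, key=lambda c: c[0])

# Hypothetical trial encodes of one video: (bitrate in kbit/s, quality score).
trial_encodes = [
    (2000, 88.5),
    (3500, 92.1),
    (5000, 94.3),
    (8000, 96.0),
    (12000, 97.1),
]

print(cheapest_rendition(trial_encodes))  # (5000, 94.3)
```

In this made-up example, going from 5,000 to 12,000 kbit/s more than doubles the bitrate for a gain of under three points, which is the diminishing return that makes a fixed target attractive.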

Stay tuned to learn more

Now you understand a little more about PQ and some of the approaches the industry uses to measure it.

Helping you to understand the tradeoffs of adjusting PQ and why “just make it higher quality” isn’t always the right solution for your users is an important part of providing a great service. Over the next couple of months, we’ll be exploring more about PQ, what Mux is doing to optimise it, and how we can help you build better video.