Updated 8/4/21: We hit pause on audience-adaptive encoding. Read the details on why after the section break below.

Mux recently announced our new Audience Adaptive Encoding (AAE). However, we left out a deeper explanation of how and why it actually works.

Today, I'm going to do a deep dive into how AAE works and why you should try it out.

Why Did We Make It?

AAE evolved from our work on fast Per-Title Encoding, which alters the encoding ladder based on content type (we use AI models to quickly classify content types and generate ladders from those predictions). The Per-Title Encoding methodology was a great solution for how we hoped customers were watching videos, but it turns out that's not how they were actually watching videos.

The vast majority of bitrate ladders out there encode based on a theoretical audience. Engineers make their best guess, and they hope their actual audience matches up to their assumptions. At Mux, however, we can use our Mux Data platform to see how users are actually consuming video. That means we can optimize not only for content type but for resolution and bandwidth as well.

You might be asking, "Is all that really necessary, though? My video seems to be working just fine."

So what happens if you don't optimize and just use a standard encoding ladder?

For example, let's say a platform has a significant portion of mobile users watching at 640p, and the rest of their users watching at 720p (let's assume both groups have 3Mbps of bandwidth).

A standard encoding ladder might include renditions of 432p at 1Mbps and 720p at 3Mbps. In this case, the 640p users would either have to:

1) Grab the 720p rendition, which wastes bits per pixel.

2) Grab the 432p rendition, which reduces the quality.

In both cases, your mobile users are losing out.
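To put rough numbers on that trade-off, here is a minimal sketch. It assumes the encoded and displayed video share the same aspect ratio, and the helper names `wasted_pixel_fraction` and `upscale_factor` are hypothetical, made up for this illustration only.

```python
def wasted_pixel_fraction(encoded_height: int, display_height: int) -> float:
    """Fraction of encoded pixels discarded when the player downscales.

    Assumes equal aspect ratios, so pixel count scales with height squared.
    Returns 0.0 when the rendition is not larger than the display.
    """
    if encoded_height <= display_height:
        return 0.0
    return 1.0 - (display_height / encoded_height) ** 2


def upscale_factor(encoded_height: int, display_height: int) -> float:
    """How much the player must stretch each dimension to fill the display.

    Returns 1.0 when the rendition is not smaller than the display.
    """
    if encoded_height >= display_height:
        return 1.0
    return display_height / encoded_height


# The 640p viewer from the example, against the two standard renditions.
for height in (720, 432):
    print(
        f"{height}p rendition: "
        f"{wasted_pixel_fraction(height, 640):.0%} of pixels wasted, "
        f"{upscale_factor(height, 640):.2f}x upscale"
    )
```

Under these assumptions, the 720p rendition spends about a fifth of its pixels on detail the 640p viewer never sees, while the 432p rendition has to be stretched by nearly 1.5x in each dimension.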

What makes it worse is the difficulty of even knowing whether this situation is happening. Without the resolution and bandwidth distributions of your audience, you cannot make optimal encoding decisions for a bitrate ladder. You're completely in the dark unless, of course, you happen to have a Data API that works seamlessly with your Video API.

When you realize you have a video platform but no data.

Methodology

Using Mux Data, we are able to see exactly how users are consuming video and use that knowledge to optimize the bitrate ladder.

To begin, we run our instant Per-Title solution to find the convex hull of a video. This is the optimal resolution for each bitrate in order to maximize video quality (as measured by VMAF). If you're using our Per-Title Encoding, this is where we stop. We choose standard bitrate encodes from the convex hull, and you can rest assured that we are maximizing quality at those bitrates.
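As an illustration of what "the optimal resolution for each bitrate" means, here is a brute-force sketch. It assumes you already have VMAF scores for a grid of test encodes; the numbers in `SAMPLE_ENCODES` are invented for this example, and the function name is hypothetical. This is not how the instant Per-Title solution works, since that predicts the hull rather than measuring it.

```python
def best_resolution_per_bitrate(encodes):
    """Map each bitrate to the (height, vmaf) pair with the highest VMAF.

    `encodes` is an iterable of (bitrate_kbps, height, vmaf) tuples.
    """
    best = {}
    for bitrate, height, vmaf in encodes:
        # Keep the resolution that scores highest at this bitrate.
        if bitrate not in best or vmaf > best[bitrate][1]:
            best[bitrate] = (height, vmaf)
    return dict(sorted(best.items()))


# Hypothetical measurements: (bitrate_kbps, height, vmaf).
SAMPLE_ENCODES = [
    (1000, 432, 78.0), (1000, 720, 70.0), (1000, 1080, 58.0),
    (3000, 432, 84.0), (3000, 720, 90.0), (3000, 1080, 86.0),
    (6000, 432, 85.0), (6000, 720, 93.0), (6000, 1080, 96.0),
]

hull = best_resolution_per_bitrate(SAMPLE_ENCODES)
for bitrate, (height, vmaf) in hull.items():
    print(f"{bitrate} kbps -> {height}p (VMAF {vmaf})")
```

With this made-up data, low bitrates favor the smaller frame and high bitrates favor the larger one, which is the typical shape of the trade-off.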

However, as we saw earlier, this is an incomplete solution since it doesn't take into account the different ways users consume video. In order to do that, we first need to make an important decision: where to place the highest quality rendition in the ladder.

ML All the Way Down

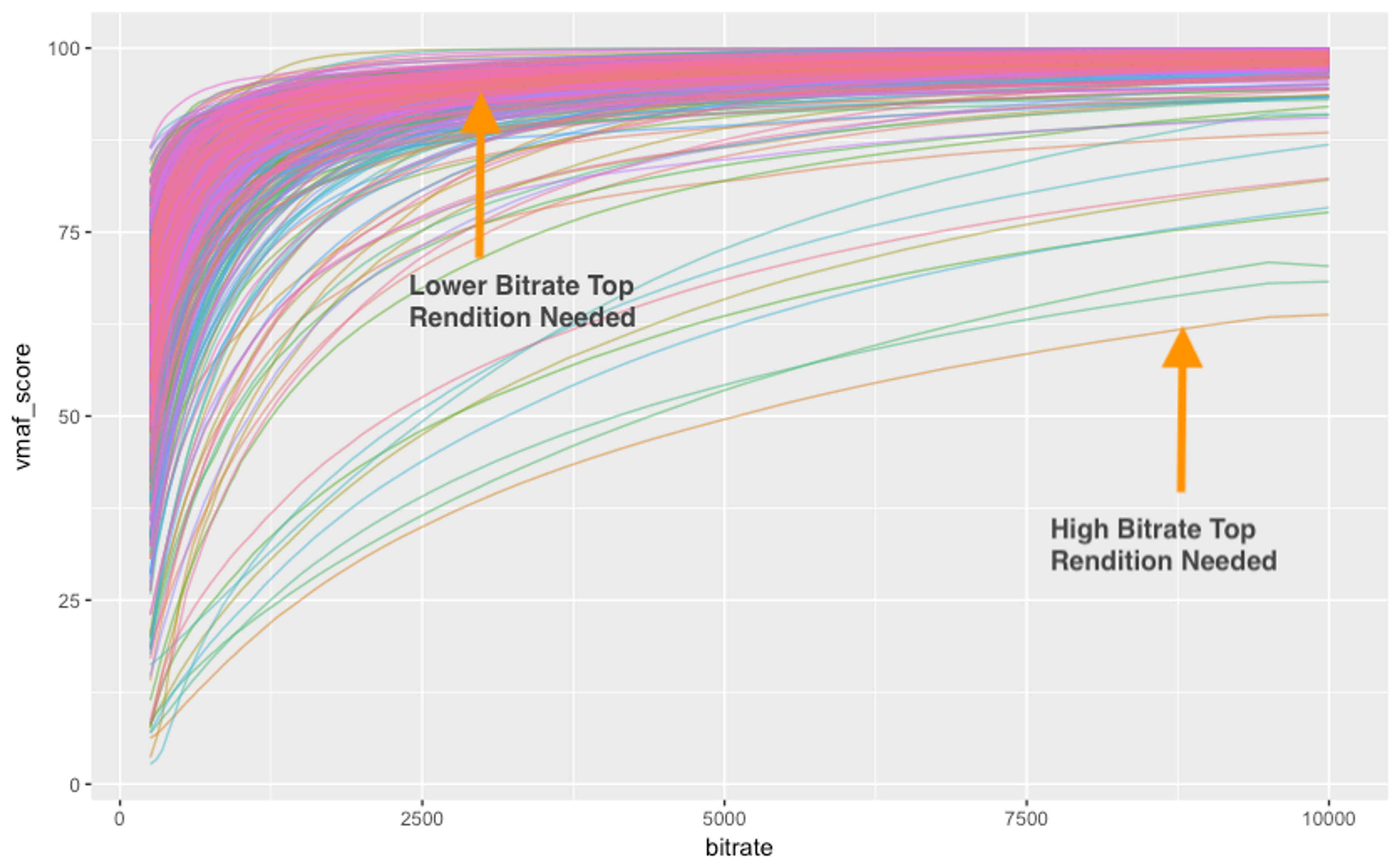

The top rendition is the basis for choosing a smart bitrate ladder because it serves as the catch-all for all the users with high connection speeds who just want great video quality. You want to choose the lowest bitrate possible while still ensuring a high level of quality. The problem is, this bitrate depends entirely on the shape of the convex hull. Below is a sample of the convex hulls we use to train our prediction model:

A Sample of Convex Hulls

Because we're using machine learning to quickly generate these convex hulls, we don't have a reliable estimate of the VMAF at each bitrate. However, our model output does contain important metadata and video features which we can feed into another machine learning model. We utilize a boosted-tree algorithm in order to translate our Per-Title tool into a top rendition selection model which estimates the lowest bitrate at which quality can be maintained.
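The target that the selection model estimates, the lowest bitrate at which quality holds up, can be illustrated with a much simpler stand-in. The sketch below is not the boosted-tree model. It assumes, purely for illustration, that a quality score is known at each hull point, and both the sample hull and the threshold of 93 are hypothetical values.

```python
def lowest_bitrate_meeting_quality(hull, min_vmaf):
    """Return the lowest bitrate whose hull point reaches `min_vmaf`.

    `hull` maps bitrate_kbps -> (height, vmaf). If no point reaches the
    threshold, fall back to the bitrate of the highest-quality point.
    """
    candidates = [b for b, (_, vmaf) in hull.items() if vmaf >= min_vmaf]
    if candidates:
        return min(candidates)
    return max(hull, key=lambda b: hull[b][1])


# Hypothetical hull with known quality at each point.
sample_hull = {
    1000: (432, 78.0),
    3000: (720, 90.0),
    4500: (1080, 94.0),
    6000: (1080, 96.0),
    8000: (1080, 96.5),
}

top_bitrate = lowest_bitrate_meeting_quality(sample_hull, min_vmaf=93.0)
print(f"Top rendition: {sample_hull[top_bitrate][0]}p at {top_bitrate} kbps")
```

In this example the extra bits spent at 6000 and 8000 kbps buy very little quality over 4500 kbps, which is why the lowest bitrate above the threshold makes a sensible top rung.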

At this point, we can look at the Mux Data user information to estimate the bitrate-resolution pairs for the rest of the encoding ladder. This is the part where we can see lots of mobile users watching at 640p and create a rendition at their viewing resolution instead of a standard 432p. In this way, our tool adapts to your audience in order to optimize encoding.

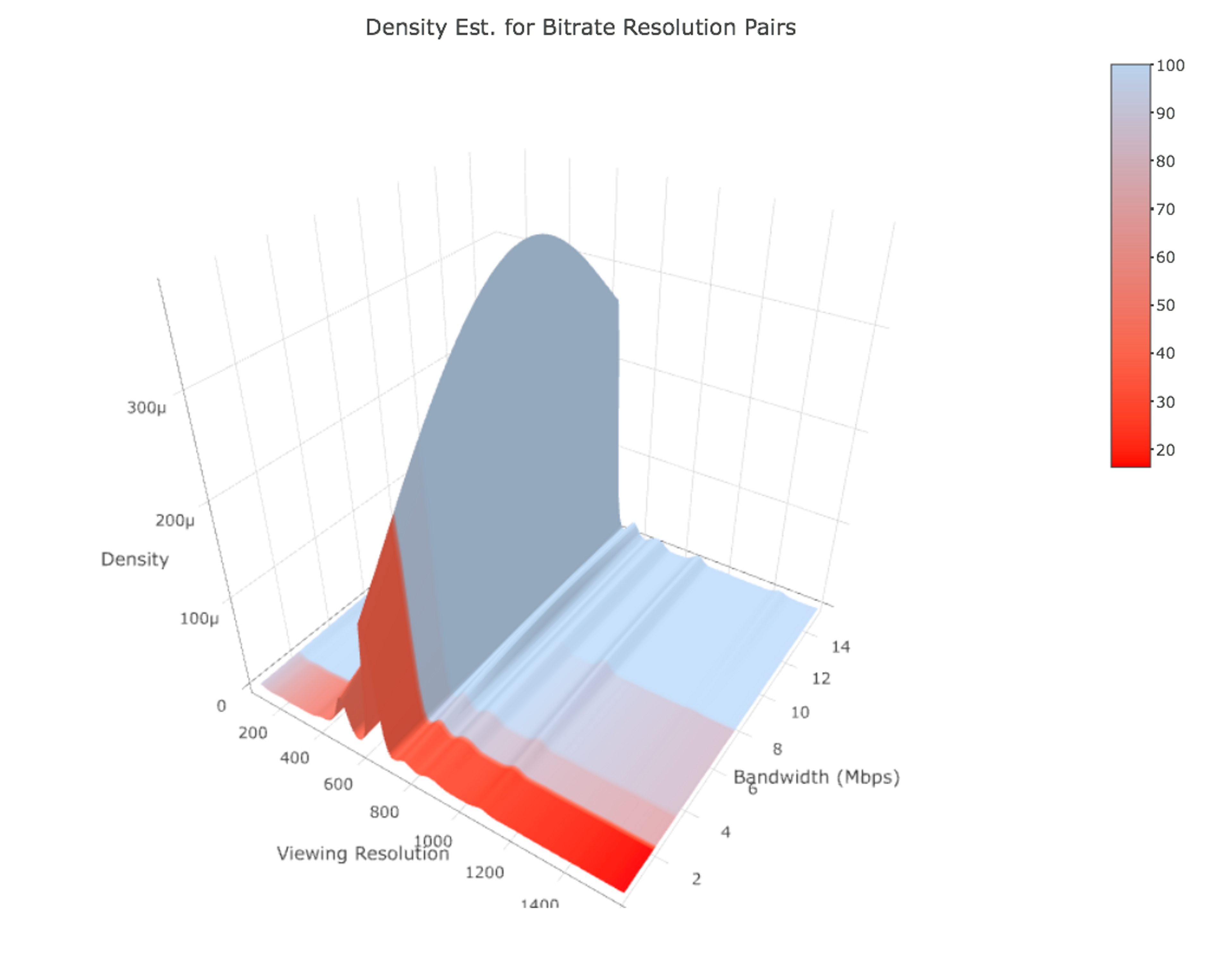

That said, we are continually evaluating the data behind users' video consumption, which can often change, and we work to estimate the best renditions for a given video. Visualized, a snapshot distribution might look something like this:

The surface color depicts estimated VMAF quality for a sample rendition ladder. We want to minimize the amount of red for as many users as possible.

We can see the different bumps and curves of how and where users are viewing video, and then we create renditions to improve their video experience. This means choosing renditions so we don't isolate large populations of users, and we minimize the poor quality experiences (the red bands).
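A toy version of that selection step might look like the following. It is a sketch under loud assumptions: the audience shares are invented, the candidate heights are arbitrary, and the cost function (absolute log ratio between display height and the nearest rendition) is a simple stand-in for the estimated VMAF surface described above, not the actual objective.

```python
from itertools import combinations
from math import log


def mismatch_cost(display_height, rung_heights):
    """Cost of the closest rendition, penalizing up- and downscaling alike."""
    return min(abs(log(display_height / h)) for h in rung_heights)


def choose_rungs(audience_share, candidate_heights, k):
    """Pick the k rendition heights with the lowest audience-weighted cost.

    `audience_share` maps display height -> fraction of viewers.
    Brute force over all combinations, fine for a handful of candidates.
    """
    def expected_cost(rungs):
        return sum(
            share * mismatch_cost(height, rungs)
            for height, share in audience_share.items()
        )

    return min(combinations(sorted(candidate_heights), k), key=expected_cost)


# Hypothetical audience: many mobile viewers at 640p, the rest at 720p.
audience = {640: 0.45, 720: 0.55}
candidates = [360, 432, 540, 640, 720, 1080]

print(choose_rungs(audience, candidates, k=2))
```

For this audience, the search lands on renditions at 640p and 720p rather than the standard 432p and 720p pair, matching the example from earlier in the post.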

Try it!

Online video remains in a constant state of flux as companies release new ways to improve the streaming experience. Some succeed while others fail. Some are exciting, industry-shaking developments, while others are just marketing fluff. For most of us, though, each announcement feels a little like this.

The point of Mux Video is to create solutions to the problems you don't even know to worry about. Instead of worrying about the latest streaming developments, we want Mux Video to be a single solution that permanently stays on the bleeding edge.

Video should be easy. Video should just work. We're hoping to make our Audience Adaptive Encoding a big part of that, and we would love for you to try it out. Sign up for a free account today, or reach out to info@mux.com with any questions.

Thanks for reading. I hope you found this interesting and informative. For questions regarding this post, feel free to reach me at ben@mux.com.

Why we hit pause on audience-adaptive encoding

A year after launching Mux Video, we released Audience Adaptive Encoding. Unfortunately, we’ve had to pause development on that service and pull it from general availability.

Our intention with these services supports what Mux is all about: creating solutions for video so you can focus on building your application, and solving problems you may not even know to worry about yet. However, as we saw demand for Mux Live Streaming grow, we had to shift resources away from experimental machine learning to support it. We still fully believe in machine-learning-driven encoding, but it deserves focused resources and its own dedicated team.

Since shifting engineering resources, we’ve scaled Mux Live and built a bunch of supporting features like live simulcasting, clipping, engagement metrics, and more. We’re still striving to take care of the hard parts of video infrastructure to make it easy for any developer to build video.

Hopefully, we’ll be able to revisit that service (or something even better) soon. In the meantime, if you have feedback or questions, contact us.