We’ve seen a number of customer use cases leveraging new AI tools. We decided to put together some new guides to help get your creative juices flowing and answer the questions: “What should I be doing with AI?” and “How can I improve my video experience with AI?”

In the docs, we’re calling these “AI workflows,” and we’re starting with three simple use cases that we’ve seen repeated often enough that it makes sense to write them down.

All these workflows are:

- AI-model agnostic: You can use OpenAI, Claude, or you can bring your own model to the party. It doesn’t matter to us.

- Developer-first: Since Mux is an API platform for developers, we’re approaching these with the assumption that you are a developer and you’re comfortable handling API requests/responses and saving data into your database or CMS. Having said that, the general ideas still stand on their own and you can piece together a no-code flow with various tools to do the same thing.

- A mix of Mux features and third-party tools: Each workflow involves both Mux and one or more third parties.

Generating video chapters with AI

Although not required, this works great with Mux Player, which recently added chapters support. When you initialize Mux Player, you pass in chapters in JSON format and boom, you get chapter markers on the player, just like you’re used to with YouTube and other platforms.

The basic idea here is that you use Mux’s auto-generated captions feature, pull the transcript of the video, and prompt the AI model to understand the transcript and generate sensible chapters. Easy enough. The nice thing about this approach is that you can add a human review step to check the generated chapters, make sure they make sense, and make slight tweaks if necessary. I find that in the current state of things, I don’t fully trust an AI model’s output right off the bat. I can sleep easier at night with a human in the loop.
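To make the moving parts concrete, here is a minimal sketch of the glue code around the model call. It assumes the transcript arrives as WebVTT text and that the player wants chapter items shaped like `{startTime, value}`. The function names, the prompt wording, and the `{"start", "title"}` reply format are all made up for illustration, and the actual call to your model of choice is left out.

```python
import json
import re
from dataclasses import dataclass

# Matches the start of a WebVTT cue timing line, e.g. "00:01:02.500 --> ...".
CUE_TIMING = re.compile(r"^(?:(\d+):)?(\d{2}):(\d{2})\.(\d{3})\s+-->\s+")


@dataclass
class Cue:
    start: float  # cue start time in seconds
    text: str


def parse_vtt(vtt: str) -> list[Cue]:
    """Extract (start time, text) pairs from a WebVTT transcript."""
    cues: list[Cue] = []
    for block in re.split(r"\n\s*\n", vtt.strip()):
        lines = block.splitlines()
        for i, line in enumerate(lines):
            match = CUE_TIMING.match(line.strip())
            if not match:
                continue
            hours, minutes, seconds, millis = match.groups()
            start = (int(hours or 0) * 3600 + int(minutes) * 60
                     + int(seconds) + int(millis) / 1000)
            text = " ".join(part.strip() for part in lines[i + 1:] if part.strip())
            if text:
                cues.append(Cue(start, text))
            break
    return cues


def build_prompt(cues: list[Cue]) -> str:
    """Build a prompt asking for chapters in a hypothetical JSON format."""
    transcript = "\n".join(f"[{int(cue.start)}s] {cue.text}" for cue in cues)
    return (
        "Split this video transcript into chapters. Reply with only a JSON "
        'array of objects shaped like {"start": <seconds>, "title": <string>}.'
        "\n\n" + transcript
    )


def parse_chapters(reply: str, duration: float) -> list[dict]:
    """Validate the model's reply and convert it to {startTime, value} items."""
    chapters = []
    for item in json.loads(reply):
        start = float(item["start"])
        title = str(item["title"]).strip()
        # Drop chapters the model placed outside the video or left untitled.
        if 0 <= start < duration and title:
            chapters.append({"startTime": start, "value": title})
    return sorted(chapters, key=lambda chapter: chapter["startTime"])
```

The output of `parse_chapters` is what you would store and put in front of a human reviewer before handing it to the player. Filtering out-of-range timestamps is a cheap sanity check, not a substitute for that review.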

See the full workflow for Generating chapters for videos with AI.

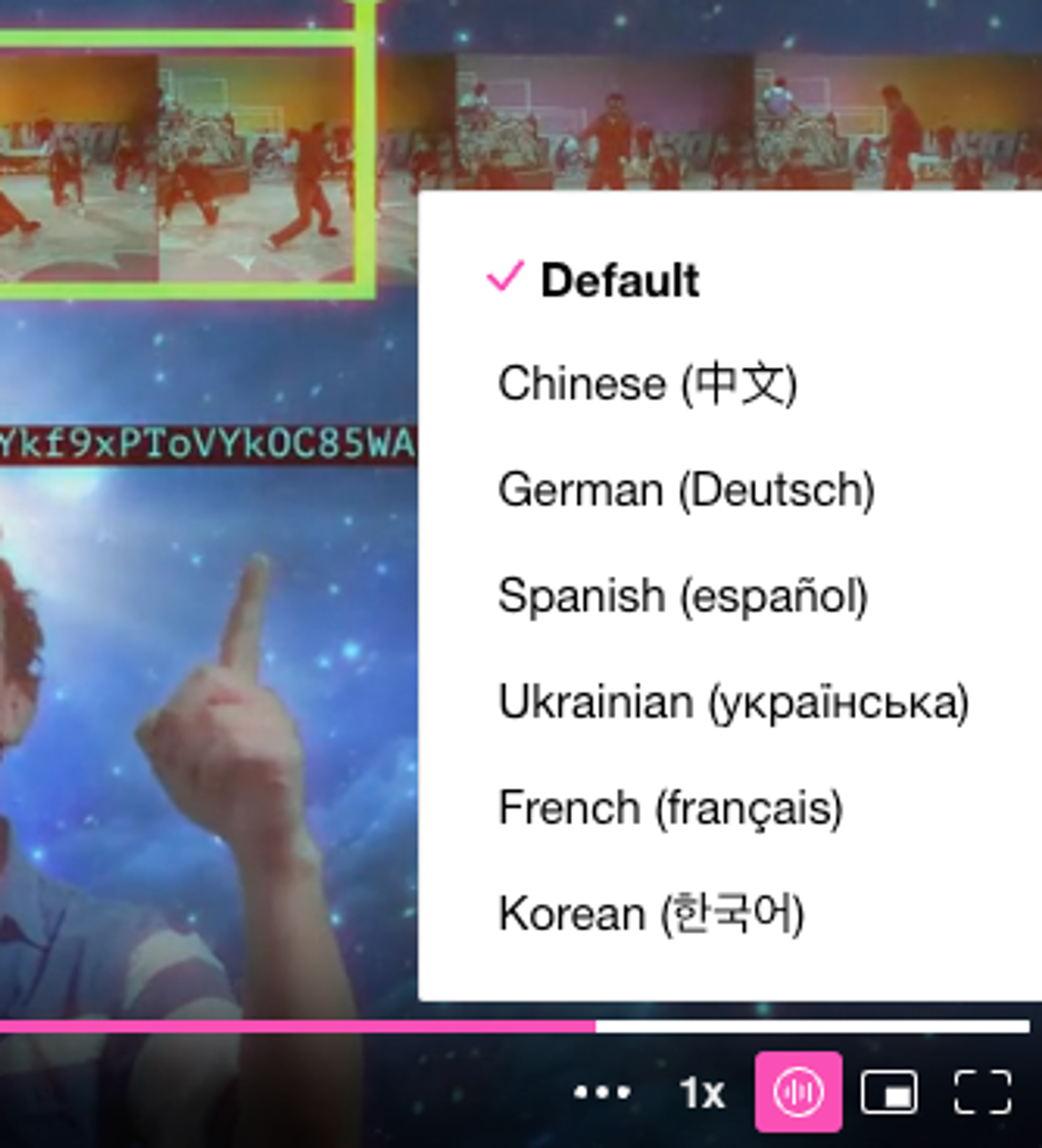

Automatic translation and dubbing

About a year ago, Mux Video and Mux Player added multi-track audio support. That, coupled with the MP4 static rendition enhancements to support an audio-only version of the video, makes this a powerful workflow.

This guide leverages Sieve, a third-party product, to do the AI-powered task of converting the audio track from one language to another. Once you have the new audio track, you can attach it to the Mux Asset as an additional audio track and make it available for selection within the player.
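As a rough sketch of that last step, the snippet below builds the HTTP request that would attach a dubbed audio file to an existing asset. It assumes the Mux "create asset track" endpoint (`POST /video/v1/assets/{ASSET_ID}/tracks`) with basic auth from an access token ID and secret, and it assumes the dubbed file is already hosted at a URL Mux can fetch. The function name and every value passed to it are placeholders, so check the request shape against the current API reference before relying on it.

```python
import base64
import json
import urllib.request


def build_audio_track_request(
    asset_id: str,
    audio_url: str,
    language_code: str,
    name: str,
    token_id: str,
    token_secret: str,
) -> urllib.request.Request:
    """Build (but do not send) a request that adds an audio track to an asset."""
    body = {
        "url": audio_url,                # where the dubbed audio file is hosted
        "type": "audio",
        "language_code": language_code,  # e.g. "es" for a Spanish dub
        "name": name,                    # label for the track in the player menu
    }
    credentials = base64.b64encode(f"{token_id}:{token_secret}".encode()).decode()
    return urllib.request.Request(
        url=f"https://api.mux.com/video/v1/assets/{asset_id}/tracks",
        data=json.dumps(body).encode(),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {credentials}",
        },
        method="POST",
    )
```

Passing the returned request to `urllib.request.urlopen` sends it. Keeping construction separate from sending makes the payload easy to inspect or log before anything touches a real asset.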

See the full workflow for Automatic translating and dubbing videos with AI.

There’s even a one-click val.town script you can deploy and point your webhook to for easy integration.

Summarizing and tagging

This one is my favorite. If you have a large video library that you’ve built up over time, an obvious question you might ask yourself is: “What the heck are all these videos about, anyway?” Or: “I know we made a video about X topic, but I don’t know where to find it.”

That’s where summarizing and tagging comes in. Historically, this was a task reserved for humans, who had to spend hours painstakingly watching every video. AI models today seem to be pretty good at summarizing and tagging things in sensible ways, and that can unlock exciting possibilities:

- Recommendation engines (you watched X video, you might like Y video)

- Contextual search (show me all videos that talk about Z)

- Providing context to viewers so they have more of an idea about what a video contains before they watch it

- Making it easy for video creators to add rich descriptions and tags to their videos. Instead of requiring users to manually enter descriptions and tags, you might want to recommend descriptions and tags and let them refine or edit them to their liking.

See the full workflow for Summarizing and tagging videos with AI, including a system prompt that worked well in our testing.
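Here is a small sketch of what you might do with the model’s output once you have it. It assumes you asked the model to reply with JSON shaped like `{"summary": ..., "tags": [...]}`. That shape is made up for this example, as are the function names. The first function cleans up the reply before you save it, and the second is a deliberately naive “you watched X, you might like Y” ranking based on how many tags two videos share.

```python
import json


def parse_summary(reply: str, max_tags: int = 10) -> dict:
    """Validate a model reply shaped like {"summary": str, "tags": [str, ...]}."""
    data = json.loads(reply)
    summary = str(data["summary"]).strip()
    tags: list[str] = []
    for tag in data.get("tags", []):
        # Lowercase and collapse whitespace so "HLS" and " hls " become one tag.
        normalized = " ".join(str(tag).lower().split())
        if normalized and normalized not in tags:
            tags.append(normalized)
    return {"summary": summary, "tags": tags[:max_tags]}


def recommend(watched_id: str, library: dict[str, list[str]], limit: int = 3) -> list[str]:
    """Rank other videos by Jaccard similarity of their tag sets."""
    watched = set(library[watched_id])
    scores = []
    for video_id, tags in library.items():
        if video_id == watched_id:
            continue
        other = set(tags)
        union = watched | other
        score = len(watched & other) / len(union) if union else 0.0
        if score > 0:
            scores.append((score, video_id))
    # Highest similarity first; break ties by video ID for stable output.
    scores.sort(key=lambda pair: (-pair[0], pair[1]))
    return [video_id for _, video_id in scores[:limit]]
```

Normalizing tags up front matters more than it looks: models tend to vary capitalization and spacing between runs, and exact-match tag overlap falls apart without it. A production system would likely replace the overlap score with embeddings or a search index.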

How Mux fits into the AI + video landscape

Mux is using AI in a few different ways right now. The most prominent and customer-facing is using the OpenAI Whisper model to auto-generate captions on your videos, for free, if you’re using Smart encoding.

There are some other things we have in the works, but we’ll save that for another day. In the fast-paced AI-powered technology world, one thing that is not changing about Mux is that Mux is still here to power your video infrastructure, whether it is AI-backed or not. Just like the non-AI-created videos that came before, AI-created videos still need:

Those services are all things we’ve been doing for years and will continue to do as the AI-powered video future evolves.

What’s next?

There are probably about 1,000 more creative use cases incorporating AI, and Mux strives to provide you with all the primitives you need to easily and cost-effectively integrate with the AI system of your choice. If you’re working on a new use case (or if you’re trying to pull something off but it’s just not quite working because you need something else from Mux), please let us know. We’d love to hear about it!