One of the hot topics of NAB, IBC, and Demuxed last year was “low latency” live video streaming. In this post we’ll examine the spectrum of different latencies that users experience, discuss the use cases for each, and explore evolving technologies which can deliver the best experience for your users.

Before we get too far into exploring the world of latency, it’s important to be clear about what we’re measuring. Latency, in this context, is the time between something happening in the real world and that moment becoming visible in the video stream on a viewer’s device. You’ll often hear this referred to as end-to-end latency, wall-clock latency, or “glass to glass” latency.

When does latency matter?

The story of latency is complicated, and how much latency is acceptable depends entirely on the problem you’re trying to solve. Below, we’ve grouped the kinds of experiences that most commonly run into challenges with the latency the end user sees.

Voice and real-time communication

The most latency-sensitive applications are those built around real-time communication. In general, these take place either one-to-one or in a small group: for example, a personal video call or a video conference within a business.

For real-time communication, the experience is generally considered to start degrading above 200ms of latency (0.2 seconds!); beyond this limit, conversations become noticeably harder. The challenges don’t end at the human interaction level, either. As latency increases, the technical problems of noise and echo cancellation become significantly more complex.

Once latency in a real-time context exceeds around one second, the experience degrades so far that having a meaningful conversation becomes nearly impossible. For this reason, real-time communication protocols and applications are often designed to compromise on visual quality in order to sustain acceptable audio quality and latency.

Examples:

- FaceTime

- Google Meet/Hangouts

- Skype

Sports & eSports

One of the interesting challenges in live sports is that the lowest possible latency is not necessarily the objective. Most live sporting events are now simulcast online alongside more traditional delivery chains over cable, satellite, or terrestrial broadcast. For many broadcasters, the target is to match the latency viewers experience in a traditional broadcast chain, which tends to vary between 2 and 15 seconds and averages around 6 seconds. It is worth remembering, however, that in many cases this latency is deliberate. The artificial delay is introduced so that content can be censored before it airs, avoiding potentially large fines from broadcasting watchdogs such as the FCC in the USA or Ofcom in the UK. This is known as "Broadcast Delay" or "Seven Second Delay".

For large, nation-gripping sporting events, it is incredibly important that the two delivery chains not only have similar latency but are also synchronized. As an example, during the penalty shootout in the last World Cup, it was easy to hear the cheers (and groans) of England fans from different pubs and parties in central London. Unfortunately, the UHD stream on BBC iPlayer was 20-30 seconds behind linear TV… there’s nothing like having the climax of a World Cup campaign ruined by your neighbors! The inverse situation isn’t desirable either: Thursday Night Football streamed via Twitch.tv, for example, is often ahead of the cable broadcast.

eSports presents an interesting case: with no comparable linear broadcast experience, it is actually less sensitive to latency than many live sports. However, as social networks and news outlets improve their own latency, the last thing viewers want is a push notification announcing the result of a match that hasn’t yet finished on their live stream.

Examples:

- Sports: FIFA World Cup on BBC iPlayer or Fubo.tv

- eSports: Dota 2 “The International” on Twitch

Interactive experiences and interactive UGC streams

As UGC (user-generated content) live streaming culture has evolved, it has become clear that this space is about much more than watching other people play video games. The variety of content on a site like Twitch is larger than anyone could have expected, but what has become universal across every type of content there is interactivity. UGC streams have become oriented around interaction with chat, which acts as a meeting point for a streamer’s community of viewers.

Our friends over at Twitch have been pioneers of low latency live streaming experiences for a long time and have worked relentlessly to reduce the latency for streamers on their platform, in some cases reducing latency to only a couple of seconds.

We also include wider interactive experiences in this group. One of our favorite apps over the last couple of years has been HQ Trivia. The combination of a painfully infuriating quiz and a great, synchronized video experience is enjoyable and addictive. It’s hard to measure the end-to-end latency of HQ Trivia, since we have no immediate point of reference for when the signal was acquired; however, just by watching the live presenter respond to chat, we can reasonably assume it’s only a handful of seconds at worst. What’s most impressive (and also critical) about HQ Trivia is the synchronization we have observed across a diverse set of devices, network locations, and conditions. The synchronization is particularly interesting because it extends beyond the video to the questions and answers pushed to the user within the app via separate control channels.

Examples:

- IRL, creative, and video game streamers on Twitch.tv

- HQ Trivia

Auctions and gambling

One area where we’re seeing new growth in live streaming experiences is auctions and gambling.

Bidding remotely at auctions isn’t new; phone bids have been commonplace for a long time. Recently, however, phone bidding has increasingly given way to online bidding while watching a live stream. Not only have we seen large, traditional auction houses such as Sotheby’s starting to integrate live streaming experiences, but we’re also starting to see the creation of online-only live streaming auctions.

We're also noticing live streaming experiences being built specifically for gambling. Last year, a lot of sites started to build casino blackjack, roulette, or poker experiences with real-life dealers. These streams are also based on interactivity, but they additionally need to keep up the pace of the game: it’s critical that the time between each action by a dealer or player is kept to a minimum, to avoid interruptions (bad for the casino) and keep the game flowing (which matters to the user).

Streamer sodapoppin bets big at a video casino

Examples:

- Auctions: Sotheby’s

- Video casino: BetOnline

Low latency tradeoffs

One of the most important things to keep in mind when designing a live streaming experience is that reducing latency comes with sacrifices. Ever since video first appeared on the internet, we've relied on pre-buffering content to make sure the viewer's experience isn't affected by adverse network conditions. Realistically, any attempt to reduce latency also reduces the amount of content a player can have buffered, risking a poorer experience for end users.

Over the past 10 years, the video industry has been investing in adaptive bitrate (ABR) technologies, which improve the viewer's experience by adapting the stream to the available bandwidth. In a nutshell, the player measures the viewer's bandwidth as it downloads each chunk of video and uses that measurement to decide which quality to request for the next chunk. In practice this works really well, but reducing latency in this model can introduce problems.
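To make the measure-then-choose loop concrete, here is a minimal Python sketch of throughput-based rendition selection. It assumes a hypothetical three-rung bitrate ladder and a 0.8 safety factor; real players smooth their estimates over several segments and often take buffer level into account, so treat this as an illustration rather than how any particular player works.

```python
from dataclasses import dataclass


@dataclass
class Rendition:
    name: str
    bitrate_kbps: int  # average encoded bitrate of this rung of the ladder


def measure_throughput_kbps(segment_bytes: int, download_seconds: float) -> float:
    """Throughput observed while downloading one segment, in kilobits per second."""
    return segment_bytes * 8 / 1000 / download_seconds


def choose_rendition(ladder: list[Rendition], throughput_kbps: float,
                     safety_factor: float = 0.8) -> Rendition:
    """Pick the highest bitrate that fits within a fraction of the measured throughput."""
    budget = throughput_kbps * safety_factor
    affordable = [r for r in ladder if r.bitrate_kbps <= budget]
    if not affordable:
        # Nothing fits: fall back to the lowest rung rather than giving up.
        return min(ladder, key=lambda r: r.bitrate_kbps)
    return max(affordable, key=lambda r: r.bitrate_kbps)


# Hypothetical ladder, for illustration only.
LADDER = [Rendition("360p", 800), Rendition("720p", 3000), Rendition("1080p", 6000)]

# A 6-second, ~3 Mbps segment (2.25 MB) that took 1.5 seconds to download.
estimate = measure_throughput_kbps(2_250_000, 1.5)
print(estimate, choose_rendition(LADDER, estimate).name)
```

The safety factor leaves headroom so that a small drop in bandwidth doesn't immediately drain the buffer.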

Traditionally, to reduce latency on a live stream, we would reduce the duration of each chunk of video that the viewer receives. However, this not only reduces the duration of stream that the player has buffered, but also means the player has to request chunks more frequently, increasing the number of HTTP requests sent, each of which carries significant overhead.
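As a rough illustration of that overhead, the sketch below counts the HTTP requests a single viewer makes per hour of a live stream at different segment durations. It assumes one request per media segment plus, for HLS, one media playlist reload per segment duration; it ignores separate audio renditions, encryption keys, and retries.

```python
def requests_per_hour(segment_seconds: float, reload_playlist_each_segment: bool = True) -> int:
    """Approximate HTTP requests one live viewer makes per hour."""
    segments = round(3600 / segment_seconds)
    # Assumption: a live HLS player reloads its media playlist roughly once per segment.
    playlist_reloads = segments if reload_playlist_each_segment else 0
    return segments + playlist_reloads


for duration in (10, 6, 2, 1):
    print(f"{duration:>2}s segments -> {requests_per_hour(duration)} requests/hour per viewer")
```

Under these assumptions, halving the segment duration doubles the number of requests every viewer sends to the CDN.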

Ultimately, when the aim is lower latency, there will always be some sacrifice in the effectiveness of ABR technologies. The more of a stream a client has buffered, the more resilient playback will be to packet loss, network changes, or cache misses. Unfortunately, some of the technologies we’re seeing marketed in the name of low latency also reduce redundancy, increase complexity, and introduce significant vendor lock-in.
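A toy model shows why buffer depth matters. Assume a complete network outage during which no media arrives while playback keeps draining the buffer in real time; the player then stalls for however long the outage outlasts its buffer. The numbers below are illustrative, not measurements.

```python
def rebuffer_seconds(buffer_seconds: float, outage_seconds: float) -> float:
    """Seconds of stalled playback caused by a complete network outage (toy model).

    No media arrives during the outage, playback drains the buffer in real time,
    and the player stalls once the buffer is empty.
    """
    return max(0.0, float(outage_seconds - buffer_seconds))


for buffered in (30, 10, 6, 2):
    print(f"{buffered:>2}s buffered, 5s outage -> {rebuffer_seconds(buffered, 5):.0f}s stall")
```

On a live stream, the buffer can't be deeper than the distance between the player and the live edge, so every second of latency removed is a second of buffer that can no longer absorb an outage like this.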

Approaches to reducing latency

In general, three main strategies for reducing latency are becoming common in the live streaming technology space. We’ll discuss each of them below and map them to the use cases we identified above.

Short segment duration

Latency: Between 30 and 10 seconds

Use Cases: The majority of live streams delivered today, most sports & some eSports

Resilience: High

Viewership: Large

As we discussed earlier, the traditional approach to reducing latency on an ABR-powered stream is to reduce the duration of the segments delivered to the end user. Over the last few years, the average segment duration has dropped from around 10 seconds to around 6 seconds, following updated recommendations in Apple’s HLS specification. Shorter segments generally result in lower latency because most players are implemented to pre-buffer a certain number of segments before starting playback. For example, the embedded video player on iOS devices and Safari will always buffer three video segments before starting playback. Three segments of 2 seconds each (roughly the minimum feasible duration) give a minimum latency of around 6 seconds, before taking into account the time taken to ingest, transcode, package, and deliver the media segments.
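The arithmetic above fits in a small helper. It assumes a player that waits for a fixed number of full segments (three by default) before starting, and uses a hypothetical `pipeline_seconds` parameter to stand in for the ingest, transcode, packaging, and delivery time that the calculation deliberately leaves out.

```python
def min_latency_seconds(segment_seconds: float, segments_buffered: int = 3,
                        pipeline_seconds: float = 0.0) -> float:
    """Lower bound on glass-to-glass latency for a player that pre-buffers N full segments.

    pipeline_seconds is a placeholder for ingest, transcode, packaging and delivery time.
    """
    return segments_buffered * segment_seconds + pipeline_seconds


for duration in (10, 6, 2):
    print(f"{duration}s segments -> at least {min_latency_seconds(duration):.0f}s behind live")
```

Because the segment count is multiplied by the duration, shortening segments is the only lever available to a player that insists on three full segments.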

The DASH protocol improves on this behavior slightly by allowing the manifest to specify how much of a stream needs to be buffered before playback can start, using the minBufferTime attribute of a DASH manifest. Unfortunately, in the real world only some DASH players and devices have implemented this behavior, and many continue to download a set number of segments before starting playback. This is particularly common on smart TVs and other living-room devices.
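For players that do honor the manifest, reading the value is straightforward. This minimal sketch uses Python's standard `xml.etree.ElementTree` to pull `minBufferTime` from a trimmed-down, hypothetical MPD and converts the ISO 8601 duration to seconds. The duration parser only handles the simple PTnHnMnS form; a production player would use a complete MPD and duration parser.

```python
import re
import xml.etree.ElementTree as ET

# Hypothetical, heavily trimmed MPD: a real live manifest carries many more attributes.
SAMPLE_MPD = """<MPD xmlns="urn:mpeg:dash:schema:mpd:2011" type="dynamic"
     profiles="urn:mpeg:dash:profile:isoff-live:2011" minBufferTime="PT1.5S">
  <Period id="1"/>
</MPD>"""

_DURATION = re.compile(r"PT(?:(\d+(?:\.\d+)?)H)?(?:(\d+(?:\.\d+)?)M)?(?:(\d+(?:\.\d+)?)S)?")


def parse_iso8601_seconds(duration: str) -> float:
    """Parse simple ISO 8601 durations such as 'PT1.5S' or 'PT1M2S' (no date part)."""
    match = _DURATION.fullmatch(duration)
    if not match:
        raise ValueError(f"unsupported duration: {duration}")
    hours, minutes, seconds = (float(g) if g else 0.0 for g in match.groups())
    return hours * 3600 + minutes * 60 + seconds


def min_buffer_seconds(mpd_xml: str) -> float:
    """Read minBufferTime from the MPD root element (unprefixed attribute, no namespace)."""
    root = ET.fromstring(mpd_xml)
    return parse_iso8601_seconds(root.attrib["minBufferTime"])


def ready_to_play(buffered_seconds: float, mpd_xml: str) -> bool:
    """Start once the manifest's minimum buffer is filled, not after a fixed segment count."""
    return buffered_seconds >= min_buffer_seconds(mpd_xml)


print(min_buffer_seconds(SAMPLE_MPD), ready_to_play(1.0, SAMPLE_MPD))
```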

Real-time protocols

Latency: < 1 second

Use Cases: Voice and real-time communication, Auctions & gambling

Resilience: Low

Viewership: Small

Thanks to the proliferation of the WebRTC protocol, we now have browsers and devices with built-in real-time communication capabilities. This technology has been the basis for applications such as Google Meet/Hangouts, Facebook video chat, and many others, and generally works passably for them. However, any frequent user of video chat will be able to tell you how sensitive these systems are to low bandwidth and high packet loss, both of which are common on home broadband and cellular connections.

Because WebRTC is peer-to-peer, a call can only have a limited number of participants. However, in 2018 we started to see systems built on top of WebRTC that deliver video at high scale. For the most part, this has been implemented by adding WebRTC relay nodes to CDNs or edge compute networks, so that the browser connects to what it sees as a peer for video delivery.
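The limit on participants follows from simple arithmetic if we assume a full-mesh topology, where every participant sends its stream directly to every other participant. Many real WebRTC deployments route media through servers instead, which is essentially the idea behind the relay nodes described above; this sketch only shows why pure peer-to-peer delivery stops scaling.

```python
def full_mesh_cost(participants: int) -> tuple[int, int]:
    """Return (total peer connections, outgoing streams per participant) for a full mesh."""
    connections = participants * (participants - 1) // 2
    uploads_per_participant = participants - 1
    return connections, uploads_per_participant


for n in (2, 4, 10, 1000):
    connections, uploads = full_mesh_cost(n)
    print(f"{n:>4} participants: {connections} connections, each uploads {uploads} streams")
```

In a full mesh, each participant's uplink has to carry n - 1 copies of its own video, so the cost grows with every viewer added.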

While this approach is an innovative use of the WebRTC protocol, it isn’t really what WebRTC was designed for, and it won’t necessarily scale to the limits you need unless you’re willing to run your own WebRTC edge servers in a public cloud. We’re excited to see how far mainstream CDN vendors go in introducing public WebRTC offerings that help others implement this approach in the coming year; unfortunately, with only one CDN (Limelight) offering this today, going in this direction can limit your scale and increase your vendor lock-in. A comprehensive multi-CDN strategy has become one of the most important areas of development in the last few years: using tools like Cedexis to perform active CDN switching can decrease latency and increase stability for your end users by choosing the best CDN for each user in real time.
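Active CDN switching boils down to continuously measuring each CDN from the client's point of view and routing the next request to the best one. The sketch below is a hypothetical decision function, not Cedexis's API: the CDN names and measurements are made up, "best" is assumed to mean the lowest median time-to-first-byte, and CDNs with too many recent failures are skipped.

```python
from statistics import median


def pick_cdn(ttfb_ms: dict[str, list[float]], recent_failures: dict[str, int],
             max_failures: int = 2) -> str:
    """Return the healthy CDN with the lowest median time-to-first-byte."""
    healthy = {
        cdn: samples
        for cdn, samples in ttfb_ms.items()
        if samples and recent_failures.get(cdn, 0) <= max_failures
    }
    if not healthy:
        raise RuntimeError("no healthy CDN to route to")
    return min(healthy, key=lambda cdn: median(healthy[cdn]))


# Made-up client-side measurements (ms) for three hypothetical CDNs.
SAMPLES = {"cdn-a": [40, 45, 300], "cdn-b": [60, 62, 65], "cdn-c": [20, 25, 30]}
FAILURES = {"cdn-c": 5}
print(pick_cdn(SAMPLES, FAILURES))
```

Using the median keeps a single slow outlier (the 300ms sample) from knocking cdn-a out, while the failure count keeps a fast but unreliable CDN (cdn-c) from being chosen.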

Chunked-transfer segmented streaming

Latency: Between 4 and 1 seconds

Use Cases: UGC & interactive experiences, Sports & eSports

Resilience: Medium

Viewership: Large

Towards the end of last year, we started to see a new approach to low latency live streaming move towards standardization in multiple bodies. We’ve already talked about how segmented streaming works at scale today; chunked-transfer solutions are an elegant, backwards-compatible extension of it.

A video segment is made up of many video frames, which are organized into GOPs (groups of pictures); a segment of video will generally contain multiple GOPs. To decode part of a video stream, you generally need a complete GOP available, but that doesn’t mean your player needs a full segment in order to decode the first GOPs within it.

By leveraging a little-known part of the HTTP/1.1 specification called chunked transfer encoding, a player can make a standard HTTP request for a segment of video, and the encoder can respond with chunks of that segment (generally a full GOP each) while the rest of the segment is still being encoded. This allows the player to start decoding a segment as soon as it has enough frames available, rather than waiting for one or more full segments.
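On the wire, chunked transfer encoding is simple framing: each chunk is its size in hexadecimal, a CRLF, the bytes themselves, and another CRLF, and a zero-size chunk ends the body. The sketch below frames a segment one GOP per chunk and decodes it again. It follows the HTTP/1.1 chunked transfer coding rules (RFC 9112, section 7.1) but skips chunk extensions and trailers, and the GOP payloads are stand-in byte strings.

```python
from collections.abc import Iterable


def encode_chunked(parts: Iterable[bytes]) -> bytes:
    """Frame byte strings as an HTTP/1.1 chunked body (no extensions or trailers)."""
    body = bytearray()
    for part in parts:
        if not part:
            continue  # a zero-size chunk would prematurely signal the end of the body
        body += f"{len(part):X}\r\n".encode("ascii") + part + b"\r\n"
    body += b"0\r\n\r\n"  # last-chunk, then the empty line that ends the message
    return bytes(body)


def decode_chunked(data: bytes) -> bytes:
    """Reassemble the payload of a chunked body produced by encode_chunked."""
    payload = bytearray()
    pos = 0
    while True:
        line_end = data.index(b"\r\n", pos)
        size = int(data[pos:line_end], 16)
        pos = line_end + 2
        if size == 0:
            return bytes(payload)
        payload += data[pos:pos + size]
        pos += size + 2  # skip the chunk data and its trailing CRLF


# Stand-in GOPs: in a real pipeline each would be flushed as soon as it is encoded.
gops = [b"GOP-1", b"GOP-2" * 6]
wire = encode_chunked(gops)
print(decode_chunked(wire) == b"".join(gops))
```

Because each chunk is self-delimiting, the server never needs to know the final segment size up front, which is exactly what lets it start sending before the segment is finished.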

Beyond needing an encoder that supports chunked transfer outputs, and a CDN that supports this mode of transfer (most already do), fairly minor changes are needed to the player in order to support this low latency streaming approach. It is worth remembering however, that while this approach can provide impressive latency figures, the same challenges with a reduced buffer size in the player still exist - you may want to consider falling back to a higher latency strategy for end users who experience repeated buffering.
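One way to implement that fallback is a small policy object that watches rebuffering events and leaves low-latency mode when they pile up. Everything here is hypothetical: the class name, the three-events-in-60-seconds threshold, and what "leaving low-latency mode" means (for example, targeting a larger buffer further from the live edge) are assumptions for illustration.

```python
from collections import deque


class LatencyModePolicy:
    """Hypothetical policy: leave low-latency mode after repeated rebuffering."""

    def __init__(self, max_rebuffers: int = 3, window_seconds: float = 60.0):
        self.max_rebuffers = max_rebuffers
        self.window_seconds = window_seconds
        self.rebuffer_times = deque()
        self.low_latency = True

    def record_rebuffer(self, now: float) -> None:
        """Register a rebuffering event at time `now` (seconds on a monotonic clock)."""
        self.rebuffer_times.append(now)
        # Forget events that have slid out of the observation window.
        while now - self.rebuffer_times[0] > self.window_seconds:
            self.rebuffer_times.popleft()
        if len(self.rebuffer_times) >= self.max_rebuffers:
            # Hypothetical action: the player would now target a larger buffer,
            # sitting further behind the live edge (a higher-latency mode).
            self.low_latency = False


policy = LatencyModePolicy()
for t in (0.0, 100.0, 110.0, 120.0):
    policy.record_rebuffer(t)
print(policy.low_latency)
```

The sliding window means an occasional isolated stall doesn't cost a viewer the low-latency experience; only a cluster of stalls does.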

On the client side, one of the bigger challenges this approach introduces is measuring network performance. Most players today rely on segment download performance to estimate the available bandwidth, but with chunked-transfer solutions it is completely normal for a 10-second segment to take 10 seconds to download, because that is the rate at which the encoder produces the chunks.
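One possible workaround, sketched here under our own assumptions, is to measure throughput only while bytes are actually arriving, ignoring the idle gaps spent waiting for the encoder. The chunk timings are hypothetical, and the sketch assumes the player can observe when each chunk's first and last bytes arrive (for example, through a streaming fetch API). It is not taken from the LHLS or CMAF work.

```python
def naive_throughput_kbps(total_bytes: int, request_time: float, last_byte_time: float) -> float:
    """Classic estimate: bytes over the whole request duration, idle time included."""
    return total_bytes * 8 / 1000 / (last_byte_time - request_time)


def burst_throughput_kbps(chunks: list[tuple[int, float, float]]) -> float:
    """Estimate throughput from (bytes, first_byte_time, last_byte_time) per chunk.

    Only time spent actually receiving each chunk is counted, so waiting for the
    encoder to produce the next GOP does not drag the estimate down.
    """
    total_bytes = sum(size for size, _, _ in chunks)
    active_seconds = sum(end - start for _, start, end in chunks)
    if active_seconds <= 0:
        raise ValueError("need at least one chunk with a measurable transfer time")
    return total_bytes * 8 / 1000 / active_seconds


# Hypothetical 10-second segment at ~3 Mbps: five 2-second GOP chunks of 750 kB,
# each arriving in a 0.125-second burst as soon as the encoder finishes it.
CHUNKS = [(750_000, t, t + 0.125) for t in (2.0, 4.0, 6.0, 8.0, 10.0)]
total = sum(size for size, _, _ in CHUNKS)
print(round(naive_throughput_kbps(total, 0.0, 10.125)))  # close to the encoder's bitrate
print(round(burst_throughput_kbps(CHUNKS)))              # closer to what the network can do
```

In practice, very short bursts give noisy estimates, so a real player would likely smooth them over several chunks before acting on them.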

The really great news is that lots of people have already started investing in this approach and are making progress towards standardization. Currently, two groups are working to standardize chunked-transfer segmented streaming. The LHLS (Low latency HLS) community is working within the HLS-JS project to define an RFC; you can get involved on this GitHub issue. At the same time, work is ongoing in the CMAF (Common Media Application Format) group, where a subtly different approach is being taken, oriented around a CMAF-compliant fMP4 sub-fragment rather than a GOP contained within a Transport Stream segment. This aligns naturally with MPEG-DASH delivery.

Both approaches already have support from many of the video streaming powerhouses. LHLS currently has contributions from Mux, JWPlayer, Wowza, Akamai, Mist Server, and AWS Elemental. The CMAF approach also has broad support beyond the CMAF community, with GPAC, Akamai, Harmonic, THEOplayer, Bitmovin, and others contributing. We're hopeful that we'll soon see the two groups converge. In particular, the ability to use CMAF media chunks in an LHLS manifest would introduce an interchangeable media format that could be served with two different manifests depending on the client's device.

While these approaches are still early in the standardization process, they are elegant and have the advantage of fitting neatly into existing multi-CDN strategies. They also remain backwards compatible when a player isn’t capable of supporting low-latency playback.

Proprietary solutions

In the last 12 months, we’ve seen a lot of new proprietary low latency live streaming solutions making inroads into the market, most of which use WebRTC as a transport mechanism. As we mentioned earlier in this post, we have concerns about vendor lock-in and the scale limitations of WebRTC solutions. We’re eager to watch these solutions gain adoption and scale, so we can see how their vendors tackle these challenges.

Here are some of the proprietary solutions that we think are super interesting:

Limelight Video Acceleration

Limelight have partnered with Red5 Pro to build out a WebRTC based solution offering high-scale low latency live streaming experiences. The demo we saw at IBC (in Amsterdam) was particularly impressive, with an end-to-end latency of less than 500ms on a stream coming from the west coast of the USA.

Wowza Streaming Cloud with Ultra Low Latency

Wowza offers a couple of different solutions for low latency live streaming at the moment, including a proprietary technology called “WOWZ”, which is available as part of their SaaS product line. WOWZ offers under-3-second latency when used with Wowza’s own player, which puts it solidly in the Ultra Low Latency category; this is super impressive at scale. As Scott and Jamie from Wowza explained at Demuxed 2017, the WOWZ protocol is based on pushing video through WebSockets.

You can also leverage WebRTC in Wowza’s Streaming Engine if you have a CDN which supports WebRTC relays, such as Limelight.

Phenix

Phenix is a fairly new player in the market, offering a live streaming solution that claims to deliver sub-500ms latency worldwide. Phenix claims to use AI to address the usual issues seen when a large number of users suddenly join a single stream (a.k.a. the thundering herd). Phenix is built on top of the WebRTC protocol and delivers ABR content from its own global network of edge nodes.

Nanocosmos

We don’t know too much about Nanocosmos’ solutions, but they claim to have a scalable live-streaming solution with a sub-second global latency. We haven’t been able to dig into their technology yet, but we’re interested to watch it evolve and gain traction.

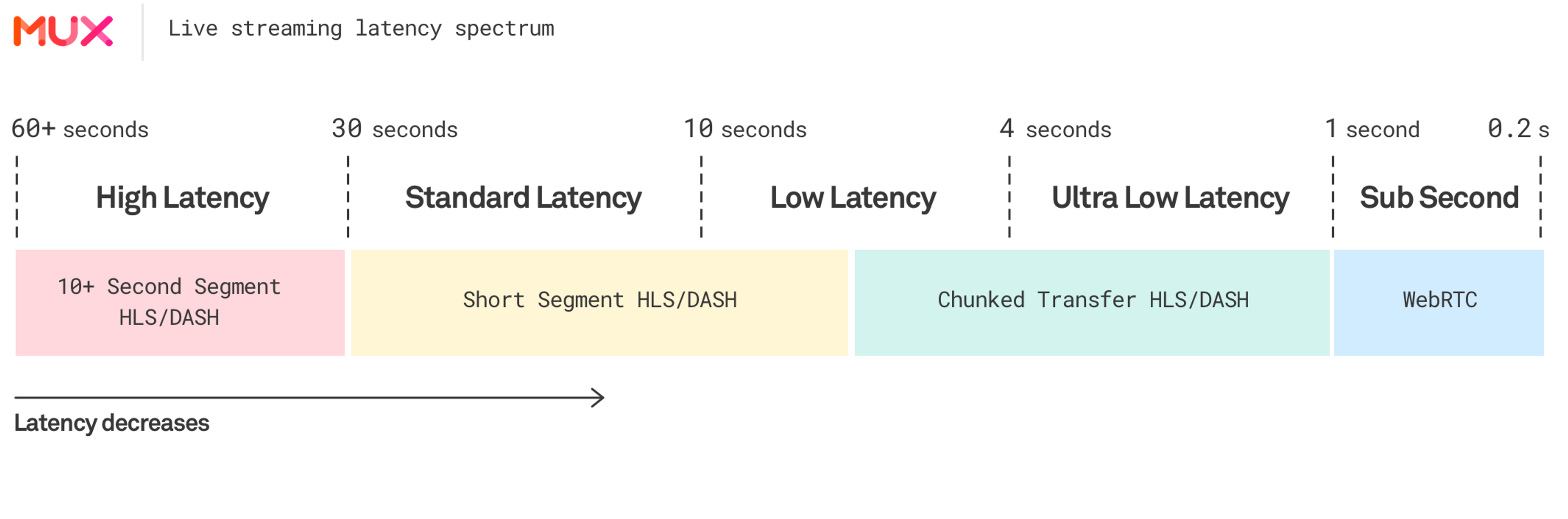

Mux’s live streaming latency spectrum

The industry has suggested a lot of terminology over the last 12 months for the different latencies within the live streaming spectrum. We’ve spent some time evaluating what’s out there, and here’s our proposal.

We'd like to thank Will Law for inspiring our definitions here.

High Latency

Latency: Over 30 Seconds

Technologies: HLS, DASH, Smooth - 10+ second segments

Use Cases: Live streams where latency doesn’t matter

Standard Latency

Latency: 30 to 10 Seconds

Technologies: Short segment HLS, DASH - 6 to 2 second segments

Use Cases: Simulcast where there’s no desire to be ahead of over-the-air broadcasting

Low Latency

Latency: 10 to 4 Seconds

Technologies: Very short segment HLS, DASH - 2 to 1 second segments OR Chunked Transfer HLS / DASH - LHLS / CMAF

Use Cases: UGC, Live experiences, Auctions, Gambling

Ultra Low Latency

Latency: 4 to 1 Seconds

Technologies: Chunked Transfer HLS / DASH - LHLS / CMAF

Use Cases: UGC, Live experiences, Auctions, Gambling

Sub-Second

Latency: Less than 1 second

Technologies: WebRTC, Proprietary solutions

Use Cases: Video Conferencing, VoIP, etc.
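To apply the spectrum above programmatically, for example when labeling measured glass-to-glass latency in analytics, the ranges can be turned into a simple classifier. Adjacent ranges share their endpoints, so the boundary handling here is our assumption: a value exactly on a boundary goes to the lower-latency tier, except at 1 second, since Sub-Second means strictly less than 1 second.

```python
def latency_tier(seconds: float) -> str:
    """Map a measured glass-to-glass latency to a tier of the Mux latency spectrum."""
    if seconds < 1:
        return "Sub-Second"
    if seconds <= 4:
        return "Ultra Low Latency"
    if seconds <= 10:
        return "Low Latency"
    if seconds <= 30:
        return "Standard Latency"
    return "High Latency"


for measured in (0.4, 3.0, 6.0, 18.0, 45.0):
    print(f"{measured:>5}s -> {latency_tier(measured)}")
```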

We’ve also put together our own version of Will Law’s diagram from Demuxed last year showing how we see the industry progressing.

The Mux live streaming latency spectrum - Mux 2019

We’re excited to work with the LHLS community to continue the work to push forward standardization of LHLS. Keep your eyes on the Mux blog for more content about low latency live streaming coming soon. In the meantime, create a free account to see how Mux Video makes live streaming easy.